Stable count distribution

|

Probability density function  | |||

|

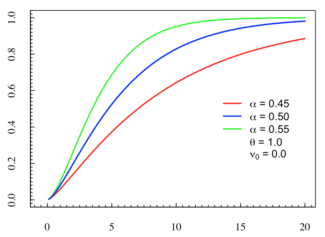

Cumulative distribution function  | |||

| Parameters |

[math]\displaystyle{ \alpha }[/math] ∈ (0, 1) — stability parameter | ||

|---|---|---|---|

| Support | x ∈ R and x ∈ [[math]\displaystyle{ \nu_0 }[/math], ∞) | ||

| [math]\displaystyle{ \mathfrak{N}_\alpha(x;\nu_0,\theta)= \frac{1}{\Gamma(\frac{1}{\alpha}+1)} \frac{1}{x-\nu_0} L_\alpha(\frac{\theta}{x-\nu_0}) }[/math] | |||

| CDF | integral form exists | ||

| Mean | [math]\displaystyle{ \frac{\Gamma(\frac{2}{\alpha})}{\Gamma(\frac{1}{\alpha})} }[/math] | ||

| Median | not analytically expressible | ||

| Mode | not analytically expressible | ||

| Variance | [math]\displaystyle{ \frac{\Gamma(\frac{3}{\alpha})}{2 \Gamma(\frac{1}{\alpha})} - \left[ \frac{\Gamma(\frac{2}{\alpha})}{\Gamma(\frac{1}{\alpha})} \right]^2 }[/math] | ||

| Skewness | TBD | ||

| Kurtosis | TBD | ||

| MGF | Fox-Wright representation exists | ||

In probability theory, the stable count distribution is the conjugate prior of a one-sided stable distribution. This distribution was discovered by Stephen Lihn (Chinese: 藺鴻圖) in his 2017 study of daily distributions of the S&P 500 and the VIX.[1] The stable distribution family is also sometimes referred to as the Lévy alpha-stable distribution, after Paul Lévy, the first mathematician to have studied it.[2]

Of the three parameters defining the distribution, the stability parameter [math]\displaystyle{ \alpha }[/math] is most important. Stable count distributions have [math]\displaystyle{ 0\lt \alpha\lt 1 }[/math]. The known analytical case of [math]\displaystyle{ \alpha=1/2 }[/math] is related to the VIX distribution (See Section 7 of [1]). All the moments are finite for the distribution.

Definition

Its standard distribution is defined as

- [math]\displaystyle{ \mathfrak{N}_\alpha(\nu)=\frac{1}{\Gamma(\frac{1}{\alpha}+1)} \frac{1}{\nu} L_\alpha\left(\frac{1}{\nu}\right), }[/math]

where [math]\displaystyle{ \nu\gt 0 }[/math] and [math]\displaystyle{ 0\lt \alpha\lt 1. }[/math]

Its location-scale family is defined as

- [math]\displaystyle{ \mathfrak{N}_\alpha(\nu;\nu_0,\theta)= \frac{1}{\Gamma(\frac{1}{\alpha}+1)} \frac{1}{\nu-\nu_0} L_\alpha\left(\frac{\theta}{\nu-\nu_0}\right), }[/math]

where [math]\displaystyle{ \nu\gt \nu_0 }[/math], [math]\displaystyle{ \theta\gt 0 }[/math], and [math]\displaystyle{ 0\lt \alpha\lt 1. }[/math]

In the above expression, [math]\displaystyle{ L_\alpha(x) }[/math] is a one-sided stable distribution,[3] which is defined as follows.

Let [math]\displaystyle{ X }[/math] be a standard stable random variable whose distribution is characterized by [math]\displaystyle{ f(x;\alpha,\beta,c,\mu) }[/math]. Then we have

- [math]\displaystyle{ L_\alpha(x)=f(x;\alpha,1,\cos\left(\frac{\pi\alpha}{2}\right)^{1/\alpha},0), }[/math]

where [math]\displaystyle{ 0\lt \alpha\lt 1 }[/math].

Consider the Lévy sum [math]\displaystyle{ Y = \sum_{i=1}^N X_i }[/math] where [math]\displaystyle{ X_i\sim L_\alpha(x) }[/math]. Then [math]\displaystyle{ Y }[/math] has the density [math]\displaystyle{ \frac{1}{\nu} L_\alpha\left(\frac{x}{\nu}\right) }[/math] where [math]\displaystyle{ \nu=N^{1/\alpha} }[/math]. Setting [math]\displaystyle{ x=1 }[/math], we arrive at [math]\displaystyle{ \mathfrak{N}_\alpha(\nu) }[/math] without the normalization constant.

The reason why this distribution is called "stable count" can be understood by the relation [math]\displaystyle{ \nu=N^{1/\alpha} }[/math]. Note that [math]\displaystyle{ N }[/math] is the "count" of the Lévy sum. Given a fixed [math]\displaystyle{ \alpha }[/math], this distribution gives the probability of taking [math]\displaystyle{ N }[/math] steps to travel one unit of distance.

Integral form

Based on the integral form of [math]\displaystyle{ L_\alpha(x) }[/math] and [math]\displaystyle{ q=\exp(-i\alpha\pi/2) }[/math], we have the integral form of [math]\displaystyle{ \mathfrak{N}_\alpha(\nu) }[/math] as

- [math]\displaystyle{ \begin{align} \mathfrak{N}_\alpha(\nu) & = \frac{2}{\pi\Gamma(\frac{1}{\alpha}+1)} \int_0^\infty e^{-\text{Re}(q)\,t^\alpha} \frac{1}{\nu} \sin(\frac{t}{\nu})\sin(-\text{Im}(q)\,t^\alpha) \,dt, \text{ or } \\ & = \frac{2}{\pi\Gamma(\frac{1}{\alpha}+1)} \int_0^\infty e^{-\text{Re}(q)\,t^\alpha} \frac{1}{\nu} \cos(\frac{t}{\nu})\cos(\text{Im}(q)\,t^\alpha) \,dt . \\ \end{align} }[/math]

The double-sine integral above leads to the integral form of the standard CDF:

- [math]\displaystyle{ \begin{align} \Phi_\alpha(x) & = \frac{2}{\pi\Gamma(\frac{1}{\alpha}+1)} \int_0^x \int_0^\infty e^{-\text{Re}(q)\,t^\alpha} \frac{1}{\nu} \sin(\frac{t}{\nu})\sin(-\text{Im}(q)\,t^\alpha) \,dt\,d\nu \\ & = 1- \frac{2}{\pi\Gamma(\frac{1}{\alpha}+1)} \int_0^\infty e^{-\text{Re}(q)\,t^\alpha} \sin(-\text{Im}(q)\,t^\alpha) \,\text{Si}(\frac{t}{x}) \,dt, \\ \end{align} }[/math]

where [math]\displaystyle{ \text{Si}(x)=\int_0^x \frac{\sin(t)}{t}\,dt }[/math] is the sine integral function.

The Wright representation

In "Series representation", it is shown that the stable count distribution is a special case of the Wright function (See Section 4 of [4]):

- [math]\displaystyle{ \mathfrak{N}_\alpha(\nu) = \frac{1}{\Gamma\left( \frac{1}{\alpha}+1 \right)} W_{-\alpha,0}(-\nu^\alpha) , \, \text{where} \,\, W_{\lambda,\mu}(z) = \sum_{n=0}^\infty \frac{z^n}{n!\,\Gamma(\lambda n+\mu)}. }[/math]

This leads to the Hankel integral: (based on (1.4.3) of [5])

- [math]\displaystyle{ \mathfrak{N}_\alpha(\nu) = \frac{1}{\Gamma\left( \frac{1}{\alpha}+1 \right)} \frac{1}{2 \pi i} \int_{Ha} e^{t-(\nu t)^\alpha} \, dt, \, }[/math] where Ha represents a Hankel contour.

Alternative derivation – lambda decomposition

Another approach to derive the stable count distribution is to use the Laplace transform of the one-sided stable distribution, (Section 2.4 of [1])

- [math]\displaystyle{ \int_0^\infty e^{-z x} L_\alpha(x) \, dx = e^{-z^\alpha}, }[/math] where [math]\displaystyle{ 0\lt \alpha\lt 1 }[/math].

Let [math]\displaystyle{ x=1/\nu }[/math], and one can decompose the integral on the left hand side as a product distribution of a standard Laplace distribution and a standard stable count distribution,

- [math]\displaystyle{ \frac{1}{2} \frac{1}{\Gamma(\frac{1}{\alpha}+1)} e^{-|z|^\alpha} = \int_0^\infty \frac{1}{\nu} \left( \frac{1}{2} e^{-|z|/\nu} \right) \left(\frac{1}{\Gamma(\frac{1}{\alpha}+1)} \frac{1}{\nu} L_\alpha \left( \frac{1}{\nu} \right) \right) \, d\nu = \int_0^\infty \frac{1}{\nu} \left( \frac{1}{2} e^{-|z|/\nu} \right) \mathfrak{N}_\alpha(\nu) \, d\nu , }[/math]

where [math]\displaystyle{ z \in \mathsf{R} }[/math].

This is called the "lambda decomposition" (See Section 4 of [1]) since the LHS was named the "symmetric lambda distribution" in Lihn's former works. However, it has several more popular names such as "exponential power distribution", or the "generalized error/normal distribution", often referred to when [math]\displaystyle{ \alpha\gt 1 }[/math]. It is also the Weibull survival function in reliability engineering.

Lambda decomposition is the foundation of Lihn's framework of asset returns under the stable law. The LHS is the distribution of asset returns. On the RHS, the Laplace distribution represents the leptokurtic noise, and the stable count distribution represents the volatility.
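The lambda decomposition can be checked numerically in the one case where the stable count density has a closed form, [math]\displaystyle{ \alpha=1/2 }[/math]. The following is a minimal sketch, not a reference implementation: the helper names (`stable_count_half`, `simpson`, `lambda_lhs`, `lambda_rhs`), the integration cutoff of 200 and the grid size are all illustrative assumptions, and the check is only meaningful for [math]\displaystyle{ z \neq 0 }[/math], where the integrand vanishes smoothly at the origin.

```python
import math


def stable_count_half(nu):
    """Closed-form standard stable count density at alpha = 1/2."""
    if nu <= 0.0:
        return 0.0
    return math.sqrt(nu) * math.exp(-nu / 4.0) / (4.0 * math.sqrt(math.pi))


def simpson(f, a, b, n=20000):
    """Composite Simpson rule on [a, b]; n must be even."""
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3.0


def lambda_lhs(z, alpha=0.5):
    """Left-hand side: normalized exponential power density."""
    return 0.5 / math.gamma(1.0 / alpha + 1.0) * math.exp(-abs(z) ** alpha)


def lambda_rhs(z, upper=200.0):
    """Right-hand side at alpha = 1/2: Laplace kernel mixed over nu.

    The cutoff `upper` is an assumption; the integrand decays like
    exp(-nu / 4), so the neglected tail is negligible.
    """
    def integrand(nu):
        if nu <= 0.0:
            return 0.0
        laplace = 0.5 * math.exp(-abs(z) / nu)
        return laplace / nu * stable_count_half(nu)

    return simpson(integrand, 0.0, upper)


if __name__ == "__main__":
    for z in (0.5, 1.0, 2.0):
        print(z, lambda_lhs(z), lambda_rhs(z))
```

The two columns printed for each [math]\displaystyle{ z }[/math] should agree to within the quadrature error.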

Stable Vol distribution

A variant of the stable count distribution is called the stable vol distribution [math]\displaystyle{ V_{\alpha}(s) }[/math]. The Laplace transform of [math]\displaystyle{ e^{-|z|^\alpha} }[/math] can be re-expressed in terms of a Gaussian mixture of [math]\displaystyle{ V_{\alpha}(s) }[/math] (See Section 6 of [4]). It is derived from the lambda decomposition above by a change of variable such that

- [math]\displaystyle{ \frac{1}{2} \frac{1}{\Gamma(\frac{1}{\alpha}+1)} e^{-|z|^\alpha} = \frac{1}{2} \frac{1}{\Gamma(\frac{1}{\alpha}+1)} e^{-(z^2)^{\alpha/2}} = \int_0^\infty \frac{1}{s} \left( \frac{1}{\sqrt{2 \pi}} e^{-\frac{1}{2} (z/s)^2} \right) V_{\alpha}(s) \, ds , }[/math]

where

- [math]\displaystyle{ \begin{align} V_{\alpha}(s) &= \displaystyle \frac{\sqrt{2 \pi} \,\Gamma(\frac{2}{\alpha}+1)}{\Gamma(\frac{1}{\alpha}+1)} \, \mathfrak{N}_{\frac{\alpha}{2}}(2 s^2), \,\, 0 \lt \alpha \leq 2 \\ &= \displaystyle \frac{ \sqrt{2\pi} }{ \Gamma(\frac{1}{\alpha}+1) } \, W_{-\frac{\alpha}{2},0} \left( -{(\sqrt{2} s)}^\alpha \right) \end{align} }[/math]

This transformation is named generalized Gauss transmutation since it generalizes the Gauss-Laplace transmutation, which is equivalent to [math]\displaystyle{ V_{1}(s) = 2 \sqrt{2 \pi} \, \mathfrak{N}_{\frac{1}{2}}(2 s^2) = s \, e^{-s^2/2} }[/math].
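The Gauss-Laplace special case can be verified numerically, since [math]\displaystyle{ V_1(s) = s\,e^{-s^2/2} }[/math] is explicit. The sketch below is illustrative only: the function names, the cutoff of 40 and the grid size are assumptions, and the check requires [math]\displaystyle{ z \neq 0 }[/math].

```python
import math


def stable_vol_one(s):
    """Stable vol density at alpha = 1, V_1(s) = s * exp(-s^2 / 2)."""
    if s <= 0.0:
        return 0.0
    return s * math.exp(-0.5 * s * s)


def simpson(f, a, b, n=20000):
    """Composite Simpson rule on [a, b]; n must be even."""
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3.0


def gauss_mixture(z, upper=40.0):
    """Gaussian kernel of scale s mixed over V_1(s); assumes z != 0."""
    def integrand(s):
        if s <= 0.0:
            return 0.0
        normal = math.exp(-0.5 * (z / s) ** 2) / math.sqrt(2.0 * math.pi)
        return normal / s * stable_vol_one(s)

    return simpson(integrand, 0.0, upper)


def laplace_target(z):
    """Left-hand side at alpha = 1: (1/2) exp(-|z|) / Gamma(2)."""
    return 0.5 / math.gamma(2.0) * math.exp(-abs(z))


if __name__ == "__main__":
    for z in (0.5, 1.0, 3.0):
        print(z, laplace_target(z), gauss_mixture(z))
```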

Connection to Gamma and Poisson distributions

The shape parameter of the gamma and Poisson distributions is connected to the inverse of Lévy's stability parameter, [math]\displaystyle{ 1/\alpha }[/math]. The upper regularized gamma function [math]\displaystyle{ Q(s,x) }[/math] can be expressed as an incomplete integral of [math]\displaystyle{ e^{-{u^\alpha}} }[/math] as

[math]\displaystyle{ Q(\frac{1}{\alpha}, z^\alpha) = \frac{1}{\Gamma(\frac{1}{\alpha}+1)} \displaystyle\int_z^\infty e^{-{u^\alpha}} \, du. }[/math]

By replacing [math]\displaystyle{ e^{-{u^\alpha}} }[/math] with the decomposition and carrying out one integral, we have:

[math]\displaystyle{ Q(\frac{1}{\alpha}, z^\alpha) = \displaystyle\int_z^\infty \, du \displaystyle\int_0^\infty \frac{1}{\nu} \left( e^{-u/\nu} \right) \, \mathfrak{N}_{\alpha}\left(\nu\right) \, d\nu = \displaystyle\int_0^\infty \left( e^{-z/\nu} \right) \, \mathfrak{N}_{\alpha}\left(\nu\right) \, d\nu. }[/math]

Reverting [math]\displaystyle{ (\frac{1}{\alpha}, z^\alpha) }[/math] back to [math]\displaystyle{ (s,x) }[/math], we arrive at the decomposition of [math]\displaystyle{ Q(s,x) }[/math] in terms of a stable count:

[math]\displaystyle{ Q(s,x) = \displaystyle\int_0^\infty e^{\left( -{x^s}/{\nu} \right)} \, \mathfrak{N}_{{1}/{s}}\left(\nu\right) \, d\nu. \,\, (s \gt 1) }[/math]

Differentiating [math]\displaystyle{ Q(s,x) }[/math] with respect to [math]\displaystyle{ x }[/math] (and dropping the common minus sign), we arrive at the desired formula:

- [math]\displaystyle{ \begin{align} \frac{1}{\Gamma(s)} x^{s-1} e^{-x} & = \displaystyle\int_0^\infty \frac{1}{\nu} \left[ s\, x^{s-1} e^{\left( -{x^s}/{\nu} \right)} \right] \, \mathfrak{N}_{{1}/{s}}\left(\nu\right) \, d\nu \\ & = \displaystyle\int_0^\infty \frac{1}{t} \left[ s\, {\left( \frac{x}{t} \right)}^{s-1} e^{-{\left( x/t \right)}^s} \right] \, \left[ \mathfrak{N}_{{1}/{s}}\left(t^s\right) \, s \, t^{s-1} \right] \, dt \,\,\, (\nu = t^s) \\ & = \displaystyle\int_0^\infty \frac{1}{t} \, \text{Weibull}\left( \frac{x}{t}; s\right) \, \left[ \mathfrak{N}_{{1}/{s}}\left(t^s\right) \, s \, t^{s-1} \right] \, dt \end{align} }[/math]

This is in the form of a product distribution. The term [math]\displaystyle{ \left[ s\, {\left( \frac{x}{t} \right)}^{s-1} e^{-{\left( x/t \right)}^s} \right] }[/math] in the RHS is associated with a Weibull distribution of shape [math]\displaystyle{ s }[/math]. Hence, this formula connects the stable count distribution to the probability density function of a gamma distribution and, with [math]\displaystyle{ s \rightarrow s+1 }[/math], to the probability mass function of a Poisson distribution. The shape parameter [math]\displaystyle{ s }[/math] can thus be regarded as the inverse of Lévy's stability parameter, [math]\displaystyle{ 1/\alpha }[/math].
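As a sanity check of the decomposition of [math]\displaystyle{ Q(s,x) }[/math], take [math]\displaystyle{ s=2 }[/math], so that [math]\displaystyle{ \alpha=1/s=1/2 }[/math] and both sides are explicit: [math]\displaystyle{ Q(2,x) = (1+x)e^{-x} }[/math]. The sketch below uses hypothetical helper names and an assumed integration cutoff; it is meant only to illustrate the identity for [math]\displaystyle{ x\gt 0 }[/math].

```python
import math


def stable_count_half(nu):
    """Closed-form standard stable count density at alpha = 1/2."""
    if nu <= 0.0:
        return 0.0
    return math.sqrt(nu) * math.exp(-nu / 4.0) / (4.0 * math.sqrt(math.pi))


def simpson(f, a, b, n=20000):
    """Composite Simpson rule on [a, b]; n must be even."""
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3.0


def upper_gamma_q2(x):
    """Regularized upper incomplete gamma function Q(2, x) in closed form."""
    return (1.0 + x) * math.exp(-x)


def q2_from_stable_count(x, upper=200.0):
    """Integral of exp(-x^s / nu) * N_{1/s}(nu) over nu, with s = 2."""
    s = 2.0

    def integrand(nu):
        if nu <= 0.0:
            return 0.0
        return math.exp(-(x ** s) / nu) * stable_count_half(nu)

    return simpson(integrand, 0.0, upper)


if __name__ == "__main__":
    for x in (0.5, 1.5, 4.0):
        print(x, upper_gamma_q2(x), q2_from_stable_count(x))
```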

Connection to Chi and Chi-squared distributions

The degrees of freedom [math]\displaystyle{ k }[/math] in the chi and chi-squared distributions can be shown to be related to [math]\displaystyle{ 2/\alpha }[/math]. Hence, the original idea of viewing [math]\displaystyle{ \lambda = 2/\alpha }[/math] as an integer index in the lambda decomposition is justified here.

For the chi-squared distribution, it is straightforward since the chi-squared distribution is a special case of the gamma distribution, in that [math]\displaystyle{ \chi^2_k \sim \text{Gamma} \left(\frac{k}{2}, \theta=2 \right) }[/math]. And from above, the shape parameter of a gamma distribution is [math]\displaystyle{ 1/\alpha }[/math].

For the chi distribution, we begin with its CDF [math]\displaystyle{ P \left( \frac{k}2, \frac{x^2}2 \right) }[/math], where [math]\displaystyle{ P(s,x) = 1 - Q(s,x) }[/math]. Differentiating [math]\displaystyle{ P \left( \frac{k}2, \frac{x^2}2 \right) }[/math] with respect to [math]\displaystyle{ x }[/math], we obtain its density function as

- [math]\displaystyle{ \begin{align} \chi_k(x) = \frac{x^{k-1} e^{-x^2/2}} {2^{\frac{k}2-1} \Gamma \left( \frac{k}2 \right)} & = \displaystyle\int_0^\infty \frac{1}{\nu} \left[ 2^{-\frac{k}2} \,k \, x^{k-1} e^{\left( -2^{-\frac{k}2} \, {x^k}/{ \nu} \right)} \right] \, \mathfrak{N}_{\frac{2}{k}}\left(\nu\right) \, d\nu \\ & = \displaystyle\int_0^\infty \frac{1}{t} \left[ k\, {\left( \frac{x}{t} \right)}^{k-1} e^{-{\left( x/t \right)}^k} \right] \, \left[ \mathfrak{N}_{\frac{2}{k}}\left( 2^{-\frac{k}2} t^k \right) \, 2^{-\frac{k}2} \, k \, t^{k-1} \right] \, dt, \,\,\, (\nu = 2^{-\frac{k}2} t^k) \\ & = \displaystyle\int_0^\infty \frac{1}{t} \, \text{Weibull}\left( \frac{x}{t}; k\right) \, \left[ \mathfrak{N}_{\frac{2}{k}}\left( 2^{-\frac{k}2} t^k \right) \, 2^{-\frac{k}2} \, k \, t^{k-1} \right] \, dt \end{align} }[/math]

This formula connects [math]\displaystyle{ 2/k }[/math] with [math]\displaystyle{ \alpha }[/math] through the [math]\displaystyle{ \mathfrak{N}_{\frac{2}{k}}\left( \cdot \right) }[/math] term.

Connection to generalized Gamma distributions

The generalized gamma distribution is a probability distribution with two shape parameters, and is the superset of the gamma distribution, the Weibull distribution, the exponential distribution, and the half-normal distribution. Its CDF is in the form of [math]\displaystyle{ P(s, x^c) = 1 - Q(s, x^c) }[/math]. (Note: We use [math]\displaystyle{ s }[/math] instead of [math]\displaystyle{ a }[/math] for consistency and to avoid confusion with [math]\displaystyle{ \alpha }[/math].) Differentiating [math]\displaystyle{ P(s,x^c) }[/math] with respect to [math]\displaystyle{ x }[/math], we arrive at the product-distribution formula:

- [math]\displaystyle{ \begin{align} \text{GenGamma}(x; s, c) & = \displaystyle\int_0^\infty \frac{1}{t} \, \text{Weibull}\left( \frac{x}{t}; sc\right) \, \left[ \mathfrak{N}_{\frac{1}{s}}\left(t^{sc}\right) \, sc \, t^{sc-1} \right] \, dt \,\, (s \geq 1) \end{align} }[/math]

where [math]\displaystyle{ \text{GenGamma}(x; s, c) }[/math] denotes the PDF of a generalized gamma distribution, whose CDF is parametrized as [math]\displaystyle{ P(s,x^c) }[/math]. This formula connects [math]\displaystyle{ 1/s }[/math] with [math]\displaystyle{ \alpha }[/math] through the [math]\displaystyle{ \mathfrak{N}_{\frac{1}{s}}\left( \cdot \right) }[/math] term. The [math]\displaystyle{ sc }[/math] term is an exponent representing the second degree of freedom in the shape-parameter space.

This formula is singular for the case of a Weibull distribution since [math]\displaystyle{ s }[/math] must be one for [math]\displaystyle{ \text{GenGamma}(x; 1, c) = \text{Weibull}(x; c) }[/math]; but for [math]\displaystyle{ \mathfrak{N}_{\frac{1}{s}}\left(\nu\right) }[/math] to exist, [math]\displaystyle{ s }[/math] must be greater than one. When [math]\displaystyle{ s\rightarrow 1 }[/math], [math]\displaystyle{ \mathfrak{N}_{\frac{1}{s}}\left(\nu\right) }[/math] is a delta function and this formula becomes trivial. The Weibull distribution has its own distinct decomposition, as follows.

Connection to Weibull distribution

For a Weibull distribution whose CDF is [math]\displaystyle{ F(x;k,\lambda) = 1 - e^{-(x/\lambda)^k} \,\, (x\gt 0) }[/math], its shape parameter [math]\displaystyle{ k }[/math] is equivalent to Lévy's stability parameter [math]\displaystyle{ \alpha }[/math].

A similar expression of product distribution can be derived, such that the kernel is either a one-sided Laplace distribution [math]\displaystyle{ F(x;1,\sigma) }[/math] or a Rayleigh distribution [math]\displaystyle{ F(x;2,\sqrt{2} \sigma) }[/math]. It begins with the complementary CDF, which comes from Lambda decomposition:

- [math]\displaystyle{ 1-F(x;k,1) = \begin{cases} \displaystyle\int_0^\infty \frac{1}{\nu} \, (1-F(x;1,\nu)) \left[ \Gamma \left( \frac{1}{k}+1 \right) \mathfrak{N}_k(\nu) \right] \, d\nu , & 1 \geq k \gt 0; \text{or } \\ \displaystyle\int_0^\infty \frac{1}{s} \, (1-F(x;2,\sqrt{2} s)) \left[ \sqrt{\frac{2}{\pi}} \, \Gamma \left( \frac{1}{k}+1 \right) V_k(s) \right] \, ds , & 2 \geq k \gt 0. \end{cases} }[/math]

By taking derivative on [math]\displaystyle{ x }[/math], we obtain the product distribution form of a Weibull distribution PDF [math]\displaystyle{ \text{Weibull}(x;k) }[/math] as

- [math]\displaystyle{ \text{Weibull}(x;k) = \begin{cases} \displaystyle\int_0^\infty \frac{1}{\nu} \, \text{Laplace}(\frac{x}{\nu}) \left[ \Gamma \left( \frac{1}{k}+1 \right) \frac{1}{\nu} \mathfrak{N}_k(\nu) \right] \, d\nu , & 1 \geq k \gt 0; \text{or } \\ \displaystyle\int_0^\infty \frac{1}{s} \, \text{Rayleigh}(\frac{x}{s}) \left[ \sqrt{\frac{2}{\pi}} \, \Gamma \left( \frac{1}{k}+1 \right) \frac{1}{s} V_k(s) \right] \, ds , & 2 \geq k \gt 0. \end{cases} }[/math]

where [math]\displaystyle{ \text{Laplace}(x) = e^{-x} }[/math] and [math]\displaystyle{ \text{Rayleigh}(x) = x e^{-x^2/2} }[/math]. It is clear that [math]\displaystyle{ k = \alpha }[/math] from the [math]\displaystyle{ \mathfrak{N}_k(\nu) }[/math] and [math]\displaystyle{ V_k(s) }[/math] terms.

Asymptotic properties

For the stable distribution family, it is essential to understand the asymptotic behavior. From [3], for small [math]\displaystyle{ \nu }[/math],

- [math]\displaystyle{ \begin{align} \mathfrak{N}_\alpha(\nu) & \rightarrow B(\alpha) \,\nu^{\alpha}, \text{ for } \nu \rightarrow 0 \text{ and } B(\alpha)\gt 0. \\ \end{align} }[/math]

This confirms [math]\displaystyle{ \mathfrak{N}_\alpha(0)=0 }[/math].

For large [math]\displaystyle{ \nu }[/math],

- [math]\displaystyle{ \begin{align} \mathfrak{N}_\alpha(\nu) & \rightarrow \nu^{\frac{\alpha}{2(1-\alpha)}} e^{-A(\alpha) \,\nu^{\frac{\alpha}{1-\alpha}}}, \text{ for } \nu \rightarrow \infty \text{ and } A(\alpha)\gt 0. \\ \end{align} }[/math]

This shows that the tail of [math]\displaystyle{ \mathfrak{N}_\alpha(\nu) }[/math] decays exponentially at infinity. The larger [math]\displaystyle{ \alpha }[/math] is, the stronger the decay.

This tail is in the form of a generalized gamma distribution, where in its [math]\displaystyle{ f(x; a, d, p) }[/math] parametrization, [math]\displaystyle{ p = \frac{\alpha}{1-\alpha} }[/math], [math]\displaystyle{ a = A(\alpha)^{-1/p} }[/math], and [math]\displaystyle{ d = 1 + \frac{p}{2} }[/math]. Hence, it is equivalent to [math]\displaystyle{ \text{GenGamma}(\frac{x}{a}; s = \frac{1}{\alpha} -\frac{1}{2}, c = p) }[/math], whose CDF is parametrized as [math]\displaystyle{ P\left( s,\left( \frac{x}{a} \right)^c \right) }[/math].

Moments

The n-th moment [math]\displaystyle{ m_n }[/math] of [math]\displaystyle{ \mathfrak{N}_\alpha(\nu) }[/math] is the [math]\displaystyle{ -(n+1) }[/math]-th moment of [math]\displaystyle{ L_\alpha(x) }[/math]. All positive moments are finite. This in a way solves the thorny issue of diverging moments in the stable distribution. (See Section 2.4 of [1])

- [math]\displaystyle{ \begin{align} m_n & = \int_0^\infty \nu^n \mathfrak{N}_\alpha(\nu) d\nu = \frac{1}{\Gamma(\frac{1}{\alpha}+1)} \int_0^\infty \frac{1}{t^{n+1}} L_\alpha(t) \, dt. \\ \end{align} }[/math]

The analytic solution of moments is obtained through the Wright function:

- [math]\displaystyle{ \begin{align} m_n & = \frac{1}{\Gamma(\frac{1}{\alpha}+1)} \int_0^\infty \nu^{n} W_{-\alpha,0}(-\nu^\alpha) \, d\nu \\ & = \frac{\Gamma(\frac{n+1}{\alpha})}{\Gamma(n+1)\Gamma(\frac{1}{\alpha})}, \, n \geq -1. \\ \end{align} }[/math]

where [math]\displaystyle{ \int_0^\infty r^\delta W_{-\nu,\mu}(-r)\,dr = \frac{\Gamma(\delta+1)}{\Gamma(\nu\delta+\nu+\mu)} , \, \delta\gt -1,0\lt \nu\lt 1,\mu\gt 0 }[/math] (see (1.4.28) of [5]).

Thus, the mean of [math]\displaystyle{ \mathfrak{N}_\alpha(\nu) }[/math] is

- [math]\displaystyle{ m_1=\frac{\Gamma(\frac{2}{\alpha})}{\Gamma(\frac{1}{\alpha})} }[/math]

The variance is

- [math]\displaystyle{ \sigma^2= \frac{\Gamma(\frac{3}{\alpha})}{2\Gamma(\frac{1}{\alpha})} - \left[ \frac{\Gamma(\frac{2}{\alpha})}{\Gamma(\frac{1}{\alpha})} \right]^2 }[/math]

And the lowest moment is [math]\displaystyle{ m_{-1} = \frac{1}{\Gamma(\frac{1}{\alpha} + 1)} }[/math] by applying [math]\displaystyle{ \Gamma(\frac{x}{y}) \to y\Gamma(x) }[/math] when [math]\displaystyle{ x \to 0 }[/math].

The n-th moment of the stable vol distribution [math]\displaystyle{ V_\alpha(s) }[/math] is

- [math]\displaystyle{ \begin{align} m_n(V_\alpha) & = 2^{-\frac{n}{2}} \sqrt{\pi} \, \frac{\Gamma(\frac{n+1}{\alpha})}{\Gamma(\frac{n+1}{2}) \Gamma(\frac{1}{\alpha})}, \, n \geq -1. \end{align} }[/math]
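The two moment formulas above translate directly into code. The sketch below is a minimal illustration using only `math.gamma`; the function names are hypothetical, and the implementation assumes [math]\displaystyle{ n \gt -1 }[/math] so that no gamma function is evaluated at a pole (the limiting case [math]\displaystyle{ n=-1 }[/math] is not handled).

```python
import math


def stable_count_moment(n, alpha):
    """n-th moment of the standard stable count distribution (n > -1)."""
    return math.gamma((n + 1) / alpha) / (
        math.gamma(n + 1) * math.gamma(1.0 / alpha)
    )


def stable_vol_moment(n, alpha):
    """n-th moment of the stable vol distribution V_alpha (n > -1)."""
    return (
        2.0 ** (-n / 2.0)
        * math.sqrt(math.pi)
        * math.gamma((n + 1) / alpha)
        / (math.gamma((n + 1) / 2.0) * math.gamma(1.0 / alpha))
    )


def stable_count_variance(alpha):
    """Variance as the second moment minus the squared mean."""
    return stable_count_moment(2, alpha) - stable_count_moment(1, alpha) ** 2


if __name__ == "__main__":
    # At alpha = 1/2 the standard distribution is Gamma(3/2, scale 4).
    print(stable_count_moment(1, 0.5), stable_count_variance(0.5))
    # At alpha = 1 the stable vol distribution is s * exp(-s^2 / 2).
    print(stable_vol_moment(1, 1.0))
```

At [math]\displaystyle{ \alpha=1/2 }[/math] the results can be compared with the mean and variance of a gamma distribution of shape 3/2 and scale 4, and at [math]\displaystyle{ \alpha=1 }[/math] the stable vol mean can be compared with that of the density [math]\displaystyle{ s\,e^{-s^2/2} }[/math].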

Moment generating function

The MGF can be expressed by a Fox-Wright function or Fox H-function:

- [math]\displaystyle{ \begin{align} M_\alpha(s) & = \sum_{n=0}^\infty \frac{m_n\,s^n}{n!} = \frac{1}{\Gamma(\frac{1}{\alpha})} \sum_{n=0}^\infty \frac{\Gamma(\frac{n+1}{\alpha})\,s^n}{\Gamma(n+1)^2} \\ & = \frac{1}{\Gamma(\frac{1}{\alpha})} {}_1\Psi_1\left[(\frac{1}{\alpha},\frac{1}{\alpha});(1,1); s\right] ,\,\,\text{or} \\ & = \frac{1}{\Gamma(\frac{1}{\alpha})} H^{1,1}_{1,2}\left[-s \bigl| \begin{matrix} (1-\frac{1}{\alpha}, \frac{1}{\alpha}) \\ (0,1);(0,1) \end{matrix} \right] \\ \end{align} }[/math]

As a verification, at [math]\displaystyle{ \alpha=\frac{1}{2} }[/math], [math]\displaystyle{ M_{\frac{1}{2}}(s) = (1-4s)^{-\frac{3}{2}} }[/math] (see below) can be Taylor-expanded to [math]\displaystyle{ {}_1\Psi_1\left[(2,2);(1,1); s\right] =\sum_{n=0}^\infty \frac{\Gamma(2n+2)\,s^n}{\Gamma(n+1)^2} }[/math] via [math]\displaystyle{ \Gamma(\frac{1}{2}-n) = \sqrt{\pi} \frac{(-4)^n n!}{(2n)!} }[/math].
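The verification at [math]\displaystyle{ \alpha=\frac{1}{2} }[/math] can also be done numerically by summing the moment series directly. The sketch below is illustrative: `mgf_series` is a hypothetical name, the truncation at 100 terms is an assumption, and the comparison is only made for [math]\displaystyle{ 0 \lt s \lt 1/4 }[/math], where the closed form [math]\displaystyle{ (1-4s)^{-3/2} }[/math] is finite. No claim is made here about convergence of the truncated sum for other values of [math]\displaystyle{ \alpha }[/math].

```python
import math


def mgf_series(s, alpha, terms=100):
    """Truncated series sum_n m_n s^n / n! for the standard stable count.

    Uses lgamma to keep the gamma ratios in log space. The number of
    terms is an illustrative choice, adequate for alpha = 1/2 and small s.
    """
    log_norm = math.lgamma(1.0 / alpha)
    total = 0.0
    for n in range(terms):
        log_coeff = (
            math.lgamma((n + 1) / alpha) - 2.0 * math.lgamma(n + 1) - log_norm
        )
        total += math.exp(log_coeff) * s ** n
    return total


def mgf_half_closed_form(s):
    """Closed-form MGF at alpha = 1/2, valid for s < 1/4."""
    return (1.0 - 4.0 * s) ** -1.5


if __name__ == "__main__":
    for s in (0.01, 0.05, 0.1):
        print(s, mgf_series(s, 0.5), mgf_half_closed_form(s))
```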

Known analytical case – quartic stable count

When [math]\displaystyle{ \alpha=\frac{1}{2} }[/math], [math]\displaystyle{ L_{1/2}(x) }[/math] is the Lévy distribution which is an inverse gamma distribution. Thus [math]\displaystyle{ \mathfrak{N}_{1/2}(\nu;\nu_0,\theta) }[/math] is a shifted gamma distribution of shape 3/2 and scale [math]\displaystyle{ 4\theta }[/math],

- [math]\displaystyle{ \mathfrak{N}_{\frac{1}{2}}(\nu;\nu_0,\theta) = \frac{1}{4\sqrt{\pi}\theta^{3/2}} (\nu-\nu_0)^{1/2} e^{-(\nu-\nu_0)/4\theta}, }[/math]

where [math]\displaystyle{ \nu\gt \nu_0 }[/math], [math]\displaystyle{ \theta\gt 0 }[/math].

Its mean is [math]\displaystyle{ \nu_0+6\theta }[/math] and its standard deviation is [math]\displaystyle{ \sqrt{24}\theta }[/math]. This is called the "quartic stable count distribution". The word "quartic" comes from Lihn's former work on the lambda distribution[6] where [math]\displaystyle{ \lambda=2/\alpha=4 }[/math]. At this setting, many facets of the stable count distribution have elegant analytical solutions.

The p-th moment about [math]\displaystyle{ \nu_0 }[/math] is [math]\displaystyle{ \frac{\Gamma(p+3/2)}{\Gamma(3/2)} 4^p\theta^p }[/math], which gives [math]\displaystyle{ 6\theta }[/math] at [math]\displaystyle{ p=1 }[/math], consistent with the mean above. The CDF is [math]\displaystyle{ \frac{2}{\sqrt{\pi}} \gamma\left(\frac{3}{2}, \frac{\nu-\nu_0}{4\theta} \right) }[/math] where [math]\displaystyle{ \gamma(s,x) }[/math] is the lower incomplete gamma function. And the MGF is [math]\displaystyle{ M_{\frac{1}{2}}(s) = e^{s\nu_0}(1-4s\theta)^{-\frac{3}{2}} }[/math]. (See Section 3 of [1])
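The reduction to a shifted gamma distribution can be reproduced in a few lines. The sketch below builds [math]\displaystyle{ \mathfrak{N}_{1/2}(\nu;\nu_0,\theta) }[/math] from the location-scale definition, assuming that [math]\displaystyle{ L_{1/2}(x) }[/math] is the Lévy density with scale 1/2 (the value of [math]\displaystyle{ \cos(\pi\alpha/2)^{1/\alpha} }[/math] at [math]\displaystyle{ \alpha=1/2 }[/math]), and compares it with the closed form. All function names, the integration width of [math]\displaystyle{ 125\theta }[/math] and the grid size are illustrative assumptions.

```python
import math

SQRT_PI = math.sqrt(math.pi)


def levy_half(x):
    """One-sided stable density L_{1/2}(x): a Levy density with scale 1/2."""
    if x <= 0.0:
        return 0.0
    return x ** -1.5 * math.exp(-1.0 / (4.0 * x)) / (2.0 * SQRT_PI)


def stable_count_from_definition(nu, nu0, theta):
    """Location-scale stable count density at alpha = 1/2, from L_{1/2}."""
    y = nu - nu0
    if y <= 0.0:
        return 0.0
    return levy_half(theta / y) / (math.gamma(3.0) * y)


def quartic_closed_form(nu, nu0, theta):
    """Shifted gamma density of shape 3/2 and scale 4 * theta."""
    y = nu - nu0
    if y <= 0.0:
        return 0.0
    return math.sqrt(y) * math.exp(-y / (4.0 * theta)) / (
        4.0 * SQRT_PI * theta ** 1.5
    )


def simpson(f, a, b, n=20000):
    """Composite Simpson rule on [a, b]; n must be even."""
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3.0


def mean_and_std(nu0, theta):
    """Numerical mean and standard deviation of the quartic stable count."""
    upper = nu0 + 125.0 * theta  # assumed cutoff; the tail beyond is negligible

    def pdf(nu):
        return quartic_closed_form(nu, nu0, theta)

    mean = simpson(lambda nu: nu * pdf(nu), nu0, upper)
    var = simpson(lambda nu: (nu - mean) ** 2 * pdf(nu), nu0, upper)
    return mean, math.sqrt(var)


if __name__ == "__main__":
    nu0, theta = 10.4, 1.6  # example values only
    print(mean_and_std(nu0, theta))
    print(nu0 + 6.0 * theta, math.sqrt(24.0) * theta)
```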

Special case when α → 1

As [math]\displaystyle{ \alpha }[/math] becomes larger, the peak of the distribution becomes sharper. A special case of [math]\displaystyle{ \mathfrak{N}_\alpha(\nu) }[/math] is when [math]\displaystyle{ \alpha\rightarrow1 }[/math]. The distribution behaves like a Dirac delta function,

- [math]\displaystyle{ \mathfrak{N}_{\alpha\to 1}(\nu) \to \delta(\nu-1), }[/math]

where [math]\displaystyle{ \delta(x) = \begin{cases} \infty, & \text{if }x=0 \\ 0, & \text{if }x\neq 0 \end{cases} }[/math], and [math]\displaystyle{ \int_{0_-}^{0_+} \delta(x) dx = 1 }[/math].

Likewise, the stable vol distribution at [math]\displaystyle{ \alpha \to 2 }[/math] also becomes a delta function,

- [math]\displaystyle{ V_{\alpha\to 2}(s) \to \delta(s- \frac{1}{\sqrt{2}}). }[/math]

Series representation

Based on the series representation of the one-sided stable distribution, we have:

- [math]\displaystyle{ \begin{align} \mathfrak{N}_\alpha(x) & = \frac{1}{\pi\Gamma(\frac{1}{\alpha}+1)} \sum_{n=1}^\infty\frac{-\sin(n(\alpha+1)\pi)}{n!}{x}^{\alpha n}\Gamma(\alpha n+1) \\ & = \frac{1}{\pi\Gamma(\frac{1}{\alpha}+1)} \sum_{n=1}^\infty\frac{(-1)^{n+1} \sin(n\alpha\pi)}{n!}{x}^{\alpha n}\Gamma(\alpha n+1) \\ \end{align} }[/math].

This series representation has two interpretations:

- First, a similar form of this series was first given in Pollard (1948),[7] and in "Relation to Mittag-Leffler function", it is stated that [math]\displaystyle{ \mathfrak{N}_\alpha(x) = \frac{\alpha^2 x^\alpha}{\Gamma \left(\frac{1}{\alpha}\right)} H_\alpha(x^\alpha), }[/math] where [math]\displaystyle{ H_\alpha(k) }[/math] is the Laplace transform of the Mittag-Leffler function [math]\displaystyle{ E_\alpha(-x) }[/math] .

- Secondly, this series is a special case of the Wright function [math]\displaystyle{ W_{\lambda,\mu}(z) }[/math]: (See Section 1.4 of [5])

- [math]\displaystyle{ \begin{align} \mathfrak{N}_\alpha(x) & = \frac{1}{\pi\Gamma(\frac{1}{\alpha}+1)} \sum_{n=1}^\infty\frac{(-1)^{n} {x}^{\alpha n}}{n!}\, \sin((\alpha n+1)\pi)\Gamma(\alpha n+1) \\ & = \frac{1}{\Gamma \left(\frac{1}{\alpha}+1\right)} W_{-\alpha,0}(-x^\alpha), \, \text{where} \,\, W_{\lambda,\mu}(z) = \sum_{n=0}^\infty \frac{z^n}{n!\,\Gamma(\lambda n+\mu)}, \lambda\gt -1. \\ \end{align} }[/math]

The proof is obtained by the reflection formula of the Gamma function: [math]\displaystyle{ \sin((\alpha n+1)\pi)\Gamma(\alpha n+1) = \pi/\Gamma(-\alpha n) }[/math], which admits the mapping: [math]\displaystyle{ \lambda=-\alpha,\mu=0,z=-x^\alpha }[/math] in [math]\displaystyle{ W_{\lambda,\mu}(z) }[/math]. The Wright representation leads to analytical solutions for many statistical properties of the stable count distribution and establishes another connection to fractional calculus.
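The sine form of the series is straightforward to evaluate for moderate [math]\displaystyle{ x }[/math]. The following sketch sums it term by term and compares the result with the closed form at [math]\displaystyle{ \alpha=1/2 }[/math]. The function names and the truncation at 120 terms are assumptions made for illustration; because the series alternates, cancellation can be expected to degrade accuracy for large [math]\displaystyle{ x }[/math], so this is not a general-purpose evaluator.

```python
import math


def stable_count_series(x, alpha, terms=120):
    """Truncated sine series for the standard stable count density, x > 0."""
    total = 0.0
    for n in range(1, terms + 1):
        # log of x^(alpha n) * Gamma(alpha n + 1) / n!
        log_mag = (
            alpha * n * math.log(x)
            + math.lgamma(alpha * n + 1.0)
            - math.lgamma(n + 1.0)
        )
        sign = 1.0 if n % 2 else -1.0  # (-1)^(n+1)
        total += sign * math.sin(n * alpha * math.pi) * math.exp(log_mag)
    return total / (math.pi * math.gamma(1.0 / alpha + 1.0))


def stable_count_half(x):
    """Closed-form standard stable count density at alpha = 1/2."""
    return math.sqrt(x) * math.exp(-x / 4.0) / (4.0 * math.sqrt(math.pi))


if __name__ == "__main__":
    for x in (0.5, 1.0, 3.0):
        print(x, stable_count_series(x, 0.5), stable_count_half(x))
```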

Applications

The stable count distribution can represent the daily distribution of VIX quite well. It is hypothesized that VIX is distributed like [math]\displaystyle{ \mathfrak{N}_{\frac{1}{2}}(\nu;\nu_0,\theta) }[/math] with [math]\displaystyle{ \nu_0=10.4 }[/math] and [math]\displaystyle{ \theta=1.6 }[/math] (See Section 7 of [1]). Thus the stable count distribution is the first-order marginal distribution of a volatility process. In this context, [math]\displaystyle{ \nu_0 }[/math] is called the "floor volatility". In practice, VIX rarely drops below 10. This phenomenon justifies the concept of "floor volatility". A sample of the fit is shown below:

One form of mean-reverting SDE for [math]\displaystyle{ \mathfrak{N}_{\frac{1}{2}}(\nu;\nu_0,\theta) }[/math] is based on a modified Cox–Ingersoll–Ross (CIR) model. Assuming [math]\displaystyle{ S_t }[/math] is the volatility process, we have

- [math]\displaystyle{ dS_t = \frac{\sigma^2}{8\theta} (6\theta+\nu_0-S_t) \, dt + \sigma \sqrt{S_t-\nu_0} \, dW, }[/math]

where [math]\displaystyle{ \sigma }[/math] is the so-called "vol of vol". The "vol of vol" for VIX is called VVIX, which has a typical value of about 85.[8]

This SDE is analytically tractable and satisfies the Feller condition, thus [math]\displaystyle{ S_t }[/math] would never go below [math]\displaystyle{ \nu_0 }[/math]. But there is a subtle issue between theory and practice. There has been about 0.6% probability that VIX did go below [math]\displaystyle{ \nu_0 }[/math]. This is called "spillover". To address it, one can replace the square root term with [math]\displaystyle{ \sqrt{\max(S_t-\nu_0,\delta\nu_0)} }[/math], where [math]\displaystyle{ \delta\nu_0\approx 0.01 \, \nu_0 }[/math] provides a small leakage channel for [math]\displaystyle{ S_t }[/math] to drift slightly below [math]\displaystyle{ \nu_0 }[/math].

An extremely low VIX reading indicates a very complacent market. Thus the spillover condition, [math]\displaystyle{ S_t\lt \nu_0 }[/math], carries a certain significance: when it occurs, it usually indicates the calm before the storm in the business cycle.
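The modified CIR dynamics above can be explored with a simple Euler-Maruyama discretization. The sketch below is not the method of [1]; it is a generic scheme in which the square-root argument is truncated at zero, and the function name, the step size, the seed and the value [math]\displaystyle{ \sigma=2 }[/math] are illustrative assumptions rather than calibrated quantities. Only [math]\displaystyle{ \nu_0=10.4 }[/math] and [math]\displaystyle{ \theta=1.6 }[/math] are taken from the text.

```python
import math
import random


def simulate_modified_cir(nu0, theta, sigma, dt, n_steps, seed=0):
    """Euler-Maruyama path of dS = kappa (m - S) dt + sigma sqrt(S - nu0) dW.

    Here kappa = sigma^2 / (8 theta) and m = nu0 + 6 theta, as in the SDE
    above. The diffusion term uses max(S - nu0, 0), a common truncation
    that is an assumption of this sketch.
    """
    rng = random.Random(seed)
    mean_level = nu0 + 6.0 * theta
    kappa = sigma ** 2 / (8.0 * theta)
    sqrt_dt = math.sqrt(dt)
    s = mean_level  # start at the long-run mean
    path = []
    for _ in range(n_steps):
        diffusion = sigma * math.sqrt(max(s - nu0, 0.0))
        s += kappa * (mean_level - s) * dt + diffusion * sqrt_dt * rng.gauss(0.0, 1.0)
        path.append(s)
    return path


if __name__ == "__main__":
    path = simulate_modified_cir(10.4, 1.6, sigma=2.0, dt=0.01, n_steps=200000)
    sample_mean = sum(path) / len(path)
    below_floor = sum(1 for s in path if s < 10.4) / len(path)
    print("sample mean:", sample_mean)
    print("fraction of steps below the floor:", below_floor)
```

With a long enough path the sample mean should settle near [math]\displaystyle{ \nu_0+6\theta }[/math]. Any time spent below the floor in this sketch is a discretization effect and should not be confused with the empirical spillover described above.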

Generation of Random Variables

As the modified CIR model above shows, it takes another input parameter [math]\displaystyle{ \sigma }[/math] to simulate sequences of stable count random variables. The mean-reverting stochastic process takes the form of

- [math]\displaystyle{ dS_t = \sigma^2 \mu_{\alpha}\left( \frac{S_t}{\theta} \right) \, dt + \sigma \sqrt{S_t} \, dW, }[/math]

which should produce [math]\displaystyle{ \{S_t\} }[/math] that distributes like [math]\displaystyle{ \mathfrak{N}_{\alpha}(\nu;\theta) }[/math] as [math]\displaystyle{ t \rightarrow \infty }[/math]. And [math]\displaystyle{ \sigma }[/math] is a user-specified preference for how fast [math]\displaystyle{ S_t }[/math] should change.

By solving the Fokker-Planck equation, the solution for [math]\displaystyle{ \mu_{\alpha}(x) }[/math] in terms of [math]\displaystyle{ \mathfrak{N}_{\alpha}(x) }[/math] is

- [math]\displaystyle{ \begin{array}{lcl} \mu_\alpha(x) & = & \displaystyle \frac{1}{2} \frac{\left( x {d \over dx} +1 \right) \mathfrak{N}_{\alpha}(x)}{\mathfrak{N}_{\alpha}(x)} \\ & = & \displaystyle \frac{1}{2} \left[ x {d \over dx} \left( \log \mathfrak{N}_{\alpha}(x) \right) +1 \right] \end{array} }[/math]

It can also be written as a ratio of two Wright functions,

- [math]\displaystyle{ \begin{array}{lcl} \mu_\alpha(x) & = & \displaystyle -\frac{1}{2} \frac{W_{-\alpha,-1}(-x^\alpha)}{\Gamma(\frac{1}{\alpha}+1) \, \mathfrak{N}_{\alpha}(x)} \\ & = & \displaystyle -\frac{1}{2} \frac{W_{-\alpha,-1}(-x^\alpha)}{W_{-\alpha,0}(-x^\alpha)} \end{array} }[/math]

When [math]\displaystyle{ \alpha = 1/2 }[/math], this process is reduced to the modified CIR model where [math]\displaystyle{ \mu_{1/2}(x) = \frac{1}{8} (6-x) }[/math]. This is the only special case where [math]\displaystyle{ \mu_\alpha(x) }[/math] is a straight line.
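The linear drift at [math]\displaystyle{ \alpha = 1/2 }[/math] follows from the log-derivative form of [math]\displaystyle{ \mu_\alpha(x) }[/math] and can be confirmed with a finite difference. In the sketch below, the function names and the step size `h` are illustrative assumptions, and the closed-form density at [math]\displaystyle{ \alpha=1/2 }[/math] is used for the log density.

```python
import math


def log_stable_count_half(x):
    """Log of the standard stable count density at alpha = 1/2, x > 0."""
    return 0.5 * math.log(x) - x / 4.0 - math.log(4.0 * math.sqrt(math.pi))


def drift_from_log_density(log_pdf, x, h=1e-5):
    """mu(x) = (1/2) * (x * d/dx log pdf(x) + 1), via a central difference."""
    dlog = (log_pdf(x + h) - log_pdf(x - h)) / (2.0 * h)
    return 0.5 * (x * dlog + 1.0)


def drift_half_closed_form(x):
    """Straight-line drift at alpha = 1/2."""
    return (6.0 - x) / 8.0


if __name__ == "__main__":
    for x in (0.5, 2.0, 6.0, 10.0):
        print(x, drift_from_log_density(log_stable_count_half, x),
              drift_half_closed_form(x))
```

The drift changes sign at [math]\displaystyle{ x=6 }[/math], which is the mean of the standard distribution at [math]\displaystyle{ \alpha=1/2 }[/math].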

Likewise, if the asymptotic distribution is [math]\displaystyle{ V_{\alpha}(s) }[/math] as [math]\displaystyle{ t \rightarrow \infty }[/math], the [math]\displaystyle{ \mu_\alpha(x) }[/math] solution, denoted as [math]\displaystyle{ \mu(x; V_{\alpha}) }[/math] below, is

- [math]\displaystyle{ \begin{array}{lcl} \mu(x; V_{\alpha}) & = & \displaystyle - \frac{ W_{-\frac{\alpha}{2},-1}(-{(\sqrt{2} x)}^\alpha) }{ W_{-\frac{\alpha}{2},0}(-{(\sqrt{2} x)}^\alpha)} -\frac{1}{2} \end{array} }[/math]

When [math]\displaystyle{ \alpha = 1 }[/math], it is reduced to a quadratic polynomial: [math]\displaystyle{ \mu(x;V_1) = 1 - \frac{x^2}{2} }[/math].

Stable Extension of the CIR Model

By relaxing the rigid relation between the [math]\displaystyle{ \sigma^2 }[/math] term and the [math]\displaystyle{ \sigma }[/math] term above, the stable extension of the CIR model can be constructed as

- [math]\displaystyle{ dr_t = a \, \left[ \frac{8b}{6} \, \mu_{\alpha}\left( \frac{6}{b} r_t \right) \right] \, dt + \sigma \sqrt{r_t} \, dW, }[/math]

which is reduced to the original CIR model at [math]\displaystyle{ \alpha = 1/2 }[/math]: [math]\displaystyle{ dr_t = a \left( b - r_t \right) dt + \sigma \sqrt{r_t} \, dW }[/math]. Hence, the parameter [math]\displaystyle{ a }[/math] controls the mean-reverting speed, the location parameter [math]\displaystyle{ b }[/math] sets where the mean is, [math]\displaystyle{ \sigma }[/math] is the volatility parameter, and [math]\displaystyle{ \alpha }[/math] is the shape parameter for the stable law.

By solving the Fokker-Planck equation, the solution for the PDF [math]\displaystyle{ p(x) }[/math] at [math]\displaystyle{ r_\infty }[/math] is

- [math]\displaystyle{ \begin{array}{lcl} p(x) & \propto & \displaystyle \exp \left[ \int^{x} \frac{dx}{x} \left( 2 D \, \mu_{\alpha}\left( \frac{6}{b} x \right) - 1 \right) \right] , \text{ where } D = \frac{4ab}{3 \sigma^2} \\ & = & \displaystyle \mathfrak{N}_{\alpha}\left( \frac{6}{b} x \right) ^D \, x^{D-1} \end{array} }[/math]

To make sense of this solution, note that for large [math]\displaystyle{ x }[/math] the tail of [math]\displaystyle{ p(x) }[/math] still has the form of a generalized gamma distribution. In its [math]\displaystyle{ f(x; a', d, p) }[/math] parametrization, [math]\displaystyle{ p = \frac{\alpha}{1-\alpha} }[/math], [math]\displaystyle{ a' = \frac{b}{6} (D\,A(\alpha))^{-1/p} }[/math], and [math]\displaystyle{ d = D \left( 1 + \frac{p}{2} \right) }[/math]. At [math]\displaystyle{ \alpha = 1/2 }[/math] this reduces to the stationary distribution of the original CIR model, [math]\displaystyle{ p(x) \propto x^{d-1}e^{-x/a'} }[/math], with [math]\displaystyle{ d = \frac{2ab}{\sigma^2} }[/math] and [math]\displaystyle{ A(\alpha) = \frac{1}{4} }[/math]; hence [math]\displaystyle{ \frac{1}{a'} = \frac{6}{b} \left(\frac{D}{4}\right) = \frac{2a}{\sigma^2} }[/math].
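The [math]\displaystyle{ \alpha = 1/2 }[/math] reduction can be illustrated with the closed form [math]\displaystyle{ \mathfrak{N}_{\frac{1}{2}}(\nu) = \frac{1}{4\sqrt{\pi}} \nu^{1/2} e^{-\nu/4} }[/math]. The sketch below evaluates the unnormalized [math]\displaystyle{ p(x) }[/math] and compares its shape with [math]\displaystyle{ x^{d-1}e^{-x/a'} }[/math]. The parameter values and function names are arbitrary choices for illustration.

```python
import math

def stable_count_half(nu):
    """N_{1/2}(nu) = nu^{1/2} exp(-nu/4) / (4 sqrt(pi))."""
    return math.sqrt(nu) * math.exp(-nu / 4.0) / (4.0 * math.sqrt(math.pi))

def p_unnormalized(x, a, b, sigma):
    """N_{1/2}(6x/b)^D * x^(D-1) with D = 4ab / (3 sigma^2)."""
    D = 4.0 * a * b / (3.0 * sigma**2)
    return stable_count_half(6.0 * x / b) ** D * x ** (D - 1.0)

def gamma_shape(x, a, b, sigma):
    """x^(d-1) exp(-x/a') with d = 2ab/sigma^2 and 1/a' = 2a/sigma^2."""
    d = 2.0 * a * b / sigma**2
    return x ** (d - 1.0) * math.exp(-2.0 * a / sigma**2 * x)

# Illustrative parameters; the ratio should not depend on x.
a, b, sigma = 0.5, 0.04, 0.1
for x in (0.01, 0.04, 0.10):
    print(x, p_unnormalized(x, a, b, sigma) / gamma_shape(x, a, b, sigma))
```

A constant ratio across [math]\displaystyle{ x }[/math] indicates that the two expressions differ only by the normalization constant.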

Fractional calculus

Relation to Mittag-Leffler function

From Section 4 of [9], the inverse Laplace transform [math]\displaystyle{ H_\alpha(k) }[/math] of the Mittag-Leffler function [math]\displaystyle{ E_\alpha(-x) }[/math] is ([math]\displaystyle{ k\gt 0 }[/math])

- [math]\displaystyle{ H_\alpha(k)= \mathcal{L}^{-1}\{E_\alpha(-x)\}(k) = \frac{2}{\pi} \int_0^\infty E_{2\alpha}(-t^2) \cos(kt) \,dt. }[/math]

On the other hand, the following relation was given by Pollard (1948),[7]

- [math]\displaystyle{ H_\alpha(k) = \frac{1}{\alpha} \frac{1}{k^{1+1/\alpha}} L_\alpha \left( \frac{1}{k^{1/\alpha}} \right). }[/math]
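As an illustration, the two expressions for [math]\displaystyle{ H_\alpha(k) }[/math] can be compared at [math]\displaystyle{ \alpha = 1/2 }[/math], where [math]\displaystyle{ E_1(-t^2) = e^{-t^2} }[/math]. The sketch assumes that [math]\displaystyle{ L_\alpha }[/math] is normalized so that its Laplace transform is [math]\displaystyle{ e^{-s^\alpha} }[/math], which gives [math]\displaystyle{ L_{\frac{1}{2}}(x) = \frac{1}{2\sqrt{\pi}} x^{-3/2} e^{-1/(4x)} }[/math]. The Simpson quadrature and the truncation of the integral at `upper` are implementation choices made here, not part of the cited results.

```python
import math

def simpson(f, a, b, n=4000):
    """Composite Simpson rule on [a, b] with n (even) subintervals."""
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3.0

def H_half_integral(k, upper=12.0):
    """(2/pi) * int_0^upper E_1(-t^2) cos(k t) dt with E_1(-t^2) = exp(-t^2)."""
    integrand = lambda t: math.exp(-t * t) * math.cos(k * t)
    return 2.0 / math.pi * simpson(integrand, 0.0, upper)

def L_half(x):
    """One-sided stable density at alpha = 1/2 (assumed normalization)."""
    return x ** -1.5 * math.exp(-1.0 / (4.0 * x)) / (2.0 * math.sqrt(math.pi))

def H_pollard(k, alpha=0.5, L=L_half):
    """Pollard's relation: (1/alpha) * k^-(1+1/alpha) * L_alpha(k^(-1/alpha))."""
    return k ** -(1.0 + 1.0 / alpha) * L(k ** (-1.0 / alpha)) / alpha

def H_half_closed(k):
    """Closed form exp(-k^2/4) / sqrt(pi) at alpha = 1/2."""
    return math.exp(-k * k / 4.0) / math.sqrt(math.pi)

for k in (0.5, 1.0, 2.0):
    print(k, H_half_integral(k), H_pollard(k), H_half_closed(k))
```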

Thus, setting [math]\displaystyle{ k=\nu^\alpha }[/math], we obtain the relation between the stable count distribution and the Mittag-Leffler function:

- [math]\displaystyle{ \mathfrak{N}_\alpha(\nu) = \frac{\alpha^2 \nu^\alpha}{\Gamma \left(\frac{1}{\alpha}\right)} H_\alpha(\nu^\alpha). }[/math]

This relation can be verified quickly at [math]\displaystyle{ \alpha=\frac{1}{2} }[/math] where [math]\displaystyle{ H_{\frac{1}{2}}(k)=\frac{1}{\sqrt{\pi}} \,e^{-k^2/4} }[/math] and [math]\displaystyle{ k^2=\nu }[/math]. This leads to the well-known quartic stable count result:

- [math]\displaystyle{ \mathfrak{N}_{\frac{1}{2}}(\nu) = \frac{\nu^{1/2}}{4\,\Gamma (2)} \times \frac{1}{\sqrt{\pi}} \,e^{-\nu/4} = \frac{1}{4\,\sqrt{\pi}} \nu^{1/2}\,e^{-\nu/4}. }[/math]
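The same check can be written as a short script. This sketch compares the definition [math]\displaystyle{ \mathfrak{N}_\alpha(\nu)=\frac{1}{\Gamma(\frac{1}{\alpha}+1)} \frac{1}{\nu} L_\alpha\left(\frac{1}{\nu}\right) }[/math] with the Mittag-Leffler form at [math]\displaystyle{ \alpha = 1/2 }[/math] and confirms that the density integrates to one. It assumes the closed form [math]\displaystyle{ L_{\frac{1}{2}}(x) = \frac{1}{2\sqrt{\pi}} x^{-3/2} e^{-1/(4x)} }[/math]; the function names and the substitution [math]\displaystyle{ \nu = u^2 }[/math] in the quadrature are illustrative choices.

```python
import math

def L_half(x):
    """One-sided stable density at alpha = 1/2 (assumed normalization)."""
    return x ** -1.5 * math.exp(-1.0 / (4.0 * x)) / (2.0 * math.sqrt(math.pi))

def H_half(k):
    """H_{1/2}(k) = exp(-k^2/4) / sqrt(pi)."""
    return math.exp(-k * k / 4.0) / math.sqrt(math.pi)

def stable_count_def(nu, alpha=0.5, L=L_half):
    """Definition: L_alpha(1/nu) / (Gamma(1/alpha + 1) * nu)."""
    return L(1.0 / nu) / (math.gamma(1.0 / alpha + 1.0) * nu)

def stable_count_via_H(nu, alpha=0.5, H=H_half):
    """Mittag-Leffler form: alpha^2 nu^alpha / Gamma(1/alpha) * H_alpha(nu^alpha)."""
    return alpha**2 * nu**alpha / math.gamma(1.0 / alpha) * H(nu**alpha)

def stable_count_half(nu):
    """Closed form nu^{1/2} exp(-nu/4) / (4 sqrt(pi))."""
    return math.sqrt(nu) * math.exp(-nu / 4.0) / (4.0 * math.sqrt(math.pi))

def total_mass(n=4000, upper=40.0):
    """Simpson integral of N_{1/2} over nu = u^2, u in [0, upper]."""
    h = upper / n
    f = lambda u: stable_count_half(u * u) * 2.0 * u
    total = f(0.0) + f(upper)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(i * h)
    return total * h / 3.0

for nu in (0.5, 2.0, 9.0):
    print(nu, stable_count_def(nu), stable_count_via_H(nu), stable_count_half(nu))
print(total_mass())
```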

Relation to time-fractional Fokker-Planck equation

The ordinary Fokker-Planck equation (FPE) is [math]\displaystyle{ \frac{\partial P_1(x,t)}{\partial t} = K_1\, \tilde{L}_{FP} P_1(x,t) }[/math], where [math]\displaystyle{ \tilde{L}_{FP} = \frac{\partial}{\partial x} \frac{F(x)}{T} + \frac{\partial^2}{\partial x^2} }[/math] is the Fokker-Planck space operator, [math]\displaystyle{ K_1 }[/math] is the diffusion coefficient, [math]\displaystyle{ T }[/math] is the temperature, and [math]\displaystyle{ F(x) }[/math] is the external field. The time-fractional FPE introduces the additional fractional derivative [math]\displaystyle{ \,_0D_t^{1-\alpha} }[/math] such that [math]\displaystyle{ \frac{\partial P_\alpha(x,t)}{\partial t} = K_\alpha \,_0D_t^{1-\alpha} \tilde{L}_{FP} P_\alpha(x,t) }[/math], where [math]\displaystyle{ K_\alpha }[/math] is the fractional diffusion coefficient.

Letting [math]\displaystyle{ k=s/t^\alpha }[/math] in [math]\displaystyle{ H_\alpha(k) }[/math], so that [math]\displaystyle{ H_\alpha(k)\,dk = n(s,t)\,ds }[/math], we obtain the kernel for the time-fractional FPE (Eq. (16) of [10])

- [math]\displaystyle{ n(s,t) = \frac{1}{\alpha} \frac{t}{s^{1+1/\alpha}} L_\alpha \left( \frac{t}{s^{1/\alpha}} \right) }[/math]

from which the fractional density [math]\displaystyle{ P_\alpha(x,t) }[/math] can be calculated from an ordinary solution [math]\displaystyle{ P_1(x,t) }[/math] via

- [math]\displaystyle{ P_\alpha(x,t) = \int_0^\infty n\left( \frac{s}{K},t\right) \,P_1(x,s) \,ds, \text{ where } K=\frac{K_\alpha}{K_1}. }[/math]

Since [math]\displaystyle{ n(\frac{s}{K},t)\,ds = \Gamma \left(\frac{1}{\alpha}+1\right) \frac{1}{\nu}\, \mathfrak{N}_\alpha(\nu; \theta=K^{1/\alpha}) \,d\nu }[/math] via change of variable [math]\displaystyle{ \nu t = s^{1/\alpha} }[/math], the above integral becomes the product distribution with [math]\displaystyle{ \mathfrak{N}_\alpha(\nu) }[/math], similar to the "lambda decomposition" concept, and scaling of time [math]\displaystyle{ t \Rightarrow (\nu t)^\alpha }[/math]:

- [math]\displaystyle{ P_\alpha(x,t) = \Gamma \left(\frac{1}{\alpha}+1\right) \int_0^\infty \frac{1}{\nu}\, \mathfrak{N}_\alpha(\nu; \theta=K^{1/\alpha}) \,P_1(x,(\nu t)^\alpha) \,d\nu. }[/math]

Here [math]\displaystyle{ \mathfrak{N}_\alpha(\nu; \theta=K^{1/\alpha}) }[/math] is interpreted as the distribution of impurity, expressed in the unit of [math]\displaystyle{ K^{1/\alpha} }[/math], that causes the anomalous diffusion.
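A minimal numerical sketch of the kernel at [math]\displaystyle{ \alpha = 1/2 }[/math] follows. It assumes [math]\displaystyle{ K = 1 }[/math], no external field ([math]\displaystyle{ F(x) = 0 }[/math]), and the closed form [math]\displaystyle{ L_{\frac{1}{2}}(x) = \frac{1}{2\sqrt{\pi}} x^{-3/2} e^{-1/(4x)} }[/math], under which the kernel becomes [math]\displaystyle{ n(s,t) = \frac{1}{\sqrt{\pi t}} e^{-s^2/(4t)} }[/math]. For free diffusion [math]\displaystyle{ P_1(x,s) }[/math] is Gaussian with variance [math]\displaystyle{ 2 K_1 s }[/math], so the mean squared displacement of [math]\displaystyle{ P_\alpha }[/math] is the kernel average of [math]\displaystyle{ 2 K_1 s }[/math]. All function names are hypothetical.

```python
import math

def L_half(x):
    """One-sided stable density at alpha = 1/2 (assumed normalization)."""
    return x ** -1.5 * math.exp(-1.0 / (4.0 * x)) / (2.0 * math.sqrt(math.pi))

def kernel(s, t, alpha=0.5, L=L_half):
    """n(s, t) = (1/alpha) * t / s^(1+1/alpha) * L_alpha(t / s^(1/alpha))."""
    return t / s ** (1.0 + 1.0 / alpha) * L(t / s ** (1.0 / alpha)) / alpha

def kernel_half(s, t):
    """Closed form of n(s, t) at alpha = 1/2."""
    return math.exp(-s * s / (4.0 * t)) / math.sqrt(math.pi * t)

def simpson(f, a, b, n=4000):
    """Composite Simpson rule on [a, b] with n (even) subintervals."""
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3.0

def fractional_msd(t, K1=1.0):
    """Kernel average of the free-diffusion variance 2*K1*s (K = 1 assumed)."""
    upper = 12.0 * math.sqrt(2.0 * t)  # the kernel is Gaussian in s with std sqrt(2t)
    return simpson(lambda s: kernel_half(s, t) * 2.0 * K1 * s, 0.0, upper)

# The mean squared displacement grows like t^(1/2): 4 * K1 * sqrt(t / pi).
for t in (0.5, 2.0, 8.0):
    print(t, fractional_msd(t), 4.0 * math.sqrt(t / math.pi))
```

The sublinear growth in [math]\displaystyle{ t }[/math] is the signature of subdiffusion, in contrast to the linear growth [math]\displaystyle{ 2 K_1 t }[/math] of the ordinary solution.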

See also

- Lévy flight

- Lévy process

- Fractional calculus

- Anomalous diffusion

- Incomplete gamma function and Gamma distribution

- Poisson distribution

References

- [1] Template:Cite SSRN

- [2] Lévy, Paul (1925). Calcul des probabilités.

- [3] Penson, K. A.; Górska, K. (2010-11-17). "Exact and Explicit Probability Densities for One-Sided Lévy Stable Distributions". Physical Review Letters 105 (21): 210604. doi:10.1103/PhysRevLett.105.210604. PMID 21231282. Bibcode: 2010PhRvL.105u0604P.

- [4] Template:Cite SSRN

- [5] Mathai, A.M.; Haubold, H.J. (2017). Fractional and Multivariable Calculus. Springer Optimization and Its Applications. 122. Cham: Springer International Publishing. doi:10.1007/978-3-319-59993-9. ISBN 9783319599922.

- [6] Lihn, Stephen H. T. (2017-01-26). From Volatility Smile to Risk Neutral Probability and Closed Form Solution of Local Volatility Function. Rochester, NY. doi:10.2139/ssrn.2906522.

- [7] Pollard, Harry (1948-12-01). "The completely monotonic character of the Mittag-Leffler function $E_a \left( { - x} \right)$". Bulletin of the American Mathematical Society 54 (12): 1115–1117. doi:10.1090/S0002-9904-1948-09132-7. ISSN 0002-9904.

- [8] "DOUBLE THE FUN WITH CBOE's VVIX Index". https://cdn.cboe.com/resources/indices/documents/vvix-termstructure.pdf.

- [9] Saxena, R. K.; Mathai, A. M.; Haubold, H. J. (2009-09-01). "Mittag-Leffler Functions and Their Applications". arXiv:0909.0230 [math.CA].

- [10] Barkai, E. (2001-03-29). "Fractional Fokker-Planck equation, solution, and application". Physical Review E 63 (4): 046118. doi:10.1103/PhysRevE.63.046118. ISSN 1063-651X. PMID 11308923. Bibcode: 2001PhRvE..63d6118B.

External links

- R Package 'stabledist' by Diethelm Wuertz, Martin Maechler and Rmetrics core team members. Computes stable density, probability, quantiles, and random numbers. Updated Sept. 12, 2016.
