Folded normal distribution

|

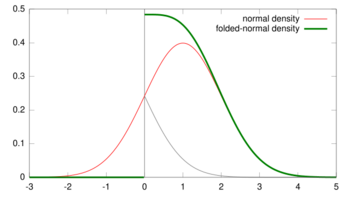

Probability density function  μ=1, σ=1 | |||

|

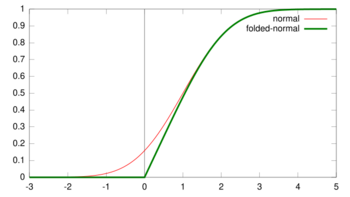

Cumulative distribution function  μ=1, σ=1 | |||

| Parameters |

μ ∈ R (location) σ2 > 0 (scale) | ||

|---|---|---|---|

| Support | x ∈ [0,∞) | ||

| [math]\displaystyle{ \frac{1}{\sigma\sqrt{2\pi}} \, e^{ -\frac{(x-\mu)^2}{2\sigma^2} } + \frac{1}{\sigma\sqrt{2\pi}} \, e^{ -\frac{(x+\mu)^2}{2\sigma^2} } }[/math] | |||

| CDF | [math]\displaystyle{ \frac{1}{2}\left[ \mbox{erf}\left(\frac{x+\mu}{\sigma\sqrt{2}}\right) + \mbox{erf}\left(\frac{x-\mu}{\sigma\sqrt{2}}\right)\right] }[/math] | ||

| Mean | [math]\displaystyle{ \mu_Y = \sigma \sqrt{\tfrac{2}{\pi}} \, e^{(-\mu^2/2\sigma^2)} + \mu \left(1 - 2\,\Phi(-\tfrac{\mu}{\sigma}) \right) }[/math] | ||

| Variance | [math]\displaystyle{ \sigma_Y^2 = \mu^2 + \sigma^2 - \mu_Y^2 }[/math] | ||

The folded normal distribution is a probability distribution related to the normal distribution. Given a normally distributed random variable X with mean μ and variance σ², the random variable Y = |X| has a folded normal distribution. Such a case may be encountered if only the magnitude of some variable is recorded, but not its sign. The distribution is called "folded" because probability mass to the left of x = 0 is folded over by taking the absolute value. In the physics of heat conduction, the folded normal distribution is a fundamental solution of the heat equation on the half space; it corresponds to having a perfect insulator on a hyperplane through the origin.

Definitions

Density

The probability density function (PDF) is given by

- [math]\displaystyle{ f_Y(x;\mu,\sigma^2)= \frac{1}{\sqrt{2\pi\sigma^2}} \, e^{ -\frac{(x-\mu)^2}{2\sigma^2} } + \frac{1}{\sqrt{2\pi\sigma^2}} \, e^{ -\frac{(x+\mu)^2}{2\sigma^2} } }[/math]

for x ≥ 0, and 0 everywhere else. An alternative formulation is given by

- [math]\displaystyle{ f\left(x \right)=\sqrt{\frac{2}{\pi\sigma^2}}e^{-\frac{\left(x^2+\mu^2 \right)}{2\sigma^2}}\cosh{\left(\frac{\mu x}{\sigma^2}\right)} }[/math],

where cosh is the hyperbolic cosine function. It follows that the cumulative distribution function (CDF) is given by:

- [math]\displaystyle{ F_Y(x; \mu, \sigma^2) = \frac{1}{2}\left[ \mbox{erf}\left(\frac{x+\mu}{\sqrt{2\sigma^2}}\right) + \mbox{erf}\left(\frac{x-\mu}{\sqrt{2\sigma^2}}\right)\right] }[/math]

for x ≥ 0, where erf() is the error function. This expression reduces to the CDF of the half-normal distribution when μ = 0.
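As a sketch of how the density and CDF above can be evaluated and cross-checked, the following R code implements both forms of the density and the erf form of the CDF using base R only. The helper names `dfold`, `dfold_cosh` and `pfold` are hypothetical (they do not come from any package), and erf is written through `pnorm` because base R has no erf function.

```r
## Hypothetical helpers: folded normal density (two forms) and CDF, base R only.
dfold <- function(x, mu, sigma2) {
  s <- sqrt(sigma2)
  ## sum of the N(mu, sigma^2) and N(-mu, sigma^2) densities; zero for x < 0
  ifelse(x < 0, 0, dnorm(x, mu, s) + dnorm(x, -mu, s))
}

dfold_cosh <- function(x, mu, sigma2) {
  ## alternative cosh formulation, valid for x >= 0
  sqrt(2 / (pi * sigma2)) * exp(-(x^2 + mu^2) / (2 * sigma2)) * cosh(mu * x / sigma2)
}

pfold <- function(x, mu, sigma2) {
  erf <- function(z) 2 * pnorm(z * sqrt(2)) - 1  # erf written via the normal CDF
  r <- sqrt(2 * sigma2)
  ifelse(x < 0, 0, 0.5 * (erf((x + mu) / r) + erf((x - mu) / r)))
}

x <- c(0, 0.5, 1, 2, 4)
all.equal(dfold(x, 1, 1), dfold_cosh(x, 1, 1))    # the two density forms agree
integrate(dfold, 0, 2, mu = 1, sigma2 = 1)$value  # numerically matches pfold(2, 1, 1)
pfold(2, 1, 1)
all.equal(pfold(x, 0, 4), 2 * pnorm(x / 2) - 1)   # mu = 0: half-normal CDF
```

The last line illustrates the reduction to the half-normal CDF when μ = 0.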

The mean of the folded distribution is then

- [math]\displaystyle{ \mu_Y = \sigma \sqrt{\frac{2}{\pi}} \,\, \exp\left(\frac{-\mu^2}{2\sigma^2}\right) + \mu \, \mbox{erf}\left(\frac{\mu}{\sqrt{2\sigma^2}}\right) }[/math]

or

- [math]\displaystyle{ \mu_Y = \sqrt{\frac{2}{\pi}}\sigma e^{-\frac{\mu^2}{2\sigma^2}}+\mu\left[1-2\Phi\left(-\frac{\mu}{\sigma}\right) \right] }[/math]

where [math]\displaystyle{ \Phi }[/math] is the normal cumulative distribution function:

- [math]\displaystyle{ \Phi(x)\; =\; \frac12\left[1 + \operatorname{erf}\left(\frac{x}{\sqrt{2}}\right)\right]. }[/math]

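The variance is then easily expressed in terms of the mean, since [math]\displaystyle{ Y^2 = X^2 }[/math] implies [math]\displaystyle{ E(Y^2) = \mu^2 + \sigma^2 }[/math]: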

- [math]\displaystyle{ \sigma_Y^2 = \mu^2 + \sigma^2 - \mu_Y^2. }[/math]
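The expressions for the mean and variance can be checked numerically. The R sketch below, using base functions only, evaluates both closed forms of μ_Y and compares them, and the variance, with numerical integration of x f(x) and x² f(x). The helper names `fold_mean`, `fold_mean_phi`, `fold_var` and `dfold` are hypothetical, and the parameter values are arbitrary.

```r
## Hypothetical helpers: closed-form mean and variance of the folded normal.
fold_mean <- function(mu, sigma2) {             # the form using erf
  erf <- function(z) 2 * pnorm(z * sqrt(2)) - 1
  sqrt(sigma2) * sqrt(2 / pi) * exp(-mu^2 / (2 * sigma2)) + mu * erf(mu / sqrt(2 * sigma2))
}
fold_mean_phi <- function(mu, sigma2) {         # the form using Phi
  s <- sqrt(sigma2)
  sqrt(2 / pi) * s * exp(-mu^2 / (2 * sigma2)) + mu * (1 - 2 * pnorm(-mu / s))
}
fold_var <- function(mu, sigma2) mu^2 + sigma2 - fold_mean(mu, sigma2)^2

## folded normal density, used only for the numerical check
dfold <- function(x, mu, sigma2) dnorm(x, mu, sqrt(sigma2)) + dnorm(x, -mu, sqrt(sigma2))

mu <- 1.5; sigma2 <- 2
m1 <- integrate(function(x) x   * dfold(x, mu, sigma2), 0, Inf)$value
m2 <- integrate(function(x) x^2 * dfold(x, mu, sigma2), 0, Inf)$value
c(closed_form = fold_mean(mu, sigma2), numerical = m1)
c(closed_form = fold_var(mu, sigma2),  numerical = m2 - m1^2)
```

The second moment `m2` should come out close to μ² + σ², which is why the variance formula needs only the mean.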

Both the mean (μ) and variance (σ²) of X in the original normal distribution can be interpreted as the location and scale parameters of Y in the folded distribution.

Properties

Mode

The mode of the distribution is the value of [math]\displaystyle{ x }[/math] for which the density is maximised. To find this value, we take the first derivative of the density with respect to [math]\displaystyle{ x }[/math] and set it equal to zero. The resulting equation has no closed-form solution, but it can be rearranged into the following non-linear equation:

[math]\displaystyle{ \frac{df(x)}{dx}=0 \Rightarrow -\frac{\left(x-\mu\right)}{\sigma^2}e^{-\frac{1}{2}\frac{\left(x-\mu\right)^2}{\sigma^2}}- \frac{\left(x+\mu\right)}{\sigma^2}e^{-\frac{1}{2}\frac{\left(x+\mu\right)^2}{\sigma^2}}=0 }[/math]

[math]\displaystyle{ x\left[e^{-\frac{1}{2}\frac{\left(x-\mu\right)^2}{\sigma^2}}+e^{-\frac{1}{2}\frac{\left(x+\mu\right)^2}{\sigma^2}}\right]- \mu \left[e^{-\frac{1}{2}\frac{\left(x-\mu\right)^2}{\sigma^2}}-e^{-\frac{1}{2}\frac{\left(x+\mu\right)^2}{\sigma^2}}\right]=0 }[/math]

[math]\displaystyle{ x\left(1+e^{-\frac{2\mu x}{\sigma^2}}\right)-\mu\left(1-e^{-\frac{2\mu x}{\sigma^2}}\right)=0 }[/math]

[math]\displaystyle{ \left(\mu+x\right)e^{-\frac{2\mu x}{\sigma^2}}=\mu-x }[/math]

[math]\displaystyle{ x=-\frac{\sigma^2}{2\mu}\log{\frac{\mu-x}{\mu+x}} }[/math].

Tsagris et al. (2014) observed from numerical investigation that when [math]\displaystyle{ \mu\lt \sigma }[/math], the maximum is attained at [math]\displaystyle{ x=0 }[/math], and when [math]\displaystyle{ \mu }[/math] becomes greater than [math]\displaystyle{ 3\sigma }[/math], the maximum approaches [math]\displaystyle{ \mu }[/math]. This is to be expected: when μ is large relative to σ, almost none of the mass of X lies below zero, so the folded normal is close to the normal distribution N(μ, σ²). When the parameters are estimated by numerical optimisation, negative values of the variance can be avoided by optimising over the logarithm of σ² and exponentiating it. Alternatively, a constraint can be imposed, for example by setting the log-likelihood to NA or to a very small value whenever the optimiser proposes a negative variance.
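The rearranged condition (μ + x)e^{-2μx/σ²} = μ − x can be solved numerically. Dividing the earlier form of the condition by the sum of the two exponentials shows it is equivalent to x = μ tanh(μx/σ²), and since tanh(u) < u for u > 0, a positive solution exists only when μ² > σ², which is consistent with the numerical observation above. The R sketch below uses base `uniroot()` for the positive root and cross-checks it against `optimize()` applied to the density; `fold_mode` and `dfold` are hypothetical helper names.

```r
## Hypothetical helper: mode of the folded normal from the rearranged
## first-order condition (mu + x) * exp(-2 * mu * x / sigma2) = mu - x.
fold_mode <- function(mu, sigma2) {
  mu <- abs(mu)                    # the distribution depends on mu only through |mu|
  if (mu^2 <= sigma2) return(0)    # no positive solution: the density peaks at x = 0
  g <- function(x) (mu + x) * exp(-2 * mu * x / sigma2) - (mu - x)
  ## x = 0 always solves g(x) = 0; the non-trivial root lies in (0, mu):
  ## g < 0 just above 0 when mu^2 > sigma2, and g(mu) > 0
  uniroot(g, lower = 1e-8 * mu, upper = mu, tol = 1e-12)$root
}

dfold <- function(x, mu, sigma2) dnorm(x, mu, sqrt(sigma2)) + dnorm(x, -mu, sqrt(sigma2))

## compare with direct numerical maximisation of the density
opt <- optimize(dfold, c(0, 10), mu = 2, sigma2 = 1, maximum = TRUE, tol = 1e-10)
c(root = fold_mode(2, 1), optimize = opt$maximum)
fold_mode(0.5, 1)   # mu < sigma: the mode is 0
fold_mode(4, 1)     # mu > 3 sigma: the mode is very close to mu
```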

- The characteristic function is given by

[math]\displaystyle{ \varphi_x\left(t\right)=e^{\frac{-\sigma^2 t^2}{2}+i\mu t}\Phi\left(\frac{\mu}{\sigma}+i\sigma t \right) + e^{-\frac{\sigma^2 t^2}{2}-i\mu t}\Phi\left(-\frac{\mu}{\sigma}+i\sigma t \right) }[/math].

- The moment generating function is given by

[math]\displaystyle{ M_x\left(t\right)=\varphi_x\left(-it\right)=e^{\frac{\sigma^2 t^2}{2}+\mu t}\Phi\left(\frac{\mu}{\sigma}+\sigma t \right) + e^{\frac{\sigma^2 t^2}{2}-\mu t}\Phi\left(-\frac{\mu}{\sigma}+\sigma t \right) }[/math].

- The cumulant generating function is given by

[math]\displaystyle{ K_x\left(t\right)=\log{M_x\left(t\right)}= \left(\frac{\sigma^2t^2}{2}+\mu t\right) + \log{\left\lbrace 1-\Phi\left(-\frac{\mu}{\sigma}-\sigma t \right) + e^{-2\mu t}\left[1-\Phi\left(\frac{\mu}{\sigma}-\sigma t \right) \right] \right\rbrace} }[/math].

- The Laplace transformation is given by

[math]\displaystyle{ E\left(e^{-tx}\right)=e^{\frac{\sigma^2t^2}{2}-\mu t}\left[1-\Phi\left(-\frac{\mu}{\sigma}+\sigma t \right) \right]+ e^{\frac{\sigma^2 t^2}{2}+\mu t}\left[1-\Phi\left(\frac{\mu}{\sigma}+\sigma t \right) \right] }[/math].

- The Fourier transform is given by

[math]\displaystyle{ \hat{f}\left(t\right)=\varphi_x\left(-2\pi t\right)= e^{\frac{-4\pi^2\sigma^2 t^2}{2}- i2\pi \mu t}\left[1-\Phi\left(-\frac{\mu}{\sigma}+i2\pi \sigma t \right) \right]+ e^{-\frac{4\pi^2 \sigma^2 t^2}{2}+i2\pi\mu t}\left[1-\Phi\left(\frac{\mu}{\sigma}+i2\pi \sigma t \right) \right] }[/math].
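The real-valued transforms in this list can be checked against numerical integration. The R sketch below codes the moment generating function M(t) directly from its formula, writes K(t) = log M(t) with the factor exp(σ²t²/2 + μt) pulled out of M(t), and compares M(t) with E(e^{tY}) computed by `integrate()`; a negative t gives the Laplace transform, since E(e^{-tY}) = M(−t). The helper names `fold_mgf`, `fold_cgf`, `mgf_num` and `dfold` are hypothetical, and the parameter values are arbitrary.

```r
## Hypothetical helpers: closed-form MGF and CGF of the folded normal.
fold_mgf <- function(t, mu, sigma2) {
  s <- sqrt(sigma2)
  exp(sigma2 * t^2 / 2 + mu * t) * pnorm(mu / s + s * t) +
    exp(sigma2 * t^2 / 2 - mu * t) * pnorm(-mu / s + s * t)
}
## K(t) = log M(t), with the factor exp(sigma^2 t^2 / 2 + mu t) pulled out of M(t)
fold_cgf <- function(t, mu, sigma2) {
  s <- sqrt(sigma2)
  (sigma2 * t^2 / 2 + mu * t) +
    log(1 - pnorm(-mu / s - s * t) + exp(-2 * mu * t) * (1 - pnorm(mu / s - s * t)))
}

dfold <- function(x, mu, sigma2) dnorm(x, mu, sqrt(sigma2)) + dnorm(x, -mu, sqrt(sigma2))
## E(exp(t * Y)) by numerical integration, for comparison
mgf_num <- function(t, mu, sigma2)
  integrate(function(x) exp(t * x) * dfold(x, mu, sigma2), 0, Inf)$value

mu <- 1; sigma2 <- 1.5
for (tt in c(-0.7, 0.3, 0.8)) {   # tt = -0.7 checks the Laplace transform at 0.7
  cat(sprintf("t = %5.2f  closed form = %.8f  numerical = %.8f\n",
              tt, fold_mgf(tt, mu, sigma2), mgf_num(tt, mu, sigma2)))
}
```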

Related distributions

- When μ = 0, the distribution of Y is a half-normal distribution.

- The random variable (Y/σ)² has a noncentral chi-squared distribution with 1 degree of freedom and noncentrality equal to (μ/σ)².

- The folded normal distribution can also be seen as the limit of the folded non-standardized t distribution as the degrees of freedom go to infinity.

- There is a bivariate version developed by Psarakis and Panaretos (2001) as well as a multivariate version developed by Chakraborty and Chatterjee (2013).

- The Rice distribution is a multivariate generalization of the folded normal distribution.

- The modified half-normal distribution[1] has the pdf on [math]\displaystyle{ (0, \infty) }[/math] given by [math]\displaystyle{ f(x)= \frac{2\beta^{\frac{\alpha}{2}} x^{\alpha-1} \exp(-\beta x^2+ \gamma x )}{\Psi{\left(\frac{\alpha}{2}, \frac{ \gamma}{\sqrt{\beta}}\right)}} }[/math], where [math]\displaystyle{ \Psi(\alpha,z)={}_1\Psi_1\left(\begin{matrix}\left(\alpha,\frac{1}{2}\right)\\(1,0)\end{matrix};z \right) }[/math] denotes the Fox–Wright Psi function.
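Two of the relationships above can be checked with base R distribution functions. In the sketch below, `pfold` is a hypothetical helper implementing F_Y(x) = P(−x ≤ X ≤ x); the noncentral chi-squared probabilities come from base `pchisq()` with its `ncp` argument, and the parameter values are arbitrary.

```r
## Hypothetical helper: F_Y(x) = P(-x <= X <= x) for X ~ N(mu, sigma^2).
pfold <- function(x, mu, sigma2) {
  s <- sqrt(sigma2)
  pnorm(x, mu, s) - pnorm(-x, mu, s)
}

mu <- 1.3; sigma2 <- 0.8; s <- sqrt(sigma2)
q <- c(0.1, 1, 3, 8)

## (Y / sigma)^2 <= q  is the same event as  Y <= sigma * sqrt(q)
p_folded <- pfold(s * sqrt(q), mu, sigma2)
p_chisq  <- pchisq(q, df = 1, ncp = (mu / s)^2)
rbind(p_folded, p_chisq)

## mu = 0: the half-normal CDF 2 * Phi(x / sigma) - 1
all.equal(pfold(q, 0, sigma2), 2 * pnorm(q / s) - 1)
```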

Statistical Inference

Estimation of parameters

There are a few ways of estimating the parameters of the folded normal. All of them are essentially maximum likelihood estimation, but in some cases the log-likelihood is maximised numerically, whereas in others a root of an equation is sought. The log-likelihood of the folded normal, given a sample [math]\displaystyle{ x_i }[/math] of size [math]\displaystyle{ n }[/math], can be written as follows:

[math]\displaystyle{ l = -\frac{n}{2}\log{2\pi\sigma^2}+\sum_{i=1}^n\log{\left[e^{-\frac{\left(x_i-\mu\right)^2}{2\sigma^2}}+ e^{-\frac{\left(x_i+\mu\right)^2}{2\sigma^2}} \right] } }[/math]

[math]\displaystyle{ l = -\frac{n}{2}\log{2\pi\sigma^2}+\sum_{i=1}^n\log{\left[e^{-\frac{\left(x_i-\mu\right)^2}{2\sigma^2}} \left(1+e^{-\frac{\left(x_i+\mu\right)^2}{2\sigma^2}}e^{\frac{\left(x_i-\mu\right)^2}{2\sigma^2}}\right)\right]} }[/math]

[math]\displaystyle{ l = -\frac{n}{2}\log{2\pi\sigma^2}-\sum_{i=1}^n\frac{\left(x_i-\mu\right)^2}{2\sigma^2}+\sum_{i=1}^n\log{\left(1+e^{-\frac{2\mu x_i}{\sigma^2}} \right)} }[/math]
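The equivalence of the first and last forms of the log-likelihood can be confirmed numerically. The R sketch below codes both (the helper names `loglik_sum` and `loglik_factored` are hypothetical) and compares them with the sum of log densities on simulated data; the simulation parameters μ = 2, σ² = 1.5 are arbitrary.

```r
## Hypothetical helpers: the first and last forms of the log-likelihood above.
loglik_sum <- function(x, mu, sigma2) {
  n <- length(x)
  -n / 2 * log(2 * pi * sigma2) +
    sum(log(exp(-(x - mu)^2 / (2 * sigma2)) + exp(-(x + mu)^2 / (2 * sigma2))))
}
loglik_factored <- function(x, mu, sigma2) {
  n <- length(x)
  ## log1p(exp(.)) evaluates log(1 + e^{-2 mu x / sigma^2}); with mu >= 0 and
  ## x >= 0 the exponent is <= 0, so exp() cannot overflow
  -n / 2 * log(2 * pi * sigma2) - sum((x - mu)^2) / (2 * sigma2) +
    sum(log1p(exp(-2 * mu * x / sigma2)))
}

## simulated data with arbitrary parameters mu = 2, sigma^2 = 1.5
set.seed(1)
x <- abs(rnorm(200, mean = 2, sd = sqrt(1.5)))
c(first_form  = loglik_sum(x, 2, 1.5),
  last_form   = loglik_factored(x, 2, 1.5),
  via_density = sum(log(dnorm(x, 2, sqrt(1.5)) + dnorm(x, -2, sqrt(1.5)))))
```

Evaluating `loglik_sum` at −μ gives the same value, which reflects the point made below that the sign of μ does not matter.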

In R (programming language), the MLE can be obtained very quickly with the command foldnorm.mle from the package Rfast. Alternatively, the commands optim or nlm will fit this distribution. The maximisation is easy, since only two parameters ([math]\displaystyle{ \mu }[/math] and [math]\displaystyle{ \sigma^2 }[/math]) are involved. Note that both positive and negative values of [math]\displaystyle{ \mu }[/math] are acceptable, since [math]\displaystyle{ \mu }[/math] belongs to the real line; the sign does not matter, because the density is unchanged when [math]\displaystyle{ \mu }[/math] is replaced by [math]\displaystyle{ -\mu }[/math]. The following R code minimises the negative log-likelihood in its cosh form, with the variance parameterised on the log scale:

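```r
folded <- function(y) {
  ## y is a vector of non-negative data
  n <- length(y)  ## sample size
  sy2 <- sum(y^2)
  ## negative log-likelihood in the cosh form;
  ## para = c(mu, log(sigma^2)), so sigma^2 = exp(para[2]) stays positive
  sam <- function(para, n, sy2) {
    me <- para[1]; se <- exp(para[2])
    z <- abs(me * y / se)
    ## log(cosh(z)) = z + log(1 + exp(-2z)) - log(2), which avoids overflow for large z
    logcosh <- z + log1p(exp(-2 * z)) - log(2)
    - n/2 * log(2/pi/se) + n * me^2 / 2 / se + sy2 / 2 / se - sum(logcosh)
  }
  ## start from the sample mean and the log of the sample variance
  mod <- optim(c(mean(y), log(var(y))), sam, n = n, sy2 = sy2,
               control = list(maxit = 2000))
  mod <- optim(mod$par, sam, n = n, sy2 = sy2, control = list(maxit = 20000))
  result <- c(-mod$value, mod$par[1], exp(mod$par[2]))
  names(result) <- c("log-likelihood", "mu", "sigma squared")
  result
}
```

The partial derivatives of the log-likelihood are written as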

[math]\displaystyle{ \frac{\partial l}{\partial \mu} = \frac{\sum_{i=1}^n\left(x_i-\mu \right)}{\sigma^2}- \frac{2}{\sigma^2}\sum_{i=1}^n\frac{x_ie^{\frac{-2\mu x_i}{\sigma^2}}}{1+e^{\frac{-2\mu x_i}{\sigma^2}}} }[/math]

[math]\displaystyle{ \frac{\partial l}{\partial \mu} = \frac{\sum_{i=1}^n\left(x_i-\mu \right)}{\sigma^2}-\frac{2}{\sigma^2}\sum_{i=1}^n\frac{x_i}{1+e^{\frac{2\mu x_i}{\sigma^2}}} \ \ \text{and} }[/math]

[math]\displaystyle{ \frac{\partial l}{\partial \sigma^2} = -\frac{n}{2\sigma^2}+\frac{\sum_{i=1}^n\left(x_i-\mu \right)^2}{2\sigma^4}+ \frac{2\mu}{\sigma^4}\sum_{i=1}^n\frac{x_ie^{-\frac{2\mu x_i}{\sigma^2}}}{1+e^{-\frac{2\mu x_i}{\sigma^2}}} }[/math]

[math]\displaystyle{ \frac{\partial l}{\partial \sigma^2} = -\frac{n}{2\sigma^2}+\frac{\sum_{i=1}^n\left(x_i-\mu \right)^2}{2\sigma^4}+ \frac{2\mu}{\sigma^4}\sum_{i=1}^n\frac{x_i}{1+e^{\frac{2\mu x_i}{\sigma^2}}} }[/math].
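A quick way to confirm partial derivatives like these is to compare them with central finite differences of the log-likelihood. The R sketch below codes the second form of each derivative; the helper names `loglik` and `score`, the simulated data and the evaluation point are all arbitrary choices for illustration.

```r
## Hypothetical helpers: log-likelihood and the analytic score, using the
## x_i / (1 + e^{2 mu x_i / sigma^2}) form of the partial derivatives above.
loglik <- function(x, mu, sigma2)
  sum(log(dnorm(x, mu, sqrt(sigma2)) + dnorm(x, -mu, sqrt(sigma2))))

score <- function(x, mu, sigma2) {
  w <- x / (1 + exp(2 * mu * x / sigma2))
  c(mu     = sum(x - mu) / sigma2 - 2 / sigma2 * sum(w),
    sigma2 = -length(x) / (2 * sigma2) + sum((x - mu)^2) / (2 * sigma2^2) +
             2 * mu / sigma2^2 * sum(w))
}

## simulated data and an arbitrary evaluation point
set.seed(2)
x <- abs(rnorm(100, mean = 1, sd = 1.2))
mu <- 0.8; s2 <- 1.1; h <- 1e-5

## central finite differences of the log-likelihood
fd <- c(mu     = (loglik(x, mu + h, s2) - loglik(x, mu - h, s2)) / (2 * h),
        sigma2 = (loglik(x, mu, s2 + h) - loglik(x, mu, s2 - h)) / (2 * h))
rbind(analytic = score(x, mu, s2), finite_difference = fd)
```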

By equating the first partial derivative of the log-likelihood to zero, we obtain the relationship

[math]\displaystyle{ \sum_{i=1}^n\frac{x_i}{1+e^{\frac{2\mu x_i}{\sigma^2}}}=\frac{\sum_{i=1}^n\left(x_i-\mu \right)}{2} }[/math].

Note that the above equation has three solutions: one at zero and two more of opposite sign. Substituting the above equation into the partial derivative of the log-likelihood with respect to [math]\displaystyle{ \sigma^2 }[/math] and equating it to zero, we get the following expression for the variance

[math]\displaystyle{ \sigma^2=\frac{\sum_{i=1}^n\left(x_i-\mu\right)^2}{n}+\frac{2\mu\sum_{i=1}^n\left(x_i-\mu\right)}{n}=\frac{\sum_{i=1}^n\left(x_i^2-\mu^2\right)}{n}=\frac{\sum_{i=1}^nx_i^2}{n}-\mu^2 }[/math],

which has the same form as the maximum likelihood estimator of the variance of a normal distribution. A main difference here is that the estimators of [math]\displaystyle{ \mu }[/math] and [math]\displaystyle{ \sigma^2 }[/math] are not statistically independent. The above relationships can be used to obtain the maximum likelihood estimates in an efficient recursive way. We start with an initial value for [math]\displaystyle{ \sigma^2 }[/math] and find the positive root ([math]\displaystyle{ \mu }[/math]) of the equation obtained from the first partial derivative. Then we compute an updated value of [math]\displaystyle{ \sigma^2 }[/math] from the variance expression. The procedure is repeated until the change in the log-likelihood value is negligible. An easier and more efficient alternative is to perform a search algorithm. The first-order condition for [math]\displaystyle{ \mu }[/math] can be written more compactly as

[math]\displaystyle{ 2\sum_{i=1}^n\frac{x_i}{1+e^{\frac{2\mu x_i}{\sigma^2}}}- \sum_{i=1}^n\frac{x_i\left(1+e^{\frac{2\mu x_i}{\sigma^2}}\right)}{1+e^{\frac{2\mu x_i}{\sigma^2}}}+n\mu = 0 }[/math]

[math]\displaystyle{ \sum_{i=1}^n\frac{x_i\left(1-e^{\frac{2\mu x_i}{\sigma^2}}\right)}{1+e^{\frac{2\mu x_i}{\sigma^2}}}+n\mu = 0 }[/math].

With [math]\displaystyle{ \sigma^2 }[/math] replaced by [math]\displaystyle{ \sum_{i=1}^n x_i^2/n-\mu^2 }[/math] from the variance expression above, this equation depends on [math]\displaystyle{ \mu }[/math] alone, so the optimisation of the log-likelihood with respect to the two parameters has turned into a root search for a function of one variable. This is, of course, the same root search as before. Tsagris et al. (2014) noted that this equation has three roots for [math]\displaystyle{ \mu }[/math]: [math]\displaystyle{ -\hat{\mu} }[/math] and [math]\displaystyle{ +\hat{\mu} }[/math], which are the maximum likelihood estimates, and 0, which corresponds to a minimum of the log-likelihood.
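The one-dimensional root search can be sketched in R as follows. This is an illustration under stated assumptions, not the Rfast implementation or the exact procedure of Tsagris et al. (2014): σ² is replaced by Σx_i²/n − μ², the left-hand side of the last equation is rewritten using x(1 − e^{2a})/(1 + e^{2a}) = −x tanh(a), and the resulting function of μ is passed to base R's `uniroot()`. The result is compared with a direct `optim()` fit. The helper names `fold_mle_root` and `negll`, the search interval and the simulated data are all assumptions made for the example.

```r
## Sketch: MLE by a one-dimensional root search in mu, with sigma^2 profiled
## out as sigma^2(mu) = mean(x^2) - mu^2. fold_mle_root() is a hypothetical name.
fold_mle_root <- function(x) {
  n <- length(x)
  m2 <- mean(x^2)
  sig2 <- function(mu) m2 - mu^2
  ## minus the left-hand side of the last equation: sum x_i tanh(mu x_i / sigma^2) - n mu
  g <- function(mu) sum(x * tanh(mu * x / sig2(mu))) - n * mu
  ## assumes the data support a non-zero mu, so that g > 0 at the lower end and
  ## g < 0 near sqrt(m2); otherwise uniroot() stops with an error
  mu_hat <- uniroot(g, lower = 0.25 * sqrt(m2), upper = (1 - 1e-8) * sqrt(m2),
                    tol = 1e-10)$root
  c(mu = mu_hat, sigma2 = sig2(mu_hat))
}

## direct maximisation for comparison; p = c(mu, log(sigma^2))
negll <- function(p, x) {
  s2 <- exp(p[2])
  -sum(log(dnorm(x, p[1], sqrt(s2)) + dnorm(x, -p[1], sqrt(s2))))
}

## simulated data with arbitrary parameters mu = 2, sigma^2 = 1
set.seed(3)
x <- abs(rnorm(2000, mean = 2, sd = 1))
est <- fold_mle_root(x)
fit <- optim(c(mean(x), log(var(x))), negll, x = x, method = "BFGS",
             control = list(reltol = 1e-12))
rbind(root_search = est, optim = c(fit$par[1], exp(fit$par[2])))
```

Both approaches should land on the same positive estimate; the root search only needs a bracketing interval, which is why it is described as easier.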

See also

- Folded cumulative distribution

- Half-normal distribution

- Modified half-normal distribution[1]

- Truncated normal distribution

References

- [1] Sun, Jingchao; Kong, Maiying; Pal, Subhadip (22 June 2021). "The Modified-Half-Normal distribution: Properties and an efficient sampling scheme". Communications in Statistics - Theory and Methods 52 (5): 1591–1613. doi:10.1080/03610926.2021.1934700. ISSN 0361-0926. https://figshare.com/articles/journal_contribution/The_Modified-Half-Normal_distribution_Properties_and_an_efficient_sampling_scheme/14825266/1/files/28535884.pdf.

- Tsagris, M.; Beneki, C.; Hassani, H. (2014). "On the folded normal distribution". Mathematics 2 (1): 12–28. doi:10.3390/math2010012.

- (1961). "The Folded Normal Distribution". Technometrics 3 (4): 543–550. doi:10.2307/1266560.

- Johnson, N. L. (1962). "The folded normal distribution: accuracy of the estimation by maximum likelihood". Technometrics 4 (2): 249–256. doi:10.2307/1266622.

- Nelson, L. S. (1980). "The Folded Normal Distribution". Journal of Quality Technology 12 (4): 236–238. doi:10.1080/00224065.1980.11980971.

- Elandt, R. C. (1961). "The folded normal distribution: two methods of estimating parameters from moments". Technometrics 3 (4): 551–562. doi:10.2307/1266561.

- Lin, P. C. (2005). "Application of the generalized folded-normal distribution to the process capability measures". International Journal of Advanced Manufacturing Technology 26 (7–8): 825–830. doi:10.1007/s00170-003-2043-x.

- Psarakis, S.; Panaretos, J. (1990). "The folded t distribution". Communications in Statistics - Theory and Methods 19 (7): 2717–2734. doi:10.1080/03610929008830342.

- Psarakis, S.; Panaretos, J. (2001). "On some bivariate extensions of the folded normal and the folded-t distributions". Journal of Applied Statistical Science 10 (2): 119–136.

- Chakraborty, A. K.; Chatterjee, M. (2013). "On multivariate folded normal distribution". Sankhyā: The Indian Journal of Statistics, Series B 75 (1): 1–15.
