Large margin nearest neighbor

Large margin nearest neighbor (LMNN)[1] classification is a statistical machine learning algorithm for metric learning. It learns a pseudometric designed for k-nearest neighbor classification. The algorithm is based on semidefinite programming, a sub-class of convex optimization.

The goal of supervised learning (more specifically, classification) is to learn a decision rule that can categorize data instances into pre-defined classes. The k-nearest neighbor rule assumes a training data set of labeled instances (i.e. the classes are known). It classifies a new data instance with the class obtained from the majority vote of the k closest (labeled) training instances. Closeness is measured with a pre-defined metric. LMNN learns this global (pseudo-)metric in a supervised fashion to improve the classification accuracy of the k-nearest neighbor rule.
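The k-nearest neighbor rule described above can be sketched in a few lines. This is a minimal illustration that assumes the plain squared Euclidean distance as the pre-defined metric; the function name `knn_predict` and the toy data are hypothetical and not part of any reference implementation.

```python
import numpy as np
from collections import Counter

def knn_predict(X_train, y_train, x_new, k=3):
    """Classify x_new by majority vote among its k closest training points."""
    X_train = np.asarray(X_train, dtype=float)
    diffs = X_train - np.asarray(x_new, dtype=float)
    sq_dists = (diffs ** 2).sum(axis=1)            # squared Euclidean distances
    nearest = np.argsort(sq_dists, kind="stable")[:k]
    votes = Counter(y_train[i] for i in nearest)
    # On a tie, Counter returns the label that was counted first.
    return votes.most_common(1)[0][0]

# Toy training set with two well separated classes (assumed values).
X_train = [[0, 0], [0, 1], [1, 0], [5, 5], [5, 6], [6, 5]]
y_train = ["a", "a", "a", "b", "b", "b"]

label_near_origin = knn_predict(X_train, y_train, [0.5, 0.5], k=3)
label_far = knn_predict(X_train, y_train, [5.2, 5.1], k=3)
```

Replacing the squared Euclidean distance in `sq_dists` with a learned distance is the only change needed to use a different metric with the same voting rule.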

Setup

The main intuition behind LMNN is to learn a pseudometric under which all data instances in the training set are surrounded by at least k instances that share the same class label. If this is achieved, the leave-one-out error (a special case of cross validation) is minimized. Let the training data consist of a data set [math]\displaystyle{ D=\{(\vec x_1,y_1),\dots,(\vec x_n,y_n)\}\subset R^d\times C }[/math], where the set of possible class categories is [math]\displaystyle{ C=\{1,\dots,c\} }[/math].

The algorithm learns a pseudometric of the type

- [math]\displaystyle{ d(\vec x_i,\vec x_j)=(\vec x_i-\vec x_j)^\top\mathbf{M}(\vec x_i-\vec x_j) }[/math].

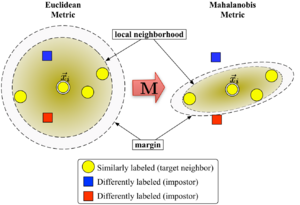

For [math]\displaystyle{ d(\cdot,\cdot) }[/math] to be well defined, the matrix [math]\displaystyle{ \mathbf{M} }[/math] needs to be positive semi-definite. The Euclidean metric is a special case, where [math]\displaystyle{ \mathbf{M} }[/math] is the identity matrix. This generalization is often (falsely) referred to as a Mahalanobis metric.

Figure 1 illustrates the effect of the metric under varying [math]\displaystyle{ \mathbf{M} }[/math]. The two circles show the set of points with equal distance to the center [math]\displaystyle{ \vec x_i }[/math]. In the Euclidean case this set is a circle, whereas under the modified (Mahalanobis) metric it becomes an ellipsoid.
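As a small numerical illustration of the formula above, the following sketch evaluates the distance for two choices of the matrix. The helper name `lmnn_distance` and the matrix values are assumptions chosen for the example.

```python
import numpy as np

def lmnn_distance(x_i, x_j, M):
    """Return (x_i - x_j)^T M (x_i - x_j) for a positive semi-definite M."""
    diff = np.asarray(x_i, dtype=float) - np.asarray(x_j, dtype=float)
    return float(diff @ M @ diff)

x_i = np.array([0.0, 0.0])
x_j = np.array([1.0, 2.0])
x_k = np.array([2.0, 1.0])

M_identity = np.eye(2)                  # Euclidean special case
M_stretch = np.array([[4.0, 0.0],       # illustrative PSD matrix (assumed values)
                      [0.0, 1.0]])

# Under the identity, x_j and x_k are equally far from x_i.
d_euclid_j = lmnn_distance(x_i, x_j, M_identity)
d_euclid_k = lmnn_distance(x_i, x_k, M_identity)

# Under M_stretch, differences along the first axis count four times as much,
# so the two points are no longer equidistant.
d_stretch_j = lmnn_distance(x_i, x_j, M_stretch)
d_stretch_k = lmnn_distance(x_i, x_k, M_stretch)
```

The two points lie on the same circle around the center in the Euclidean case but on different ellipses under the modified matrix, which is the effect the figure depicts.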

The algorithm distinguishes between two types of special data points: target neighbors and impostors.

Target neighbors

Target neighbors are selected before learning. Each instance [math]\displaystyle{ \vec x_i }[/math] has exactly [math]\displaystyle{ k }[/math] different target neighbors within [math]\displaystyle{ D }[/math], which all share the same class label [math]\displaystyle{ y_i }[/math]. The target neighbors are the data points that should become nearest neighbors under the learned metric. Let us denote the set of target neighbors for a data point [math]\displaystyle{ \vec x_i }[/math] as [math]\displaystyle{ N_i }[/math].
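The text does not fix how target neighbors are chosen. A common choice, assumed here, is to take the k nearest points of the same class under the Euclidean distance. The function name `select_target_neighbors` and the toy data are hypothetical.

```python
import numpy as np

def select_target_neighbors(X, y, k):
    """For each row of X, return the indices of its k nearest same-class
    points under the Euclidean distance (an assumed selection rule)."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    n = X.shape[0]
    # Pairwise squared Euclidean distances, shape (n, n).
    sq_dists = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=2)
    targets = np.empty((n, k), dtype=int)
    for i in range(n):
        # Same-class candidates, excluding the point itself.
        candidates = np.flatnonzero((y == y[i]) & (np.arange(n) != i))
        if candidates.size < k:
            raise ValueError("point %d has fewer than k same-class neighbors" % i)
        order = np.argsort(sq_dists[i, candidates], kind="stable")
        targets[i] = candidates[order[:k]]
    return targets

X = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 3.0],
              [5.0, 5.0], [6.0, 5.0], [5.0, 7.0]])
y = np.array([0, 0, 0, 1, 1, 1])
N = select_target_neighbors(X, y, k=1)   # row i holds the set N_i
```

Because the selection happens once before learning, the sets stay fixed while the matrix is optimized.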

Impostors

An impostor of a data point [math]\displaystyle{ \vec x_i }[/math] is another data point [math]\displaystyle{ \vec x_j }[/math] with a different class label (i.e. [math]\displaystyle{ y_i\neq y_j }[/math]) which is one of the nearest neighbors of [math]\displaystyle{ \vec x_i }[/math]. During learning the algorithm tries to minimize the number of impostors for all data instances in the training set.
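The definition above can be checked directly for a given matrix. The sketch below lists, for each point, the differently labeled points among its k nearest neighbors. The name `find_impostors`, the toy data, and the matrix values are assumptions for illustration.

```python
import numpy as np

def find_impostors(X, y, M, k):
    """Return (i, l) pairs where x_l is among the k nearest neighbors of x_i
    under the matrix M but has a different class label."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    diffs = X[:, None, :] - X[None, :, :]
    # dists[i, j] = (x_i - x_j)^T M (x_i - x_j)
    dists = np.einsum("ijp,pq,ijq->ij", diffs, M, diffs)
    np.fill_diagonal(dists, np.inf)      # a point is not its own neighbor
    pairs = []
    for i in range(X.shape[0]):
        nearest = np.argsort(dists[i], kind="stable")[:k]
        pairs.extend((i, int(l)) for l in nearest if y[l] != y[i])
    return pairs

# Two classes separated only along the first axis (assumed toy data).
X = np.array([[0.0, 0.0], [0.0, 3.0], [1.0, 0.0], [1.0, 3.0]])
y = np.array([0, 0, 1, 1])

impostors_euclid = find_impostors(X, y, np.eye(2), k=1)
impostors_scaled = find_impostors(X, y, np.diag([16.0, 1.0]), k=1)
```

In this toy example every point has an impostor under the Euclidean metric, while a matrix that stretches the first axis leaves none.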

Algorithm

LMNN optimizes the matrix [math]\displaystyle{ \mathbf{M} }[/math] with the help of semidefinite programming. The objective is twofold: for every data point [math]\displaystyle{ \vec x_i }[/math], the target neighbors should be close and the impostors should be far away. Figure 1 shows the effect of such an optimization on an illustrative example. The learned metric causes the input vector [math]\displaystyle{ \vec x_i }[/math] to be surrounded by training instances of the same class. If it were a test point, it would be classified correctly under the [math]\displaystyle{ k=3 }[/math] nearest neighbor rule.

The first optimization goal is achieved by minimizing the average distance between instances and their target neighbors

- [math]\displaystyle{ \sum_{i,j\in N_i} d(\vec x_i,\vec x_j) }[/math].

The second goal is achieved by penalizing distances to impostors [math]\displaystyle{ \vec x_l }[/math] that are less than one unit further away than target neighbors [math]\displaystyle{ \vec x_j }[/math] (and therefore pushing them out of the local neighborhood of [math]\displaystyle{ \vec x_i }[/math]). The resulting value to be minimized can be stated as:

- [math]\displaystyle{ \sum_{i,j \in N_i,l, y_l\neq y_i}[d(\vec x_i,\vec x_j)+1-d(\vec x_i,\vec x_l)]_{+} }[/math]

Here [math]\displaystyle{ [\cdot]_{+}=\max(\cdot,0) }[/math] denotes the hinge loss, which ensures that impostors are not penalized once they lie outside the margin. The margin of exactly one unit fixes the scale of the matrix [math]\displaystyle{ \mathbf{M} }[/math]. Because the distance is linear in [math]\displaystyle{ \mathbf{M} }[/math], any alternative margin [math]\displaystyle{ c\gt 0 }[/math] would only rescale the solution [math]\displaystyle{ \mathbf{M} }[/math] by a factor of [math]\displaystyle{ c }[/math].
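The two sums above can be evaluated for a fixed matrix as follows. This is a sketch under stated assumptions: the function name `lmnn_terms`, the `targets` array (row i holds the indices in N_i), and the toy data are hypothetical.

```python
import numpy as np

def lmnn_terms(X, y, M, targets):
    """Return (pull, push): the summed target-neighbor distances and the
    summed hinge penalties over all differently labeled points."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    diffs = X[:, None, :] - X[None, :, :]
    d = np.einsum("ijp,pq,ijq->ij", diffs, M, diffs)   # pairwise distances
    pull, push = 0.0, 0.0
    for i in range(X.shape[0]):
        others = np.flatnonzero(y != y[i])             # all l with y_l != y_i
        for j in targets[i]:
            pull += d[i, j]
            # Hinge: only points closer than d(i, j) + 1 contribute.
            push += np.maximum(d[i, j] + 1.0 - d[i, others], 0.0).sum()
    return float(pull), float(push)

X = np.array([[0.0, 0.0], [0.0, 3.0], [1.0, 0.0], [1.0, 3.0]])
y = np.array([0, 0, 1, 1])
targets = np.array([[1], [0], [3], [2]])               # one target neighbor each

pull_e, push_e = lmnn_terms(X, y, np.eye(2), targets)
pull_m, push_m = lmnn_terms(X, y, np.diag([16.0, 1.0]), targets)
```

In this example the stretched matrix leaves the first term unchanged and drives the second term to zero, because every differently labeled point ends up more than one unit beyond the target neighbor.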

The final optimization problem becomes:

- [math]\displaystyle{ \min_{\mathbf{M}} \sum_{i,j\in N_i} d(\vec x_i,\vec x_j) + \lambda\sum_{i,j,l} \xi_{ijl} }[/math]

- [math]\displaystyle{ \forall_{i,j \in N_i,l, y_l\neq y_i} }[/math]

- [math]\displaystyle{ d(\vec x_i,\vec x_j)+1-d(\vec x_i,\vec x_l)\leq \xi_{ijl} }[/math]

- [math]\displaystyle{ \xi_{ijl}\geq 0 }[/math]

- [math]\displaystyle{ \mathbf{M}\succeq 0 }[/math]

The hyperparameter [math]\displaystyle{ \lambda\gt 0 }[/math] is a positive constant (typically set through cross-validation). Here the variables [math]\displaystyle{ \xi_{ijl} }[/math] (together with two types of constraints) replace the hinge-loss term in the cost function. They play a role similar to slack variables, absorbing the extent of violations of the impostor constraints. The last constraint ensures that [math]\displaystyle{ \mathbf{M} }[/math] is positive semi-definite.

The optimization problem is an instance of semidefinite programming (SDP). Although SDPs tend to suffer from high computational complexity, this particular SDP instance can be solved very efficiently due to the underlying geometric properties of the problem. In particular, most impostor constraints are naturally satisfied and do not need to be enforced during runtime (i.e. the set of variables [math]\displaystyle{ \xi_{ijl} }[/math] is sparse). A particularly well-suited solver technique is the working set method, which keeps a small set of constraints that are actively enforced and monitors the remaining (likely satisfied) constraints only occasionally to ensure correctness.
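One generic way to maintain the positive semi-definite constraint in a simple iterative scheme is to project the current matrix back onto the PSD cone after each update. The sketch below shows only that projection step. It is a standard technique named here as an assumption, not the working set solver described above, and the function name `project_psd` is hypothetical.

```python
import numpy as np

def project_psd(M):
    """Project a square matrix onto the cone of symmetric positive
    semi-definite matrices (nearest such matrix in the Frobenius norm)."""
    M_sym = (M + M.T) / 2.0                       # symmetrize first
    eigvals, eigvecs = np.linalg.eigh(M_sym)      # real eigendecomposition
    clipped = np.clip(eigvals, 0.0, None)         # zero out negative eigenvalues
    return (eigvecs * clipped) @ eigvecs.T

# A symmetric matrix with one negative eigenvalue (assumed values).
M_candidate = np.array([[2.0, 0.0],
                        [0.0, -1.0]])
M_psd = project_psd(M_candidate)
```

Clipping negative eigenvalues removes the directions along which the distance would become negative, so the projected matrix again defines a valid pseudometric.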

Extensions and efficient solvers

LMNN was extended to multiple local metrics in a 2008 paper.[2] This extension significantly reduces the classification error, but involves a more expensive optimization problem. In their 2009 publication in the Journal of Machine Learning Research,[3] Weinberger and Saul derive an efficient solver for the semidefinite program. It can learn a metric for the MNIST handwritten digit data set in several hours, involving billions of pairwise constraints. An open source Matlab implementation is freely available at the authors' web page.

Kumar et al.[4] extended the algorithm to incorporate local invariances to multivariate polynomial transformations and improved regularization.

See also

- Similarity learning

- Linear discriminant analysis

- Learning vector quantization

- Pseudometric space

- Nearest neighbor search

- Cluster analysis

- Data classification

- Data mining

- Machine learning

- Pattern recognition

- Predictive analytics

- Dimension reduction

- Neighbourhood components analysis

References

- Weinberger, K. Q.; Blitzer, J. C.; Saul, L. K. (2006). "Distance Metric Learning for Large Margin Nearest Neighbor Classification". Advances in Neural Information Processing Systems 18: 1473–1480. http://papers.nips.cc/paper/2795-distance-metric-learning-for-large-margin-nearest-neighbor-classification.

- Weinberger, K. Q.; Saul, L. K. (2008). "Fast solvers and efficient implementations for distance metric learning". Proceedings of International Conference on Machine Learning: 1160–1167. http://research.yahoo.net/files/icml2008a.pdf. Retrieved 2010-07-14.

- Weinberger, K. Q.; Saul, L. K. (2009). "Distance Metric Learning for Large Margin Classification". Journal of Machine Learning Research 10: 207–244. http://www.jmlr.org/papers/volume10/weinberger09a/weinberger09a.pdf.

- Kumar, M. P.; Torr, P. H. S.; Zisserman, A. (2007). "An Invariant Large Margin Nearest Neighbour Classifier". 2007 IEEE 11th International Conference on Computer Vision. pp. 1–8. doi:10.1109/ICCV.2007.4409041. ISBN 978-1-4244-1630-1.
