Automated Pain Recognition

Automated Pain Recognition (APR) is both a method for objectively measuring pain and an interdisciplinary research area that draws on medicine, psychology, psychobiology, and computer science. The focus is on computer-aided, objective recognition of pain based on machine learning.[1][2] Automated pain recognition is intended to enable valid, reliable detection and monitoring of pain in people who are unable to communicate verbally. The underlying machine learning models are trained and validated in advance on unimodal or multimodal body signals. Signals used to detect pain may include facial expressions or gestures and may also be of a (psycho-)physiological or paralinguistic nature. To date, the focus has been on identifying pain intensity, but forward-looking efforts are also being made to recognize the quality, site, and temporal course of pain.[citation needed]

However, the clinical implementation of this approach is a controversial topic in the field of pain research. Critics of automated pain recognition argue that pain diagnosis can only be performed subjectively by humans.

Background

Pain diagnosis under conditions where verbal reporting is restricted, such as in verbally and/or cognitively impaired people or in patients who are sedated or mechanically ventilated, is based on behavioral observations by trained professionals.[3] However, all known observation procedures (e.g., the Zurich Observation Pain Assessment (ZOPA)[4] and the Pain Assessment in Advanced Dementia Scale (PAINAD)) require a great deal of specialist expertise. These procedures can be made more difficult by perception- and interpretation-related misjudgments on the part of the observer. Because the tools differ in design, methodology, evaluation sample, and conceptualization of the phenomenon of pain, it is difficult to compare their quality criteria. Even if trained personnel could theoretically record pain intensity several times a day using observation instruments, it would not be possible to measure it every minute or second. In this respect, the goal of automated pain recognition is to use valid, robust pain response patterns that can be recorded multimodally as the basis for a temporally dynamic, high-resolution, automated pain intensity recognition system.[citation needed]

Procedure

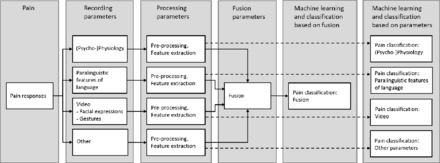

For automated pain recognition, pain-relevant parameters are usually recorded using non-invasive sensor technology, which captures data on the (physical) responses of the person in pain. This can be achieved with camera technology that captures facial expressions, gestures, or posture, while audio sensors record paralinguistic features. (Psycho-)physiological information such as muscle tone and heart rate can be collected via biopotential sensors (electrodes).[5]

Pain recognition requires the extraction of meaningful characteristics or patterns from the data collected. This is achieved using machine learning techniques that are able to provide an assessment of the pain after training (learning), e.g., "no pain," "mild pain," or "severe pain."[citation needed]
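The feature-extraction step can be sketched as follows. This is a minimal illustration, assuming a single window of samples from one sensor; the function name `extract_features`, the chosen statistics, and the sample values are hypothetical and not taken from any particular APR system.

```python
from statistics import mean, pstdev


def extract_features(window):
    """Summarize one signal window (a list of samples) as a dict of features."""
    if not window:
        raise ValueError("window must contain at least one sample")
    return {
        "mean": mean(window),                # average signal level
        "std": pstdev(window),               # variability within the window
        "range": max(window) - min(window),  # peak-to-peak amplitude
        # average change per sample between first and last value
        "slope": (window[-1] - window[0]) / max(len(window) - 1, 1),
    }


# Hypothetical skin-conductance samples (arbitrary units) from one window.
features = extract_features([2.0, 2.5, 3.0, 3.5, 4.0])
print(features)
```

Feature vectors of this kind, computed per time window and per modality, would then serve as input to a trained classifier that outputs a label such as "no pain" or "severe pain".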

Parameters

Although the phenomenon of pain comprises different components (sensory-discriminative, affective (emotional), cognitive, autonomic (vegetative), and (psycho-)motor),[6] automated pain recognition currently relies on the measurable parameters of pain responses. These can be divided roughly into the two main categories of "physiological responses" and "behavioral responses".

Physiological responses

In humans, pain almost always initiates autonomic nervous processes that are reflected measurably in various physiological signals.[7]

Physiological signals

Measurements can include electrodermal activity (EDA, also known as skin conductance), electromyography (EMG), electrocardiography (ECG), blood volume pulse (BVP), electroencephalography (EEG), respiration, and body temperature.[8][9] Most of these signals reflect regulatory mechanisms of the sympathetic and parasympathetic nervous systems. Physiological signals are mainly recorded using special non-invasive surface electrodes (for EDA, EMG, ECG, and EEG), a blood volume pulse sensor (BVP), a respiratory belt (respiration), and a thermal sensor (body temperature). Endocrinological and immunological parameters can also be recorded, but this requires measures that are somewhat invasive (e.g., blood sampling).[citation needed]

Behavioral responses

Behavioral responses to pain fulfil two functions: protection of the body (e.g., through protective reflexes) and external communication of the pain (e.g., as a cry for help). The responses are particularly evident in facial expressions, gestures, and paralinguistic features.

Facial expressions

Behavioral signals captured comprise facial expression patterns (expressive behavior), which are measured with the aid of video signals. Facial expression recognition is based on the everyday clinical observation that pain often manifests itself in the patient's facial expressions but that this is not necessarily always the case, since facial expressions can be inhibited through self-control. Despite the possibility that facial expressions may be influenced consciously, facial expression behavior represents an essential source of information for pain diagnosis and is thus also a source of information for automatic pain recognition. One advantage of video-based facial expression recognition is the contact-free measurement of the face, provided that it can be captured on video, which is not possible in every position (e.g., lying face down) or may be limited by bandages covering the face. Facial expression analysis relies on rapid, spontaneous, and temporary changes in neuromuscular activity that lead to visually detectable changes in the face.[citation needed]

Gestures

Gestures are also captured predominantly using non-contact camera technology. Motor pain responses vary and are strongly dependent on the type and cause of the pain. They range from abrupt protective reflexes (e.g., spontaneous retraction of extremities or doubling up) to agitation (pathological restlessness) and avoidance behavior (hesitant, cautious movements).

Paralinguistic features of language

Among other things, pain leads to nonverbal linguistic behavior that manifests itself in sounds such as sighing, gasping, moaning, whining, etc. Paralinguistic features are usually recorded using highly sensitive microphones.

Algorithms

After the recording, pre-processing (e.g., filtering), and extraction of relevant features, an optional information fusion can be performed. During this process, modalities from different signal sources are merged to generate new or more precise knowledge.[citation needed]
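Two common fusion strategies can be sketched as follows: feature-level (early) fusion, which concatenates the feature vectors of all modalities before classification, and decision-level (late) fusion, which combines the outputs of per-modality classifiers. The function names, feature values, and class scores below are assumptions for illustration only.

```python
def fuse_early(*feature_vectors):
    """Feature-level (early) fusion: concatenate per-modality feature vectors."""
    fused = []
    for vec in feature_vectors:
        fused.extend(vec)
    return fused


def fuse_late(*score_dicts):
    """Decision-level (late) fusion: average per-class scores across modalities."""
    classes = score_dicts[0].keys()
    return {c: sum(s[c] for s in score_dicts) / len(score_dicts) for c in classes}


# Hypothetical feature vectors for the same time window.
video_feats = [0.12, 0.80]   # e.g., facial expression descriptors
eda_feats = [3.0, 0.7, 2.0]  # e.g., skin conductance statistics
fused_features = fuse_early(video_feats, eda_feats)
print(fused_features)

# Hypothetical class scores from two per-modality classifiers.
video_scores = {"no pain": 0.2, "pain": 0.8}
eda_scores = {"no pain": 0.6, "pain": 0.4}
fused_scores = fuse_late(video_scores, eda_scores)
print(fused_scores)
```

In practice, features from different sensors usually have different scales, so early fusion is typically preceded by a normalization step estimated on the training data; that step is omitted here for brevity.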

The pain is classified using machine learning processes. The method chosen has a significant influence on the recognition rate and depends greatly on the quality and granularity of the underlying data. Similar to the field of affective computing,[10] the following classifiers are currently being used:

Support Vector Machine (SVM): The goal of an SVM is to find the optimal hyperplane that separates two (or more) classes with the largest possible margin, i.e., the greatest distance to the nearest training patterns of each class. The hyperplane acts as a decision function for classifying an unknown pattern.
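How a hyperplane acts as a decision function can be illustrated for the linear, two-class case. The sketch below assumes that the weight vector `w` and offset `b` have already been found by training; the values used are hypothetical, and the training step itself (finding the maximum-margin hyperplane) is not shown.

```python
import math


def decision_value(w, b, x):
    """Signed score w.x + b; its sign tells on which side of the hyperplane x lies."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def classify(w, b, x):
    """Return +1 or -1 depending on the side of the hyperplane."""
    return 1 if decision_value(w, b, x) >= 0 else -1


def distance_to_hyperplane(w, b, x):
    """Geometric distance of x from the hyperplane w.x + b = 0."""
    norm = math.sqrt(sum(wi * wi for wi in w))
    return abs(decision_value(w, b, x)) / norm


# Hypothetical parameters, standing in for the result of SVM training.
w, b = [3.0, 4.0], -5.0
print(classify(w, b, [3.0, 4.0]))
print(classify(w, b, [0.0, 0.0]))
print(distance_to_hyperplane(w, b, [0.0, 0.0]))
```

Training an SVM amounts to choosing `w` and `b` so that the smallest such distance over all training patterns is as large as possible.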

Random Forest (RF): RF is based on the composition of random, uncorrelated decision trees. An unknown pattern is judged individually by each tree and assigned to a class. The final classification of the patterns by the RF is then based on a majority decision.
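The majority decision can be sketched as follows. The three hand-written one-split "trees" and their thresholds are hypothetical placeholders; in a real random forest, each tree would be learned from a random sample of the training data and a random subset of features.

```python
from collections import Counter


def majority_vote(trees, x):
    """Let every tree classify x and return the most frequent label."""
    votes = Counter(tree(x) for tree in trees)
    return votes.most_common(1)[0][0]


# Hypothetical stand-ins for trained decision trees (feature names are assumptions).
trees = [
    lambda x: "pain" if x["eda_range"] > 1.0 else "no pain",
    lambda x: "pain" if x["heart_rate"] > 90 else "no pain",
    lambda x: "pain" if x["emg_std"] > 0.5 else "no pain",
]

sample = {"eda_range": 1.8, "heart_rate": 85, "emg_std": 0.9}
print(majority_vote(trees, sample))
```

With two classes, using an odd number of trees avoids tied votes.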

k-Nearest Neighbors (k-NN): The k-NN algorithm assigns an unknown object the class label that occurs most frequently among its k nearest neighbors. The neighbors are determined using a selected similarity or distance measure (e.g., Euclidean distance, Jaccard coefficient, etc.).
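A minimal k-NN sketch using Euclidean distance is shown below. The two-dimensional feature vectors and labels are invented for illustration, and `math.dist` requires Python 3.8 or later.

```python
import math
from collections import Counter


def knn_classify(train, query, k=3):
    """Classify query by the majority label of its k nearest training samples.

    train is a list of (feature_vector, label) pairs.
    """
    neighbors = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    labels = Counter(label for _, label in neighbors)
    return labels.most_common(1)[0][0]


# Hypothetical training set of normalized feature vectors.
train = [
    ([0.1, 0.2], "no pain"),
    ([0.2, 0.1], "no pain"),
    ([0.3, 0.3], "no pain"),
    ([0.8, 0.9], "pain"),
    ([0.9, 0.8], "pain"),
    ([0.7, 0.7], "pain"),
]
print(knn_classify(train, [0.75, 0.8], k=3))
```

Because the method compares raw distances, features are usually brought to a common scale beforehand so that no single feature dominates the distance.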

Artificial neural networks (ANNs): ANNs are inspired by biological neural networks and model their organizational principles and processes in a very simplified manner. Class patterns are learned by adjusting the weights of the individual neuronal connections.
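Learning by weight adjustment can be illustrated with the simplest possible case, a single artificial neuron trained with the perceptron rule. This is a sketch under stated assumptions: the data, learning rate, and number of epochs are hypothetical, and networks used in practice have many neurons and are trained with gradient-based methods.

```python
def predict(weights, bias, x):
    """Fire (1) if the weighted input sum exceeds zero, otherwise stay silent (0)."""
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples, epochs=100, lr=0.1):
    """Adjust weights and bias whenever a training sample is misclassified."""
    n_inputs = len(samples[0][0])
    weights, bias = [0.0] * n_inputs, 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(weights, bias, x)  # -1, 0, or +1
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


# Hypothetical, linearly separable samples: (features, label) with 1 = pain.
samples = [([0.1, 0.2], 0), ([0.2, 0.1], 0), ([0.8, 0.9], 1), ([0.9, 0.7], 1)]
weights, bias = train_perceptron(samples)
print([predict(weights, bias, x) for x, _ in samples])
```

The perceptron rule only converges when the classes are linearly separable, which is one reason multi-layer networks are used for more complex patterns.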

Databases

In order to classify pain in a valid manner, it is necessary to create representative, reliable, and valid pain databases that are available to the machine learner for training. An ideal database would be sufficiently large and would consist of natural (not experimental), high-quality pain responses. However, natural responses are difficult to record and can only be obtained to a limited extent; in most cases they are characterized by suboptimal quality. The databases currently available therefore contain experimental or quasi-experimental pain responses, and each database is based on a different pain model. The following list shows a selection of the most relevant pain databases (last updated: April 2020):[11]

- UNBC-McMaster Shoulder Pain

- BioVid Heat Pain

- EmoPain

- SenseEmotion

- X-ITE Pain

References

- ↑ Südwest Presse Online-Dienste GmbH (2017-04-11). "Forschung: Schmerzen messbar machen" (in German). https://www.swp.de/suedwesten/staedte/ulm/schmerzen-messbar-machen-23426123.html.

- ↑ "Künstliche Intelligenz erkennt den Schmerz" (in German). 2 March 2017. https://www.aerztezeitung.de/Medizin/Kuenstliche-Intelligenz-erkennt-den-Schmerz-296050.html.

- ↑ Fundamentals of pain medicine. Cheng, Jianguo; Rosenquist, Richard W. Cham, Switzerland. 8 February 2018. ISBN 978-3-319-64922-1. OCLC 1023425599.

- ↑ Elisabeth Handel: Praxishandbuch ZOPA: Schmerzeinschätzung bei Patienten mit kognitiven und/oder Bewusstseinsbeeinträchtigungen. Huber, Bern 2010, ISBN 978-3-456-84785-6.

- ↑ Anbarjafari, Gholamreza (2018). Machine learning for face, emotion, and pain recognition. Gorbova, Jelena; Hammer, Rain Eric; Rasti, Pejman; Noroozi, Fatemeh; Society of Photo-optical Instrumentation Engineers. Bellingham, Washington. ISBN 978-1-5106-1986-9. OCLC 1035460960.

- ↑ Henrik Kessler (2015) (in German), Kurzlehrbuch Medizinische Psychologie und Soziologie (3 ed.), Stuttgart/New York: Thieme, p. 34, ISBN 978-3-13-136423-4

- ↑ Birbaumer, Niels (2006). Biologische Psychologie: mit 41 Tabellen. Schmidt, Robert F. (6th, completely revised and expanded ed.). Heidelberg: Springer. ISBN 978-3-540-25460-7. OCLC 162267511.

- ↑ S. Gruss et al.: Pain Intensity Recognition Rates via Biopotential Feature Patterns with Support Vector Machines. In: PLoS One. Vol. 10, No. 10, 2015, pp. 1–14, doi:10.1371/journal.pone.0140330.

- ↑ S. Walter et al.: Automatic pain quantification using autonomic parameters. In: Psychol. Neurosci. Vol. 7, No. 3, 2014, pp. 363–380, doi:10.3922/j.psns.2014.041.

- ↑ Picard, Rosalind W. (2000). Affective computing (1st MIT Press pbk. ed.). Cambridge, Mass.: MIT Press. ISBN 0-262-66115-2. OCLC 45432790.

- ↑ Werner, Philipp; Lopez-Martinez, Daniel; Walter, Steffen; Al-Hamadi, Ayoub; Gruss, Sascha; Picard, Rosalind (2019). "Automatic Recognition Methods Supporting Pain Assessment: A Survey". IEEE Transactions on Affective Computing 13: 530–552. doi:10.1109/TAFFC.2019.2946774. ISSN 1949-3045.
