Skellam distribution


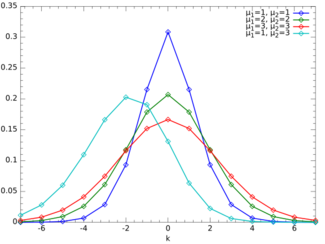

Probability mass function: examples of the probability mass function for the Skellam distribution. The horizontal axis is the index k. (The function is only defined at integer values of k; the connecting lines do not indicate continuity.)

| Property | Value |
|---|---|
| Parameters | [math]\displaystyle{ \mu_1\ge 0,~~\mu_2\ge 0 }[/math] |
| Support | [math]\displaystyle{ k \in \{\ldots, -2,-1,0,1,2,\ldots\} }[/math] |
| pmf | [math]\displaystyle{ e^{-(\mu_1\!+\!\mu_2)} \left(\frac{\mu_1}{\mu_2}\right)^{k/2}\!\!I_{k}(2\sqrt{\mu_1\mu_2}) }[/math] |
| Mean | [math]\displaystyle{ \mu_1-\mu_2\, }[/math] |
| Median | N/A |
| Variance | [math]\displaystyle{ \mu_1+\mu_2\, }[/math] |
| Skewness | [math]\displaystyle{ \frac{\mu_1-\mu_2}{(\mu_1+\mu_2)^{3/2}} }[/math] |
| Excess kurtosis | [math]\displaystyle{ \frac{1}{\mu_1+\mu_2} }[/math] |
| MGF | [math]\displaystyle{ e^{-(\mu_1+\mu_2)+\mu_1e^t+\mu_2e^{-t}} }[/math] |
| CF | [math]\displaystyle{ e^{-(\mu_1+\mu_2)+\mu_1e^{it}+\mu_2e^{-it}} }[/math] |

The Skellam distribution is the discrete probability distribution of the difference [math]\displaystyle{ N_1-N_2 }[/math] of two statistically independent random variables [math]\displaystyle{ N_1 }[/math] and [math]\displaystyle{ N_2 }[/math], each Poisson-distributed with respective expected values [math]\displaystyle{ \mu_1 }[/math] and [math]\displaystyle{ \mu_2 }[/math]. It is useful for describing the statistics of the difference of two images with simple photon noise, as well as the point-spread distribution in sports where all scored points are equal, such as baseball, hockey and soccer.

The distribution also applies to a special case of the difference of dependent Poisson random variables, but only the obvious case in which the two variables share a common additive random contribution that is cancelled by the differencing; see Karlis & Ntzoufras (2003) for details and an application.

The probability mass function for the Skellam distribution for a difference [math]\displaystyle{ K=N_1-N_2 }[/math] between two independent Poisson-distributed random variables with means [math]\displaystyle{ \mu_1 }[/math] and [math]\displaystyle{ \mu_2 }[/math] is given by:

- [math]\displaystyle{ p(k;\mu_1,\mu_2) = \Pr\{K=k\} = e^{-(\mu_1+\mu_2)} \left({\mu_1\over\mu_2}\right)^{k/2}I_{k}(2\sqrt{\mu_1\mu_2}) }[/math]

where [math]\displaystyle{ I_k(z) }[/math] is the modified Bessel function of the first kind. Since k is an integer, [math]\displaystyle{ I_k(z)=I_{|k|}(z) }[/math].
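As a minimal sketch of how this closed form can be evaluated, the code below assumes only the Python standard library, so the Bessel function is summed from its power series rather than taken from a special-function library. The helper names `bessel_i` and `skellam_pmf` are hypothetical, and the approach is meant for moderate means (very large arguments would overflow the intermediate terms).

```python
import math


def bessel_i(n: int, z: float) -> float:
    """I_n(z) for integer n and z >= 0, summed from its power series.

    I_n(z) = sum_{m>=0} (z/2)^(2m+n) / (m! (m+n)!), and I_{-n}(z) = I_n(z) for integer n.
    """
    n = abs(n)
    if z == 0.0:
        return 1.0 if n == 0 else 0.0
    log_half_z = math.log(z / 2.0)
    total, m = 0.0, 0
    while True:
        # build each term in log space so the factorials never overflow
        term = math.exp((2 * m + n) * log_half_z
                        - math.lgamma(m + 1) - math.lgamma(m + n + 1))
        total += term
        # past m = z/2 the terms only shrink, so stop once they are negligible
        if m > z / 2 and term <= 1e-17 * total:
            return total
        m += 1


def skellam_pmf(k: int, mu1: float, mu2: float) -> float:
    """p(k; mu1, mu2) = e^{-(mu1+mu2)} (mu1/mu2)^(k/2) I_k(2 sqrt(mu1 mu2)), for mu1, mu2 > 0."""
    if mu1 <= 0 or mu2 <= 0:
        raise ValueError("the Bessel form needs mu1 > 0 and mu2 > 0")
    return (math.exp(-(mu1 + mu2)) * (mu1 / mu2) ** (k / 2)
            * bessel_i(k, 2.0 * math.sqrt(mu1 * mu2)))


print(skellam_pmf(0, 1.0, 1.0))  # e^{-2} I_0(2), roughly 0.3085
```

Using the symmetry [math]\displaystyle{ I_{-k}=I_k }[/math] inside `bessel_i` means negative k needs no special handling beyond the [math]\displaystyle{ (\mu_1/\mu_2)^{k/2} }[/math] prefactor.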

Derivation

The probability mass function of a Poisson-distributed random variable with mean μ is given by

- [math]\displaystyle{ p(k;\mu)={\mu^k\over k!}e^{-\mu}.\, }[/math]

for [math]\displaystyle{ k \ge 0 }[/math] (and zero otherwise). The Skellam probability mass function for the difference of two independent counts [math]\displaystyle{ K=N_1-N_2 }[/math] is the convolution of two Poisson distributions (Skellam, 1946):

- [math]\displaystyle{ \begin{align} p(k;\mu_1,\mu_2) & =\sum_{n=-\infty}^\infty p(k+n;\mu_1)p(n;\mu_2) \\ & =e^{-(\mu_1+\mu_2)}\sum_{n=\max(0,-k)}^\infty {{\mu_1^{k+n}\mu_2^n}\over{n!\,(k+n)!}} \end{align} }[/math]

Since the Poisson distribution is zero for negative values of the count [math]\displaystyle{ (p(N\lt 0;\mu)=0) }[/math], the second sum is only taken for those terms where [math]\displaystyle{ n\ge0 }[/math] and [math]\displaystyle{ n+k\ge0 }[/math]. It can be shown that the above sum implies that

- [math]\displaystyle{ \frac{p(k;\mu_1,\mu_2)}{p(-k;\mu_1,\mu_2)}=\left(\frac{\mu_1}{\mu_2}\right)^k }[/math]

so that:

- [math]\displaystyle{ p(k;\mu_1,\mu_2)= e^{-(\mu_1+\mu_2)} \left({\mu_1\over\mu_2}\right)^{k/2}I_{|k|}(2\sqrt{\mu_1\mu_2}) }[/math]

where [math]\displaystyle{ I_k(z) }[/math] is the modified Bessel function of the first kind. The special case [math]\displaystyle{ \mu_1=\mu_2(=\mu) }[/math] is given by Irwin (1937):

- [math]\displaystyle{ p\left(k;\mu,\mu\right) = e^{-2\mu}I_{|k|}(2\mu). }[/math]
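The convolution sum and the two identities derived from it can be checked numerically. The sketch below assumes only the Python standard library; the helper names `skellam_conv` and `irwin` and the truncation length `n_terms` are illustrative choices, not part of the original derivation. It evaluates the second line of the convolution sum term by term, then compares p(k)/p(-k) with (μ1/μ2)^k and, for equal means, the direct sum with Irwin's form.

```python
import math


def skellam_conv(k: int, mu1: float, mu2: float, n_terms: int = 400) -> float:
    """e^{-(mu1+mu2)} * sum_{n >= max(0,-k)} mu1^(k+n) mu2^n / (n! (k+n)!), truncated after n_terms terms.

    Assumes mu1, mu2 > 0 and means small enough that n_terms terms are ample.
    """
    start = max(0, -k)  # terms with n < 0 or n + k < 0 vanish
    total = 0.0
    for n in range(start, start + n_terms):
        log_term = ((k + n) * math.log(mu1) + n * math.log(mu2)
                    - math.lgamma(n + 1) - math.lgamma(k + n + 1))
        total += math.exp(log_term - (mu1 + mu2))
    return total


def irwin(k: int, mu: float, n_terms: int = 400) -> float:
    """Irwin's equal-means form e^{-2 mu} I_|k|(2 mu), with I_|k| taken from its power series."""
    a = abs(k)
    return sum(math.exp((2 * m + a) * math.log(mu) - 2 * mu
                        - math.lgamma(m + 1) - math.lgamma(m + a + 1))
               for m in range(n_terms))


mu1, mu2 = 3.0, 1.5
for k in range(1, 6):
    # ratio identity: p(k) / p(-k) should match (mu1 / mu2)^k = 2^k here
    print(k, skellam_conv(k, mu1, mu2) / skellam_conv(-k, mu1, mu2), (mu1 / mu2) ** k)

for k in range(-3, 4):
    # equal means: direct convolution sum versus Irwin (1937)
    print(k, skellam_conv(k, 2.0, 2.0), irwin(k, 2.0))
```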

Using the limiting values of the modified Bessel function for small arguments, we can recover the Poisson distribution as a special case of the Skellam distribution for [math]\displaystyle{ \mu_2=0 }[/math].
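The small-argument step can be written out explicitly (a worked illustration added here). For fixed integer k and z → 0, the leading term of the power series gives [math]\displaystyle{ I_{|k|}(z)\approx (z/2)^{|k|}/|k|! }[/math]. Substituting [math]\displaystyle{ z=2\sqrt{\mu_1\mu_2} }[/math] and letting [math]\displaystyle{ \mu_2\to 0 }[/math] with [math]\displaystyle{ \mu_1 }[/math] fixed:

- [math]\displaystyle{ k\ge 0:\quad p(k;\mu_1,\mu_2)\approx e^{-(\mu_1+\mu_2)}\left(\frac{\mu_1}{\mu_2}\right)^{k/2}\frac{(\mu_1\mu_2)^{k/2}}{k!} = e^{-(\mu_1+\mu_2)}\frac{\mu_1^k}{k!}\ \longrightarrow\ e^{-\mu_1}\frac{\mu_1^k}{k!}, }[/math]

- [math]\displaystyle{ k\lt 0:\quad p(k;\mu_1,\mu_2)\approx e^{-(\mu_1+\mu_2)}\left(\frac{\mu_1}{\mu_2}\right)^{-|k|/2}\frac{(\mu_1\mu_2)^{|k|/2}}{|k|!} = e^{-(\mu_1+\mu_2)}\frac{\mu_2^{|k|}}{|k|!}\ \longrightarrow\ 0, }[/math]

which is the Poisson probability mass function with mean [math]\displaystyle{ \mu_1 }[/math], extended by zero to negative k.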

Properties

As it is a discrete probability distribution, the Skellam probability mass function is normalized:

- [math]\displaystyle{ \sum_{k=-\infty}^\infty p(k;\mu_1,\mu_2)=1. }[/math]
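The normalization can be illustrated numerically. This sketch uses only the standard library and evaluates the convolution form of the pmf; the helper `total_mass`, the window of 12 standard deviations around the mean, and the parameter pairs are assumptions chosen for illustration, since an infinite sum has to be truncated somewhere.

```python
import math


def skellam_pmf(k: int, mu1: float, mu2: float, n_terms: int = 400) -> float:
    # convolution form of the pmf; assumes mu1, mu2 > 0 and truncates after n_terms terms
    start = max(0, -k)
    return sum(math.exp((k + n) * math.log(mu1) + n * math.log(mu2) - (mu1 + mu2)
                        - math.lgamma(n + 1) - math.lgamma(k + n + 1))
               for n in range(start, start + n_terms))


def total_mass(mu1: float, mu2: float, width: float = 12.0) -> float:
    """Sum of p(k) over mean +/- width standard deviations, a truncation chosen for illustration."""
    mean, sd = mu1 - mu2, math.sqrt(mu1 + mu2)
    lo, hi = math.floor(mean - width * sd), math.ceil(mean + width * sd)
    return sum(skellam_pmf(k, mu1, mu2) for k in range(lo, hi + 1))


for mu1, mu2 in [(0.5, 0.5), (3.0, 1.0), (10.0, 25.0)]:
    print(mu1, mu2, total_mass(mu1, mu2))
```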

The probability generating function (pgf) of a Poisson distribution with mean μ is:

- [math]\displaystyle{ G\left(t;\mu\right)= e^{\mu(t-1)}. }[/math]

It follows that the pgf, [math]\displaystyle{ G(t;\mu_1,\mu_2) }[/math], for a Skellam probability mass function will be:

- [math]\displaystyle{ \begin{align} G(t;\mu_1,\mu_2) & = \sum_{k=-\infty}^\infty p(k;\mu_1,\mu_2)t^k \\[4pt] & = G\left(t;\mu_1\right)G\left(1/t;\mu_2\right) \\[4pt] & = e^{-(\mu_1+\mu_2)+\mu_1 t+\mu_2/t}. \end{align} }[/math]
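To illustrate that the defining series and the closed form agree, the sketch below (standard library only; the helper names `pgf_series` and `pgf_closed`, the truncation `k_max` and the test points t are assumptions for illustration) compares a truncated sum of p(k) t^k with [math]\displaystyle{ e^{-(\mu_1+\mu_2)+\mu_1 t+\mu_2/t} }[/math].

```python
import math


def skellam_pmf(k: int, mu1: float, mu2: float, n_terms: int = 200) -> float:
    # convolution form of the pmf; assumes mu1, mu2 > 0 and truncates after n_terms terms
    start = max(0, -k)
    return sum(math.exp((k + n) * math.log(mu1) + n * math.log(mu2) - (mu1 + mu2)
                        - math.lgamma(n + 1) - math.lgamma(k + n + 1))
               for n in range(start, start + n_terms))


def pgf_series(t: float, mu1: float, mu2: float, k_max: int = 80) -> float:
    """Truncated sum of p(k) t^k over |k| <= k_max (illustrative truncation)."""
    return sum(skellam_pmf(k, mu1, mu2) * t ** k for k in range(-k_max, k_max + 1))


def pgf_closed(t: float, mu1: float, mu2: float) -> float:
    """Closed form G(t) = exp(-(mu1 + mu2) + mu1 t + mu2 / t), for t > 0."""
    return math.exp(-(mu1 + mu2) + mu1 * t + mu2 / t)


for t in (0.5, 1.0, 2.0):
    print(t, pgf_series(t, 2.0, 1.5), pgf_closed(t, 2.0, 1.5))
```

At t = 1 both values reduce to the total probability, 1.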

Notice that the form of the probability-generating function implies that the sum or difference of any number of independent Skellam-distributed variables is again Skellam-distributed: the product of pgfs of this form (with [math]\displaystyle{ t }[/math] replaced by [math]\displaystyle{ 1/t }[/math] for a subtracted variable) again has the form [math]\displaystyle{ e^{-(\mu_1+\mu_2)+\mu_1 t+\mu_2/t} }[/math]. It is sometimes claimed that any linear combination of two Skellam-distributed variables is again Skellam-distributed, but this is not true: any multiplier other than [math]\displaystyle{ \pm 1 }[/math] would change the support of the distribution and alter the pattern of moments in a way that no Skellam distribution can satisfy.
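Closure under sums can be checked directly by convolving two Skellam probability mass functions. In this sketch (standard library only; `pmf_of_sum`, the truncated support and the parameter values are illustrative assumptions), X ~ Skellam(2, 1) and Y ~ Skellam(0.5, 3), so the pgf argument predicts X + Y ~ Skellam(2.5, 4).

```python
import math


def skellam_pmf(k: int, mu1: float, mu2: float, n_terms: int = 200) -> float:
    # convolution form of the pmf; assumes mu1, mu2 > 0 and truncates after n_terms terms
    start = max(0, -k)
    return sum(math.exp((k + n) * math.log(mu1) + n * math.log(mu2) - (mu1 + mu2)
                        - math.lgamma(n + 1) - math.lgamma(k + n + 1))
               for n in range(start, start + n_terms))


def pmf_of_sum(k: int, a, b, support=range(-40, 41)) -> float:
    """P(X + Y = k) for independent X ~ Skellam(*a), Y ~ Skellam(*b), by direct convolution."""
    return sum(skellam_pmf(j, *a) * skellam_pmf(k - j, *b) for j in support)


a, b = (2.0, 1.0), (0.5, 3.0)
for k in range(-4, 5):
    # the pgf argument predicts X + Y ~ Skellam(a1 + b1, a2 + b2) = Skellam(2.5, 4.0)
    print(k, pmf_of_sum(k, a, b), skellam_pmf(k, a[0] + b[0], a[1] + b[1]))
```

For a difference, note that if Y ~ Skellam(μ1, μ2) then -Y ~ Skellam(μ2, μ1), since its pgf is G(1/t); so X - Y can be handled the same way after swapping the parameters of Y.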

The moment-generating function is given by:

- [math]\displaystyle{ M\left(t;\mu_1,\mu_2\right) = G(e^t;\mu_1,\mu_2) = \sum_{k=0}^\infty { t^k \over k!}\,m_k }[/math]

which yields the raw moments [math]\displaystyle{ m_k }[/math]. Define:

- [math]\displaystyle{ \Delta\ \stackrel{\mathrm{def}}{=}\ \mu_1-\mu_2\, }[/math]

- [math]\displaystyle{ \mu\ \stackrel{\mathrm{def}}{=}\ (\mu_1+\mu_2)/2.\, }[/math]

Then the raw moments [math]\displaystyle{ m_k }[/math] are

- [math]\displaystyle{ m_1=\left.\Delta\right.\, }[/math]

- [math]\displaystyle{ m_2=\left.2\mu+\Delta^2\right.\, }[/math]

- [math]\displaystyle{ m_3=\left.\Delta(1+6\mu+\Delta^2)\right.\, }[/math]
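The raw-moment formulas can be checked against sums taken directly over the probability mass function. This is a sketch under stated assumptions: standard library only, a hypothetical `raw_moment` helper, a support truncated to |k| ≤ 60, and the illustrative values μ1 = 4, μ2 = 1.5 (so Δ = 2.5 and μ = 2.75).

```python
import math


def skellam_pmf(k: int, mu1: float, mu2: float, n_terms: int = 200) -> float:
    # convolution form of the pmf; assumes mu1, mu2 > 0 and truncates after n_terms terms
    start = max(0, -k)
    return sum(math.exp((k + n) * math.log(mu1) + n * math.log(mu2) - (mu1 + mu2)
                        - math.lgamma(n + 1) - math.lgamma(k + n + 1))
               for n in range(start, start + n_terms))


def raw_moment(r: int, mu1: float, mu2: float, support=range(-60, 61)) -> float:
    """m_r = sum_k k^r p(k), with the support truncated for illustration."""
    return sum(k ** r * skellam_pmf(k, mu1, mu2) for k in support)


mu1, mu2 = 4.0, 1.5
delta, mu = mu1 - mu2, (mu1 + mu2) / 2
formulas = {1: delta, 2: 2 * mu + delta ** 2, 3: delta * (1 + 6 * mu + delta ** 2)}
for r, expected in formulas.items():
    print(r, raw_moment(r, mu1, mu2), expected)
```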

The central moments [math]\displaystyle{ M_k }[/math] are

- [math]\displaystyle{ M_2=\left.2\mu\right.,\, }[/math]

- [math]\displaystyle{ M_3=\left.\Delta\right.,\, }[/math]

- [math]\displaystyle{ M_4=\left.2\mu+12\mu^2\right..\, }[/math]

The mean, variance, skewness, and excess kurtosis are respectively:

- [math]\displaystyle{ \begin{align} \operatorname E(K) & = \Delta, \\[4pt] \sigma^2 & =2\mu, \\[4pt] \gamma_1 & =\Delta/(2\mu)^{3/2}, \\[4pt] \gamma_2 & = 1/(2\mu). \end{align} }[/math]
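These summary statistics can be recomputed from the central moments of the probability mass function. The sketch below (standard library only; `summary`, the truncated support and the values μ1 = 4, μ2 = 1.5 are illustrative assumptions) compares the numerical mean, variance, skewness and excess kurtosis with Δ, 2μ, Δ/(2μ)^{3/2} and 1/(2μ); the last equals the infobox value 1/(μ1+μ2) and follows from M4/M2² - 3 = (2μ+12μ²)/(4μ²) - 3.

```python
import math


def skellam_pmf(k: int, mu1: float, mu2: float, n_terms: int = 200) -> float:
    # convolution form of the pmf; assumes mu1, mu2 > 0 and truncates after n_terms terms
    start = max(0, -k)
    return sum(math.exp((k + n) * math.log(mu1) + n * math.log(mu2) - (mu1 + mu2)
                        - math.lgamma(n + 1) - math.lgamma(k + n + 1))
               for n in range(start, start + n_terms))


def summary(mu1: float, mu2: float, support=range(-60, 61)):
    """Mean, variance, skewness and excess kurtosis computed from the (truncated) pmf."""
    p = {k: skellam_pmf(k, mu1, mu2) for k in support}
    mean = sum(k * pk for k, pk in p.items())
    # central moments M_2, M_3, M_4
    c2, c3, c4 = (sum((k - mean) ** r * pk for k, pk in p.items()) for r in (2, 3, 4))
    return mean, c2, c3 / c2 ** 1.5, c4 / c2 ** 2 - 3.0


mu1, mu2 = 4.0, 1.5
delta, mu = mu1 - mu2, (mu1 + mu2) / 2
print(summary(mu1, mu2))
print((delta, 2 * mu, delta / (2 * mu) ** 1.5, 1 / (2 * mu)))
```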

The cumulant-generating function is given by:

- [math]\displaystyle{ K(t;\mu_1,\mu_2)\ \stackrel{\mathrm{def}}{=}\ \ln(M(t;\mu_1,\mu_2)) = \sum_{k=1}^\infty { t^k \over k!}\,\kappa_k }[/math]

which yields the cumulants:

- [math]\displaystyle{ \kappa_{2k}=\left.2\mu\right. }[/math]

- [math]\displaystyle{ \kappa_{2k+1}=\left.\Delta\right. . }[/math]
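The cumulants can be read off in one step (a worked illustration added here): taking the logarithm of the moment-generating function [math]\displaystyle{ M(t;\mu_1,\mu_2)=G(e^t;\mu_1,\mu_2) }[/math] leaves a sum of exponentials, whose Taylor coefficients are the cumulants.

- [math]\displaystyle{ \begin{align} K(t;\mu_1,\mu_2) & = -(\mu_1+\mu_2)+\mu_1e^{t}+\mu_2e^{-t} \\[4pt] & = -(\mu_1+\mu_2)+\sum_{k=0}^\infty \frac{t^k}{k!}\left(\mu_1+(-1)^k\mu_2\right) \\[4pt] & = \sum_{k=1}^\infty \frac{t^k}{k!}\left(\mu_1+(-1)^k\mu_2\right), \end{align} }[/math]

since the k = 0 term of the series cancels the constant. Hence [math]\displaystyle{ \kappa_k=\mu_1+(-1)^k\mu_2 }[/math], which is [math]\displaystyle{ \mu_1+\mu_2=2\mu }[/math] for even k and [math]\displaystyle{ \mu_1-\mu_2=\Delta }[/math] for odd k.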

For the special case when μ1 = μ2, an asymptotic expansion of the modified Bessel function of the first kind yields for large μ:

- [math]\displaystyle{ p(k;\mu,\mu)\sim {1\over\sqrt{4\pi\mu}}\left[1+\sum_{n=1}^\infty (-1)^n{\{4k^2-1^2\}\{4k^2-3^2\}\cdots\{4k^2-(2n-1)^2\} \over n!\,2^{3n}\,(2\mu)^n}\right]. }[/math]

(Abramowitz & Stegun 1972, p. 377). Also, for this special case, when k is also large and of the order of the square root of 2μ, the distribution tends to a normal distribution:

- [math]\displaystyle{ p(k;\mu,\mu)\sim {e^{-k^2/4\mu}\over\sqrt{4\pi\mu}}. }[/math]

These special results can easily be extended to the more general case of different means.
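The quality of both approximations can be illustrated for a moderately large mean. In this sketch (standard library only; the helper names, the choice μ = 50 and the truncation after six correction terms are assumptions for illustration), the exact [math]\displaystyle{ e^{-2\mu}I_{|k|}(2\mu) }[/math] is summed from the Bessel series and compared with the asymptotic expansion and the normal limit quoted above.

```python
import math


def skellam_equal_exact(k: int, mu: float, n_terms: int = 600) -> float:
    """p(k; mu, mu) = e^{-2 mu} I_|k|(2 mu), summed from the power series in log space."""
    a = abs(k)
    return sum(math.exp((2 * m + a) * math.log(mu) - 2 * mu
                        - math.lgamma(m + 1) - math.lgamma(m + a + 1))
               for m in range(n_terms))


def skellam_equal_asymptotic(k: int, mu: float, n_max: int = 6) -> float:
    """Large-mu expansion, truncated after n_max correction terms."""
    total, product = 1.0, 1.0
    for n in range(1, n_max + 1):
        product *= 4 * k * k - (2 * n - 1) ** 2  # {4k^2 - 1^2}...{4k^2 - (2n-1)^2}
        total += (-1) ** n * product / (math.factorial(n) * 2 ** (3 * n) * (2 * mu) ** n)
    return total / math.sqrt(4 * math.pi * mu)


def skellam_equal_normal(k: int, mu: float) -> float:
    """Normal limit e^{-k^2/(4 mu)} / sqrt(4 pi mu)."""
    return math.exp(-k * k / (4 * mu)) / math.sqrt(4 * math.pi * mu)


mu = 50.0
for k in (0, 2, 5, 10):
    print(k, skellam_equal_exact(k, mu), skellam_equal_asymptotic(k, mu),
          skellam_equal_normal(k, mu))
```

Because the expansion is asymptotic rather than convergent, adding more terms helps only up to a point; for small |k| relative to μ a handful of terms is already very accurate.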

Bounds on weight above zero

If [math]\displaystyle{ X \sim \operatorname{Skellam} (\mu_1, \mu_2) }[/math], with [math]\displaystyle{ \mu_1 \lt \mu_2 }[/math], then

- [math]\displaystyle{ \frac{\exp(-(\sqrt{\mu_1} -\sqrt{\mu_2})^2 )}{(\mu_1 + \mu_2)^2} - \frac{e^{-(\mu_1 + \mu_2)}}{2\sqrt{\mu_1 \mu_2}} - \frac{e^{-(\mu_1 + \mu_2)}}{4\mu_1 \mu_2} \leq \Pr\{X \geq 0\} \leq \exp (- (\sqrt{\mu_1} -\sqrt{\mu_2})^2) }[/math]

Details can be found in the article on the Poisson distribution.
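The bounds can be spot-checked numerically for a few parameter pairs with μ1 < μ2. This sketch uses only the standard library; the helpers `prob_nonnegative` and `bounds`, the truncation at k = 100 and the chosen (μ1, μ2) pairs are illustrative assumptions, and a numerical check of a few cases is not a proof.

```python
import math


def skellam_pmf(k: int, mu1: float, mu2: float, n_terms: int = 300) -> float:
    # convolution form of the pmf; assumes mu1, mu2 > 0 and truncates after n_terms terms
    start = max(0, -k)
    return sum(math.exp((k + n) * math.log(mu1) + n * math.log(mu2) - (mu1 + mu2)
                        - math.lgamma(n + 1) - math.lgamma(k + n + 1))
               for n in range(start, start + n_terms))


def prob_nonnegative(mu1: float, mu2: float, k_max: int = 100) -> float:
    """Pr{X >= 0} as the sum of p(k) for 0 <= k <= k_max; k_max is an illustrative truncation."""
    return sum(skellam_pmf(k, mu1, mu2) for k in range(k_max + 1))


def bounds(mu1: float, mu2: float):
    """(lower, upper) bounds on Pr{X >= 0} quoted above, stated for mu1 < mu2."""
    upper = math.exp(-(math.sqrt(mu1) - math.sqrt(mu2)) ** 2)
    tail = math.exp(-(mu1 + mu2))
    lower = (upper / (mu1 + mu2) ** 2
             - tail / (2 * math.sqrt(mu1 * mu2))
             - tail / (4 * mu1 * mu2))
    return lower, upper


for mu1, mu2 in [(0.5, 3.0), (1.0, 4.0), (2.0, 5.0), (5.0, 20.0)]:
    lo, hi = bounds(mu1, mu2)
    print(mu1, mu2, lo, prob_nonnegative(mu1, mu2), hi)
```

The upper bound is the Chernoff-type bound obtained by minimizing the moment-generating function [math]\displaystyle{ e^{-(\mu_1+\mu_2)+\mu_1e^t+\mu_2e^{-t}} }[/math] over t ≥ 0, attained at [math]\displaystyle{ e^t=\sqrt{\mu_2/\mu_1} }[/math].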

References

- Abramowitz, Milton; Stegun, Irene A., eds (June 1965). Handbook of mathematical functions with formulas, graphs, and mathematical tables (Unabridged and unaltered republ. [der Ausg.] 1964, 5. Dover printing ed.). Dover Publications. pp. 374–378. ISBN 0486612724. http://store.doverpublications.com/0486612724.html. Retrieved 27 September 2012.

- Irwin, J. O. (1937) "The frequency distribution of the difference between two independent variates following the same Poisson distribution." Journal of the Royal Statistical Society: Series A, 100 (3), 415–416. JSTOR 2980526

- Karlis, D. and Ntzoufras, I. (2003) "Analysis of sports data using bivariate Poisson models". Journal of the Royal Statistical Society, Series D, 52 (3), 381–393. doi:10.1111/1467-9884.00366

- Karlis, D. and Ntzoufras, I. (2006) "Bayesian analysis of the differences of count data". Statistics in Medicine, 25, 1885–1905.

- Skellam, J. G. (1946) "The frequency distribution of the difference between two Poisson variates belonging to different populations". Journal of the Royal Statistical Society, Series A, 109 (3), 296. JSTOR 2981372
