Glossary of machine vision


The following are common definitions related to the machine vision field.

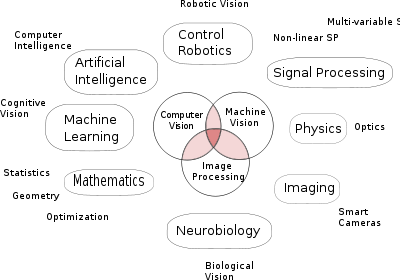

General related fields

0-9

- 1394. FireWire is Apple Inc.'s brand name for the IEEE 1394 interface. It is also known as i.Link (Sony's name) or IEEE 1394 (although the 1394 standard also defines a backplane interface). It is a personal computer (and digital audio/digital video) serial bus interface standard, offering high-speed communications and isochronous real-time data services.

- 1D. One-dimensional.

- 2D computer graphics. The computer-based generation of digital images, mostly from two-dimensional models (such as 2D geometric models, text, and digital images) and by techniques specific to them.

- 3D computer graphics. 3D computer graphics differ from 2D computer graphics in that a three-dimensional representation of geometric data is stored in the computer for the purposes of performing calculations and rendering 2D images. Such images may be for later display or for real-time viewing. Despite these differences, 3D computer graphics rely on many of the same algorithms as 2D computer vector graphics (in the wireframe model) and 2D computer raster graphics (in the final rendered display). In computer graphics software, the distinction between 2D and 3D is occasionally blurred: 2D applications may use 3D techniques to achieve effects such as lighting, and primarily 3D applications may use 2D rendering techniques.

- 3D scanner. A device that analyzes a real-world object or environment to collect data on its shape and possibly its color. The collected data can then be used to construct digital three-dimensional models useful for a wide variety of applications.

A

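- Aberration. Optically, an aberration is a departure of an optical system's behavior from the ideal, which causes light from a single object point to be spread out rather than focused to a single image point. Defocus is a simple example: a translation along the optical axis away from the plane or surface of best focus. In general, defocus reduces the sharpness and contrast of the image, so that what should be sharp, high-contrast edges in a scene become gradual transitions.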

- Algebraic distance or algebraic error. The algebraic distance from a point $x_i$ to a curve or surface defined by $f(x,\beta)=0$ is the value of $f(x_i,\beta)$, i.e. the residual in the least squares problem with data point $(x_i, 0)$ and model function $f$. This term is mainly used in computer vision.[1][2]

- Angular resolution. Describes the resolving power of any image-forming device such as an optical or radio telescope, a microscope, a camera, or an eye.

- Aperture. In the context of photography or machine vision, aperture refers to the diameter of the aperture stop of a photographic lens. The aperture stop can be adjusted to control the amount of light reaching the film or image sensor.

- Aspect ratio (image). The aspect ratio of an image is its displayed width divided by its height (usually expressed as "x:y").

- Automated optical inspection.
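To make the Algebraic distance entry concrete, here is a minimal Python sketch. It assumes a circle written implicitly as $f(x,\beta) = (x-a)^2 + (y-b)^2 - r^2$ with $\beta = (a, b, r)$; the function names are illustrative only. The sketch compares the algebraic distance with the ordinary (geometric) distance to the circle.

```python
import math

def circle_algebraic_distance(point, beta):
    """Algebraic distance f(x_i, beta) for the implicit circle model
    f(x, beta) = (x - a)^2 + (y - b)^2 - r^2, with beta = (a, b, r)."""
    x, y = point
    a, b, r = beta
    return (x - a) ** 2 + (y - b) ** 2 - r ** 2

def circle_geometric_distance(point, beta):
    """Signed Euclidean distance from the point to the circle, for comparison."""
    x, y = point
    a, b, r = beta
    return math.hypot(x - a, y - b) - r

beta = (0.0, 0.0, 1.0)  # unit circle centred at the origin
for p in [(1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]:
    print(p, circle_algebraic_distance(p, beta), circle_geometric_distance(p, beta))
```

Both measures are zero for points on the curve. Away from it they diverge: for this model the algebraic distance grows roughly quadratically with the geometric distance. This is why minimizing algebraic error is not, in general, the same as a geometric least-squares fit.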

B

- Barcode. A barcode (also bar code) is a machine-readable representation of information in a visual format on a surface.

- Blob discovery. Inspecting an image for discrete blobs of connected pixels (e.g. a black hole in a grey object) as image landmarks. These blobs frequently represent optical targets for machining, robotic capture, or manufacturing failure.

- Bitmap. A raster graphics image, digital image, or bitmap, is a data file or structure representing a generally rectangular grid of pixels, or points of color, on a computer monitor, paper, or other display device.
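The Blob discovery entry describes finding discrete groups of connected pixels. One common way to do this is connected-component labeling. The sketch below is a minimal Python version under these assumptions: a binary image stored as a 2D list, 4-connectivity, and a breadth-first flood fill. The function name `label_blobs` is hypothetical.

```python
from collections import deque

def label_blobs(image):
    """Label 4-connected blobs of non-zero pixels in a 2D list of ints.

    Returns (labels, count): labels has the same shape as image, with 0 for
    background and 1..count for each blob.
    """
    rows, cols = len(image), len(image[0])
    labels = [[0] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if image[r][c] and not labels[r][c]:
                count += 1  # unlabeled foreground pixel: start a new blob
                labels[r][c] = count
                queue = deque([(r, c)])
                while queue:  # breadth-first flood fill of this blob
                    y, x = queue.popleft()
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and image[ny][nx] and not labels[ny][nx]):
                            labels[ny][nx] = count
                            queue.append((ny, nx))
    return labels, count

binary = [
    [1, 1, 0, 0],
    [1, 0, 0, 1],
    [0, 0, 1, 1],
]
labels, n = label_blobs(binary)
print(n)
```

With 8-connectivity, diagonal neighbours would also join blobs. Which rule is appropriate depends on the application.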

C

- Camera. A camera is a device used to take pictures, either singly or in sequence. A camera that takes pictures singly is sometimes called a photo camera to distinguish it from a video camera.

- Camera Link. Camera Link is a serial communication protocol designed for computer vision applications, based on National Semiconductor's Channel Link interface. It was designed to standardize scientific and industrial video products, including cameras, cables, and frame grabbers. The standard is maintained and administered by the Automated Imaging Association (AIA), the global machine vision industry's trade group.

- Charge-coupled device. A charge-coupled device (CCD) is a sensor for recording images, consisting of an integrated circuit containing an array of linked, or coupled, capacitors. CCD sensors and cameras have historically tended to be more sensitive, less noisy, and more expensive than CMOS sensors and cameras.

- CIE 1931 Color Space. In the study of the perception of color, one of the first mathematically defined color spaces was the CIE XYZ color space (also known as CIE 1931 color space), created by the International Commission on Illumination (CIE) in 1931.

- CMOS. CMOS ("see-moss"), which stands for complementary metal-oxide-semiconductor, is a major class of integrated circuits. CMOS imaging sensors for machine vision have historically been cheaper than CCD sensors but noisier.

- CoaXPress. CoaXPress (CXP) is an asymmetric high-speed serial communication standard over coaxial cable. CoaXPress combines high-speed image data, low-speed camera control, and power over a single coaxial cable. The standard is maintained by JIIA, the Japan Industrial Imaging Association.

- Color. Color is the perception of the frequency (or wavelength) of light; it can be compared to how pitch (or a musical note) is the perception of the frequency or wavelength of sound.

- Color blindness. Color blindness, also known as color vision deficiency, is in humans the inability to perceive differences between some or all colors that other people can distinguish.

- Color temperature. "White light" is commonly described by its color temperature. A traditional incandescent light source's color temperature is determined by comparing its hue with a theoretical, heated black-body radiator. The lamp's color temperature is the temperature in kelvins at which the heated black-body radiator matches the hue of the lamp.

- Color vision. Color vision is the capacity of an organism or machine to distinguish objects based on the wavelengths (or frequencies) of the light they reflect or emit.

- Computer vision. The study and application of methods that allow computers to "understand" image content.

- Contrast. In visual perception, contrast is the difference in visual properties that makes an object (or its representation in an image) distinguishable from other objects and the background.

- C-Mount. Standardized lens mount for optical lenses on CCD and CMOS cameras. C-mount lenses have a flange focal distance of 17.526 mm, versus 12.526 mm for CS-mount lenses. A C-mount lens can be used on a CS-mount camera through the use of a 5 mm extension adapter. C-mount is a 1" diameter, 32 threads per inch mounting thread (1"-32UN-2A).

- CS-Mount. Same as C-mount, but the flange focal distance is 5 mm shorter. A CS-mount lens cannot be used on a C-mount camera, because it cannot be brought close enough to the sensor to focus. CS-mount is a 1" diameter, 32 threads per inch mounting thread.
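The Contrast entry above defines contrast qualitatively. One common way to quantify it for a grey-level region is Michelson contrast, $(I_{max} - I_{min}) / (I_{max} + I_{min})$. The minimal Python sketch below assumes that measure. Other definitions, such as Weber or RMS contrast, are also in use. The function names are hypothetical.

```python
def michelson_contrast(i_max, i_min):
    """Michelson contrast (I_max - I_min) / (I_max + I_min); lies in [0, 1]
    for non-negative intensities."""
    if i_max + i_min == 0:
        return 0.0  # completely dark region: define contrast as zero
    return (i_max - i_min) / (i_max + i_min)

def region_contrast(pixels):
    """Michelson contrast of a flat list of grey values."""
    return michelson_contrast(max(pixels), min(pixels))

# (200 - 40) / (200 + 40) for this small region
print(region_contrast([200, 180, 40, 60]))
```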

D

- Data matrix. A two-dimensional barcode.

- Depth of field. In optics, particularly photography and machine vision, the depth of field (DOF) is the distance in front of and behind the subject which appears to be in focus.

- Depth perception. Depth perception is the visual ability to perceive the world in three dimensions. It is a trait common to many higher animals. Depth perception allows the beholder to accurately gauge the distance to an object.

- Diaphragm. In optics, a diaphragm is a thin opaque structure with an opening (aperture) at its centre. The role of the diaphragm is to stop the passage of light, except for the light passing through the aperture.
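The Depth of field entry can be made quantitative with the standard thin-lens approximation via the hyperfocal distance $H = f^2/(N c) + f$. Here $f$ is the focal length, $N$ the f-number, and $c$ the acceptable circle-of-confusion diameter. None of these quantities is defined in this glossary, so treat them as assumptions. The minimal Python sketch below uses these formulas. The parameter values are hypothetical.

```python
import math

def depth_of_field(f_mm, n, coc_mm, s_mm):
    """Near and far limits of acceptable sharpness (thin-lens approximation).

    f_mm: focal length, n: f-number, coc_mm: circle-of-confusion diameter,
    s_mm: focus distance. Returns (near_mm, far_mm); far is math.inf when
    the lens is focused at or beyond the hyperfocal distance.
    """
    h = f_mm ** 2 / (n * coc_mm) + f_mm  # hyperfocal distance
    near = s_mm * (h - f_mm) / (h + s_mm - 2 * f_mm)
    far = math.inf if s_mm >= h else s_mm * (h - f_mm) / (h - s_mm)
    return near, far

near, far = depth_of_field(f_mm=25.0, n=8.0, coc_mm=0.01, s_mm=300.0)
print(f"in focus from {near:.1f} mm to {far:.1f} mm, DOF = {far - near:.1f} mm")
```

Stopping the lens down (a larger f-number) shortens the hyperfocal distance and so increases the depth of field. This is a common trade-off against the amount of light reaching the sensor.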

E

- Edge detection. Edge detection marks the points in a digital image at which the luminous intensity changes sharply. It also marks the points of luminous intensity change along the silhouette of an object or spatial-taxon.

- Electromagnetic interference. Electromagnetic interference (EMI), called radio-frequency interference (RFI) when it occurs in the radio frequency range, is electromagnetic radiation that is emitted by electrical circuits carrying rapidly changing signals, as a by-product of their normal operation, and that causes unwanted signals (interference or noise) to be induced in other circuits.
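The Edge detection entry describes marking points where intensity changes sharply. One widely used way to do this is to threshold the gradient magnitude from 3×3 Sobel kernels. The minimal pure-Python sketch below assumes a grey-level image stored as a 2D list. It leaves border pixels at zero for simplicity. The function names and threshold are illustrative.

```python
import math

SOBEL_X = ((-1, 0, 1), (-2, 0, 2), (-1, 0, 1))
SOBEL_Y = ((-1, -2, -1), (0, 0, 0), (1, 2, 1))

def sobel_magnitude(image):
    """Gradient magnitude of a 2D list of grey values using 3x3 Sobel kernels.

    Border pixels are left at 0 for simplicity.
    """
    rows, cols = len(image), len(image[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            gx = gy = 0
            for i in range(3):
                for j in range(3):
                    v = image[r + i - 1][c + j - 1]
                    gx += SOBEL_X[i][j] * v  # horizontal intensity change
                    gy += SOBEL_Y[i][j] * v  # vertical intensity change
            out[r][c] = math.hypot(gx, gy)
    return out

def edge_points(image, threshold):
    """(row, col) positions whose gradient magnitude exceeds threshold."""
    mag = sobel_magnitude(image)
    return [(r, c) for r, row in enumerate(mag)
            for c, m in enumerate(row) if m > threshold]

# A dark-to-bright vertical step between columns 1 and 2.
step = [[0, 0, 100, 100] for _ in range(4)]
print(edge_points(step, threshold=100))
```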

F

- FireWire. FireWire (also known as i.Link or IEEE 1394) is a personal computer (and digital audio/video) serial bus interface standard, offering high-speed communications. It is often used as an interface for industrial cameras.

- Fixed-pattern noise.

- Flat-field correction.

- Frame grabber. An electronic device that captures individual, digital still frames from an analog video signal or a digital video stream.

- Fringe Projection Technique. A 3D data acquisition technique in which a projector displays a fringe pattern on the surface of the measured part and one or more cameras record the image(s).

- Field of view. The field of view (FOV) is the part of the scene that can be seen by the machine vision system at one moment. The field of view depends on the lens of the system and on the working distance between object and camera.

- Focus. An image, or image point or region, is said to be in focus if light from object points is converged about as well as possible in the image; conversely, it is out of focus if light is not well converged. The border between these conditions is sometimes defined via a circle of confusion criterion.
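The Field of view entry says the FOV depends on the lens and the working distance. Under a simple pinhole approximation, which is reasonable when the working distance is much larger than the focal length, the FOV along one axis is roughly sensor size × working distance / focal length. The Python sketch below assumes that model. The sensor, lens, and distance values are hypothetical.

```python
def field_of_view(sensor_mm, focal_mm, working_distance_mm):
    """Approximate FOV along one sensor axis (pinhole model, valid when the
    working distance is much larger than the focal length)."""
    return sensor_mm * working_distance_mm / focal_mm

# Hypothetical setup: 8.8 mm x 6.6 mm sensor, 16 mm lens, 400 mm working distance.
w = field_of_view(8.8, 16.0, 400.0)
h = field_of_view(6.6, 16.0, 400.0)
print(f"FOV ~ {w:.0f} mm x {h:.0f} mm")
```

Dividing the FOV by the number of pixels along the same axis gives an approximate object-space size per pixel. This is often the first estimate when choosing a lens.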

G

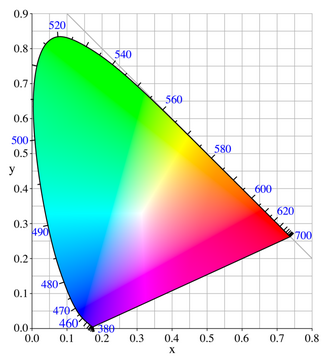

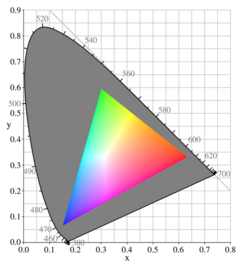

The grayed-out horseshoe shape is the entire range of possible chromaticities. The colored triangle is the gamut available to a typical computer monitor; it does not cover the entire space.

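- Gamut. In color reproduction, including computer graphics and photography, the gamut, or color gamut /ˈɡæmət/, is the complete subset of colors that can be accurately represented in a given circumstance, such as within a given color space or by a certain output device.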

- Grayscale. A grayscale digital image is an image in which the value of each pixel is a single sample. Displayed images of this sort are typically composed of shades of gray, varying from black at the weakest intensity to white at the strongest, though in principle the samples could be displayed as shades of any color, or even coded with various colors for different intensities.

- GUI. A graphical user interface (or GUI, sometimes pronounced "gooey") is a method of interacting with a computer through a metaphor of direct manipulation of graphical images and widgets in addition to text.
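The Grayscale entry describes images with a single sample per pixel. Color images are often converted to this form with a weighted sum of the red, green, and blue channels. The minimal Python sketch below assumes the ITU-R BT.601 luma weights (0.299, 0.587, 0.114). Other standards, such as BT.709, use different weights. The function name is hypothetical.

```python
def to_grayscale(rgb_pixels):
    """Convert (R, G, B) tuples in 0..255 to single grey samples using the
    ITU-R BT.601 luma weights, rounded to the nearest integer."""
    return [round(0.299 * r + 0.587 * g + 0.114 * b) for r, g, b in rgb_pixels]

print(to_grayscale([(255, 255, 255), (255, 0, 0), (0, 255, 0), (0, 0, 255)]))
```

Green receives the largest weight because human vision is most sensitive to it. A saturated green pixel therefore maps to a lighter grey than a saturated blue one.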

H

- Histogram. In statistics, a histogram is a graphical display of tabulated frequencies. A histogram is the graphical version of a table which shows what proportion of cases fall into each of several or many specified categories. The histogram differs from a bar chart in that it is the area of the bar that denotes the value, not the height, a crucial distinction when the categories are not of uniform width (Lancaster, 1974). The categories are usually specified as non-overlapping intervals of some variable. The categories (bars) must be adjacent.

- Histogram (Color). In computer graphics and photography, a color histogram is a representation of the distribution of colors in an image, derived by counting the number of pixels in each of a given set of color ranges in a typically two-dimensional (2D) or three-dimensional (3D) color space. A histogram is a standard statistical description of a distribution in terms of occurrence frequencies of different event classes; for color, the event classes are regions in color space.

- HSV color space. The HSV (Hue, Saturation, Value) model, also called HSB (Hue, Saturation, Brightness), defines a color space in terms of three constituent components:

- Hue, the color type (such as red, blue, or yellow)

- Saturation, the "vibrancy" of the color and colorimetric purity

- Value, the brightness of the color
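The Histogram entry describes counting cases into adjacent, non-overlapping intervals. For a grey-level image, this means counting pixels per intensity range. The minimal Python sketch below assumes integer grey values in 0..255 and equal-width bins. The function name is hypothetical.

```python
def gray_histogram(pixels, bins=256, max_value=255):
    """Count how many grey values fall into each of `bins` equal-width,
    adjacent, non-overlapping intervals covering 0..max_value.

    Assumes every value lies within 0..max_value.
    """
    counts = [0] * bins
    width = (max_value + 1) / bins
    for p in pixels:
        counts[int(p // width)] += 1
    return counts

pixels = [0, 10, 10, 128, 200, 255]
print(gray_histogram(pixels, bins=4))
```

A color histogram works the same way, except that each bin is a region of a 2D or 3D color space rather than an interval of a single intensity.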

I

- Image file formats. Image file formats provide a standardized method of organizing and storing image data. Image files are made up of either pixel or vector (geometric) data; vector data is rasterized to pixels in the display process, with a few exceptions in vector graphic display. The pixels that make up an image are arranged in a grid of columns and rows, and each pixel stores digital numbers representing brightness and color.

- Image segmentation.

- Infrared imaging. See Thermographic camera.

- Incandescent light bulb. An incandescent light bulb generates light using a glowing filament heated to white-hot by an electric current.

J

- JPEG. JPEG (pronounced jay-peg) is the most commonly used standard method of lossy compression for photographic images.

K

- Kell factor. A parameter used to determine the effective resolution of a discrete display device.

L

- Laser. In physics, a laser is a device that emits light through a specific mechanism for which the term laser is an acronym: light amplification by stimulated emission of radiation.

- Lens. A lens is a device that causes light to either converge and concentrate or to diverge, usually formed from a piece of shaped glass. Lenses may be combined to form more complex optical systems, such as a normal lens or a telephoto lens.

- Lens Controller. A lens controller is a device used to control a motorized zoom, focus, and iris (ZFI) lens. Lens controllers may be internal to a camera, a set of switches used manually, or a sophisticated device that allows control of a lens with a computer.

- Lighting. Lighting refers to either artificial light sources such as lamps or to natural illumination.

M

- Metrology. Metrology is the science of measurement. There are many applications for machine vision in metrology.

- Machine vision. Machine vision is the application of computer vision to industry and manufacturing.

- Motion perception. Motion perception is the process of inferring the speed and direction of objects and surfaces that move in a visual scene given some visual input.

N

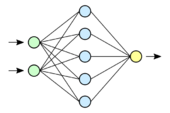

- Neural network. An artificial neural network (ANN) is an interconnected group of artificial neurons that uses a mathematical or computational model for information processing based on a connectionist approach to computation. In most cases an ANN is an adaptive system that changes its structure based on external or internal information that flows through the network.

- Normal lens. In machine vision a normal or entocentric lens is a lens that generates images that are generally held to have a "natural" perspective compared with lenses with longer or shorter focal lengths. Lenses of shorter focal length are called wide-angle lenses, while longer focal length lenses are called telephoto lenses.

O

- Optical character recognition. Optical character recognition, usually abbreviated to OCR, involves computer software designed to translate images of typewritten text (usually captured by a scanner) into machine-editable text, or to translate pictures of characters into a standard encoding scheme representing them (such as ASCII or Unicode).

- Optical resolution. Describes the ability of a system to distinguish, detect, and/or record physical details by electromagnetic means. The system may be imaging (e.g., a camera) or non-imaging (e.g., a quad-cell laser detector).

- Optical transfer function.

P

- Pattern recognition. A field within the area of machine learning. Alternatively, it can be defined as the act of taking in raw data and taking an action based on the category of the data. It encompasses methods for both supervised and unsupervised learning.

- Pixel. A pixel is one of the many tiny dots that make up the representation of a picture in a computer's memory or screen.

- Pixelation. In computer graphics, pixelation is an effect caused by displaying a bitmap or a section of a bitmap at such a large size that individual pixels, small single-colored square display elements that comprise the bitmap, are visible.

- Prime lens. Mechanical assembly of lenses whose focal length is fixed, as opposed to a zoom lens, which has a variable focal length.
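The Pixelation entry describes individual pixels becoming visible when a bitmap is displayed at a large size. Nearest-neighbour enlargement reproduces this effect directly by repeating each pixel. The minimal Python sketch below assumes an integer scale factor and a 2D-list image. The function name is hypothetical.

```python
def upscale_nearest(image, factor):
    """Enlarge a 2D grid by an integer factor by repeating each pixel, so that
    every source pixel becomes a visible factor x factor block."""
    return [
        [value for value in row for _ in range(factor)]  # repeat along the row
        for row in image
        for _ in range(factor)                           # repeat each row
    ]

small = [[0, 255],
         [255, 0]]
for row in upscale_nearest(small, 2):
    print(row)
```

Smoother interpolation methods, such as bilinear, hide the blocks but cannot recover detail that the original bitmap never contained.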

Q

- Q-Factor (Optics). In optics, the Q factor of a resonant cavity is given by

$$Q = \frac{2\pi f_o \mathcal{E}}{P},$$

where $f_o$ is the resonant frequency, $\mathcal{E}$ is the stored energy in the cavity, and $P = -\frac{d\mathcal{E}}{dt}$ is the power dissipated. The optical Q is equal to the ratio of the resonant frequency to the bandwidth of the cavity resonance. The average lifetime of a resonant photon in the cavity is proportional to the cavity's Q. If the Q factor of a laser's cavity is abruptly changed from a low value to a high one, the laser will emit a pulse of light that is much more intense than the laser's normal continuous output. This technique is known as Q-switching.
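The Q-factor entry states that Q equals the resonant frequency divided by the resonance bandwidth, and that photon lifetime scales with Q. Assuming the stored energy decays exponentially, $\mathcal{E}(t) = \mathcal{E}_0 e^{-t/\tau}$, we have $P = \mathcal{E}/\tau$. The defining formula then gives $Q = 2\pi f_o \tau$, so $\tau = Q/(2\pi f_o)$. The minimal Python sketch below applies both relations. The frequency and linewidth are hypothetical values.

```python
import math

def q_from_bandwidth(f0_hz, bandwidth_hz):
    """Optical Q as resonant frequency divided by resonance bandwidth."""
    return f0_hz / bandwidth_hz

def photon_lifetime(q, f0_hz):
    """Energy (photon) lifetime tau = Q / (2*pi*f0), which follows from
    Q = 2*pi*f0*E/P when the stored energy decays as E0*exp(-t/tau)."""
    return q / (2 * math.pi * f0_hz)

f0 = 2.0e14        # hypothetical resonance, about 1.5 um wavelength
bandwidth = 2.0e8  # hypothetical linewidth of 200 MHz
q = q_from_bandwidth(f0, bandwidth)
print(f"Q = {q:.3g}, photon lifetime = {photon_lifetime(q, f0):.3g} s")
```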

R

- Region of interest. A Region of Interest, often abbreviated ROI, is a selected subset of samples within a dataset identified for a particular purpose.

- RGB. The RGB color model utilizes the additive model in which red, green, and blue light are combined in various ways to create other colors.

- ROI. See Region of Interest.

- Foreground, figure and objects. See also spatial-taxon.
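The Region of interest entry describes selecting a subset of samples for a particular purpose. In machine vision this is often a rectangle that later processing steps are restricted to. The minimal Python sketch below assumes an image stored as a 2D list indexed `image[row][col]` and an ROI given by its top-left corner and size. The function name is hypothetical.

```python
def crop_roi(image, x, y, width, height):
    """Return the rectangular region of interest whose top-left corner is
    (x, y) in a 2D list indexed as image[row][col]."""
    return [row[x:x + width] for row in image[y:y + height]]

image = [[10 * r + c for c in range(5)] for r in range(4)]
roi = crop_roi(image, x=1, y=2, width=3, height=2)
print(roi)
```

Python slicing silently clips an ROI that extends past the image border. A production implementation would usually validate the rectangle instead.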

S

- S-video. Separate video, abbreviated S-Video and also known as Y/C (or erroneously, S-VHS and "super video") is an analog video signal that carries the video data as two separate signals (brightness and color), unlike composite video which carries the entire set of signals in one signal line. S-Video, as most commonly implemented, carries high-bandwidth 480i or 576i resolution video, i.e. standard-definition video. It does not carry audio on the same cable.

- Scheimpflug principle.

- Shutter. A shutter is a device that allows light to pass for a determined period of time, for the purpose of exposing the image sensor to the right amount of light to create a permanent image of a view.

- Shutter speed. In machine vision the shutter speed is the time for which the shutter is held open during the taking of an image to allow light to reach the imaging sensor. In combination with variation of the lens aperture, this regulates how much light the imaging sensor in a digital camera will receive.

- Smart camera. A smart camera is an integrated machine vision system which, in addition to image capture circuitry, includes a processor, which can extract information from images without need for an external processing unit, and interface devices used to make results available to other devices.

- Spatial-Taxon. Spatial-taxons are information granules, composed of non-mutually exclusive pixel regions, within scene architecture. They are similar to the Gestalt psychological designation of figure-ground, but are extended to include foreground, object groups, objects and salient object parts.

- Structured-light 3D scanner. The process of projecting a known pattern of illumination (often grids or horizontal bars) onto a scene. The way that these patterns appear to deform when striking surfaces allows vision systems to calculate the depth and surface information of the objects in the scene.

- SVGA. Super Video Graphics Array, almost always abbreviated to Super VGA or just SVGA is a broad term that covers a wide range of computer display standards.
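The Shutter speed entry notes that exposure time and lens aperture together regulate how much light reaches the sensor. A common way to express this uses the f-number $N$, the focal length divided by the aperture diameter, which this glossary does not define. Light reaching the sensor is then proportional to $t/N^2$, and the exposure value is $\mathrm{EV} = \log_2(N^2/t)$. The minimal Python sketch below assumes these relations. The settings are hypothetical.

```python
import math

def relative_exposure(shutter_s, f_number):
    """Light reaching the sensor, up to a constant: exposure time divided by
    the square of the f-number (aperture area scales as 1/N^2)."""
    return shutter_s / f_number ** 2

def exposure_value(shutter_s, f_number):
    """Exposure value EV = log2(N^2 / t); settings with equal EV admit the
    same amount of light."""
    return math.log2(f_number ** 2 / shutter_s)

# Halving the shutter time while opening the aperture by one stop
# (dividing N by sqrt(2)) keeps the exposure the same.
a = relative_exposure(1 / 250, 8.0)
b = relative_exposure(1 / 500, 8.0 / math.sqrt(2))
print(math.isclose(a, b))
```

In machine vision, short exposure times are often needed to freeze moving parts. The lost light then has to be recovered with a wider aperture or stronger lighting, at the cost of depth of field.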

T

- Telecentric lens. Compound lens with an unusual property concerning its geometry of image-forming rays. In machine vision systems telecentric lenses are usually employed in order to achieve dimensional and geometric invariance of images within a range of different distances from the lens and across the whole field of view.

- Telephoto lens. Lens whose focal length is significantly longer than the focal length of a normal lens.

- Thermography. Thermal imaging, a type of infrared imaging.

- TIFF. Tagged Image File Format (abbreviated TIFF) is a file format for mainly storing images, including photographs and line art.

U

- USB. Universal Serial Bus (USB) provides a serial bus standard for connecting devices, usually to computers such as PCs, and is also commonly used on cameras.

V

- VESA. The Video Electronics Standards Association (VESA) is an international body, founded in the late 1980s by NEC Home Electronics and eight other video display adapter manufacturers. The initial goal was to produce a standard for 800×600 SVGA resolution video displays. Since then VESA has issued a number of standards, mostly relating to the function of video peripherals in IBM PC compatible computers.

- VGA. Video Graphics Array (VGA) is a computer display standard first marketed in 1987 by IBM.

- Vision processing unit. A class of microprocessors aimed at accelerating machine vision tasks.

W

- Wide-angle lens. In photography and cinematography, a wide-angle lens is a lens whose focal length is shorter than the focal length of a normal lens.
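The Normal lens, Telephoto lens, and Wide-angle lens entries distinguish lenses by focal length. The practical consequence is the angle of view. For a rectilinear lens focused at infinity, the angle along one sensor dimension $d$ is approximately $2\arctan(d/(2f))$. The minimal Python sketch below assumes that formula. The 36 mm sensor width and the focal lengths are hypothetical.

```python
import math

def angle_of_view_deg(sensor_mm, focal_mm):
    """Angle of view along one sensor dimension for a rectilinear lens
    focused at infinity: 2 * atan(d / (2 f)), in degrees."""
    return math.degrees(2 * math.atan(sensor_mm / (2 * focal_mm)))

# Hypothetical 36 mm wide sensor: shorter focal lengths give wider views.
for f in (16.0, 50.0, 200.0):
    print(f"{f:>5.0f} mm -> {angle_of_view_deg(36.0, f):.1f} deg")
```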

X

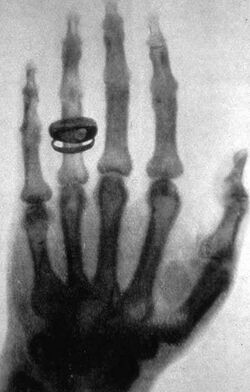

- X-rays. A form of electromagnetic radiation with a wavelength in the range of 10 to 0.01 nanometers, corresponding to frequencies in the range 30 to 30,000 PHz (1 PHz = $10^{15}$ hertz). X-rays are primarily used for diagnostic medical and industrial imaging as well as crystallography. X-rays are a form of ionizing radiation and as such can be dangerous.
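The frequency range in the X-rays entry follows from the wavelength range via $f = c/\lambda$. The short Python check below uses the speed of light in vacuum and converts the two endpoint wavelengths. The function name is hypothetical.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s

def frequency_hz(wavelength_m):
    """Frequency of electromagnetic radiation from its wavelength, f = c / lambda."""
    return C / wavelength_m

for nm in (10.0, 0.01):
    f = frequency_hz(nm * 1e-9)
    print(f"{nm} nm -> {f / 1e15:,.0f} PHz")
```

Because frequency is inversely proportional to wavelength, the thousandfold span in wavelength (10 nm down to 0.01 nm) maps to a thousandfold span in frequency, from about 30 PHz to about 30,000 PHz.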

Y

- Y-cable. A Y-cable or Y cable is an electrical cable containing three ends of which one is a common end that in turn leads to a split into the remaining two ends, resembling the letter "Y". Y-cables are typically, but not necessarily, short (less than 12 inches), and often the ends connect to other cables. Uses may be as simple as splitting one audio or video channel into two, to more complex uses such as splicing signals from a high density computer connector to its appropriate peripheral.

Z

- Zoom lens. A mechanical assembly of lenses whose focal length can be changed, as opposed to a prime lens, which has a fixed focal length.

See also

- Glossary of artificial intelligence

- Frame grabber

- Google Goggles

- Machine vision glossary

- Morphological image processing

- OpenCV

- Smart camera

References

- Hartley, Richard I. (15 May 1998). "Minimizing algebraic error". Philosophical Transactions of the Royal Society of London. Series A: Mathematical, Physical and Engineering Sciences 356 (1740): 1175–1192. doi:10.1098/rsta.1998.0216. http://users.cecs.anu.edu.au/~hartley/Papers/algebraic/RoyalSociety/algebraic.pdf.
