Exact differential equation

In mathematics, an exact differential equation or total differential equation is a certain kind of ordinary differential equation which is widely used in physics and engineering.

Definition

Given a simply connected and open subset D of [math]\displaystyle{ \mathbb{R}^2 }[/math] and two functions I and J which are continuous on D, an implicit first-order ordinary differential equation of the form

- [math]\displaystyle{ I(x, y)\, dx + J(x, y)\, dy = 0, }[/math]

is called an exact differential equation if there exists a continuously differentiable function F, called the potential function,[1][2] so that

- [math]\displaystyle{ \frac{\partial F}{\partial x} = I }[/math]

and

- [math]\displaystyle{ \frac{\partial F}{\partial y} = J. }[/math]

An exact equation may also be presented in the following form:

- [math]\displaystyle{ I(x, y) + J(x, y) \, y'(x) = 0 }[/math]

where the same constraints on I and J apply for the differential equation to be exact.

The nomenclature of "exact differential equation" refers to the exact differential of a function. For a function [math]\displaystyle{ F(x_0, x_1,...,x_{n-1},x_n) }[/math], the exact or total derivative with respect to [math]\displaystyle{ x_0 }[/math] is given by

- [math]\displaystyle{ \frac{dF}{dx_0}=\frac{\partial F}{\partial x_0}+\sum_{i=1}^{n}\frac{\partial F}{\partial x_i}\frac{dx_i}{dx_0}. }[/math]

Example

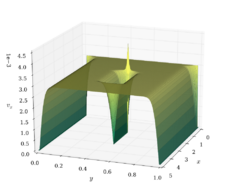

The function [math]\displaystyle{ F:\mathbb{R}^{2}\to\mathbb{R} }[/math] given by

- [math]\displaystyle{ F(x,y) = \frac{1}{2}(x^2 + y^2)+c }[/math]

is a potential function for the differential equation

- [math]\displaystyle{ x\,dx + y\,dy = 0.\, }[/math]
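The claim can be checked mechanically. The following is a minimal sketch, assuming the SymPy library is available (it is not part of the original text): it differentiates [math]\displaystyle{ F }[/math] and compares the partial derivatives with the coefficients of [math]\displaystyle{ dx }[/math] and [math]\displaystyle{ dy }[/math].

```python
import sympy as sp

x, y, c = sp.symbols("x y c")

# Candidate potential function from the example above.
F = sp.Rational(1, 2) * (x**2 + y**2) + c

# For x dx + y dy = 0 we need F_x = x and F_y = y.
F_x = sp.diff(F, x)
F_y = sp.diff(F, y)
print(F_x, F_y)
```

The constant [math]\displaystyle{ c }[/math] drops out on differentiation, which is why a potential function is only determined up to an additive constant.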

First order exact differential equations

Identifying first order exact differential equations

Let the functions [math]\displaystyle{ M }[/math], [math]\displaystyle{ N }[/math], [math]\displaystyle{ M_y }[/math], and [math]\displaystyle{ N_x }[/math], where the subscripts denote partial derivatives with respect to the indicated variable, be continuous in the region [math]\displaystyle{ R: \alpha \lt x \lt \beta, \gamma \lt y \lt \delta }[/math]. Then the differential equation

[math]\displaystyle{ M(x, y) + N(x, y)\frac{dy}{dx} = 0 }[/math]

is exact if and only if

[math]\displaystyle{ M_y(x, y) = N_x(x, y) }[/math]

That is, there exists a function [math]\displaystyle{ \psi(x, y) }[/math], called a potential function, such that

[math]\displaystyle{ \psi _x(x, y) = M(x, y) \text{ and } \psi_y(x, y) = N(x, y) }[/math]

So, in general:

[math]\displaystyle{ M_y(x, y) = N_x(x, y) \iff \begin{cases} \exists \psi(x, y)\\ \psi_x(x, y) = M(x, y)\\ \psi_y(x, y) = N(x, y) \end{cases} }[/math]
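The test [math]\displaystyle{ M_y = N_x }[/math] is easy to automate. Below is a small sketch using SymPy (an assumption; the article itself does not prescribe any software). The helper name `is_exact` is hypothetical, and the check relies on `simplify` reducing the difference to zero, which may not succeed for every complicated expression.

```python
import sympy as sp

x, y = sp.symbols("x y")

def is_exact(M, N):
    """Return True if M_y - N_x simplifies to zero (hypothetical helper)."""
    return sp.simplify(sp.diff(M, y) - sp.diff(N, x)) == 0

# 2xy + (x^2 + 3y^2) y' = 0: M_y = 2x and N_x = 2x, so the equation is exact.
print(is_exact(2*x*y, x**2 + 3*y**2))

# y - x y' = 0: M_y = 1 but N_x = -1, so the equation is not exact.
print(is_exact(y, -x))
```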

Proof

The proof has two parts.

First, suppose there is a function [math]\displaystyle{ \psi(x,y) }[/math] such that [math]\displaystyle{ \psi_x(x, y) = M(x, y) \text{ and } \psi_y(x, y) = N(x, y) }[/math]

It then follows that [math]\displaystyle{ M_y(x, y) = \psi _{xy}(x, y) \text{ and } N_x(x, y) = \psi _{yx}(x, y) }[/math]

Since [math]\displaystyle{ M_y }[/math] and [math]\displaystyle{ N_x }[/math] are continuous, so are [math]\displaystyle{ \psi _{xy} }[/math] and [math]\displaystyle{ \psi _{yx} }[/math], which guarantees that the mixed partial derivatives are equal. Hence [math]\displaystyle{ M_y(x, y) = N_x(x, y) }[/math].

The second part of the proof involves the construction of [math]\displaystyle{ \psi(x, y) }[/math] and can also be used as a procedure for solving first-order exact differential equations. Suppose that [math]\displaystyle{ M_y(x, y) = N_x(x, y) }[/math]. We seek a function [math]\displaystyle{ \psi(x, y) }[/math] for which [math]\displaystyle{ \psi _x(x, y) = M(x, y) \text{ and } \psi _y(x, y) = N(x, y) }[/math]

Begin by integrating the first equation with respect to [math]\displaystyle{ x }[/math]. In practice, it doesn't matter if you integrate the first or the second equation, so long as the integration is done with respect to the appropriate variable.

[math]\displaystyle{ \frac{\partial \psi}{\partial x}(x, y) = M(x, y) }[/math]

[math]\displaystyle{ \psi(x, y) = \int{M(x, y) dx} + h(y) }[/math]

[math]\displaystyle{ \psi(x, y) = Q(x, y) + h(y) }[/math]

where [math]\displaystyle{ Q(x, y) }[/math] is any differentiable function such that [math]\displaystyle{ Q_x = M }[/math]. The function [math]\displaystyle{ h(y) }[/math] plays the role of a constant of integration, but instead of just a constant, it is a function of [math]\displaystyle{ y }[/math], since [math]\displaystyle{ M }[/math] is a function of both [math]\displaystyle{ x }[/math] and [math]\displaystyle{ y }[/math] and we are only integrating with respect to [math]\displaystyle{ x }[/math].

It remains to show that it is always possible to find an [math]\displaystyle{ h(y) }[/math] such that [math]\displaystyle{ \psi _y = N }[/math]. Start from [math]\displaystyle{ \psi(x, y) = Q(x, y) + h(y) }[/math]

Differentiate both sides with respect to [math]\displaystyle{ y }[/math]. [math]\displaystyle{ \frac{\partial \psi}{\partial y}(x, y) = \frac{\partial Q}{\partial y}(x, y) + h'(y) }[/math]

Set the result equal to [math]\displaystyle{ N }[/math] and solve for [math]\displaystyle{ h'(y) }[/math]. [math]\displaystyle{ h'(y) = N(x, y) - \frac{\partial Q}{\partial y}(x, y) }[/math]

In order to determine [math]\displaystyle{ h'(y) }[/math] from this equation, the right-hand side must depend only on [math]\displaystyle{ y }[/math]. This can be proven by showing that its derivative with respect to [math]\displaystyle{ x }[/math] is always zero. Differentiating the right-hand side with respect to [math]\displaystyle{ x }[/math] and exchanging the order of differentiation gives [math]\displaystyle{ \frac{\partial N}{\partial x}(x, y) - \frac{\partial}{\partial x}\frac{\partial Q}{\partial y}(x, y) = \frac{\partial N}{\partial x}(x, y) - \frac{\partial}{\partial y}\frac{\partial Q}{\partial x}(x, y) }[/math]

Since [math]\displaystyle{ Q_x = M }[/math], this becomes [math]\displaystyle{ \frac{\partial N}{\partial x}(x, y) - \frac{\partial M}{\partial y}(x, y) }[/math], which is zero by our initial supposition that [math]\displaystyle{ M_y(x, y) = N_x(x, y) }[/math]

Therefore, [math]\displaystyle{ h'(y) = N(x, y) - \frac{\partial Q}{\partial y}(x, y) }[/math] [math]\displaystyle{ h(y) = \int{\left(N(x, y) - \frac{\partial Q}{\partial y}(x, y)\right) dy} }[/math]

[math]\displaystyle{ \psi(x, y) = Q(x, y) + \int{\left(N(x, y) - \frac{\partial Q}{\partial y}(x, y)\right) dy} + C }[/math]

And this completes the proof.
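The construction in the second part of the proof translates directly into a procedure. The sketch below assumes SymPy; the function name `potential` and the sample equation [math]\displaystyle{ (2xy + \cos x) + (x^2 + 2y)\,y' = 0 }[/math] are illustrative choices, not taken from the article. It integrates [math]\displaystyle{ M }[/math] with respect to [math]\displaystyle{ x }[/math] to obtain [math]\displaystyle{ Q }[/math], forms [math]\displaystyle{ h'(y) = N - Q_y }[/math], and rejects the input if that expression still depends on [math]\displaystyle{ x }[/math].

```python
import sympy as sp

x, y = sp.symbols("x y")

def potential(M, N):
    """Build psi with psi_x = M and psi_y = N, following the proof's steps."""
    Q = sp.integrate(M, x)                      # Q_x = M
    h_prime = sp.simplify(N - sp.diff(Q, y))    # must be free of x if exact
    if h_prime.has(x):
        raise ValueError("M_y != N_x: the equation is not exact")
    h = sp.integrate(h_prime, y)
    return sp.expand(Q + h)                     # additive constant C omitted

# Illustrative exact equation: (2xy + cos x) + (x^2 + 2y) y' = 0
psi = potential(2*x*y + sp.cos(x), x**2 + 2*y)
print(psi)
```

The solutions of the sample equation are then the level curves of the returned `psi`.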

Solutions to first order exact differential equations

First order exact differential equations of the form [math]\displaystyle{ M(x, y) + N(x, y)\frac{dy}{dx} = 0 }[/math]

can be written in terms of the potential function [math]\displaystyle{ \psi(x, y) }[/math] [math]\displaystyle{ \frac{\partial \psi}{\partial x} + \frac{\partial \psi}{\partial y}\frac{dy}{dx} = 0 }[/math]

where [math]\displaystyle{ \begin{cases} \psi _x(x, y) = M(x, y)\\ \psi _y(x, y) = N(x, y) \end{cases} }[/math]

This is equivalent to taking the exact differential of [math]\displaystyle{ \psi(x,y) }[/math]. [math]\displaystyle{ \frac{\partial \psi}{\partial x} + \frac{\partial \psi}{\partial y}\frac{dy}{dx} = 0 \iff \frac{d}{dx}\psi(x, y(x)) = 0 }[/math]

The solutions to an exact differential equation are then given by [math]\displaystyle{ \psi(x, y(x)) = c }[/math]

and the problem reduces to finding [math]\displaystyle{ \psi(x, y) }[/math].

This can be done by integrating the two expressions [math]\displaystyle{ M(x, y) dx }[/math] and [math]\displaystyle{ N(x, y) dy }[/math] and then writing down each term in the resulting expressions only once and summing them up in order to get [math]\displaystyle{ \psi(x, y) }[/math].

The reasoning behind this is the following. Since [math]\displaystyle{ \begin{cases} \psi _x(x, y) = M(x, y)\\ \psi _y(x, y) = N(x, y) \end{cases} }[/math]

it follows, by integrating both sides, that [math]\displaystyle{ \begin{cases} \psi(x, y) = \int{M(x, y) dx} + h(y) = Q(x, y) + h(y)\\ \psi(x, y) = \int{N(x, y) dy} + g(x) = P(x, y) + g(x) \end{cases} }[/math]

Therefore, [math]\displaystyle{ Q(x, y) + h(y) = P(x, y) + g(x) }[/math]

where [math]\displaystyle{ Q(x, y) }[/math] and [math]\displaystyle{ P(x, y) }[/math] are differentiable functions such that [math]\displaystyle{ Q_x = M }[/math] and [math]\displaystyle{ P_y = N }[/math].

For both sides to equal the same function [math]\displaystyle{ \psi(x, y) }[/math], the term [math]\displaystyle{ h(y) }[/math] must already appear within the expression for [math]\displaystyle{ P(x, y) }[/math]: it cannot be part of [math]\displaystyle{ g(x) }[/math], because it depends only on [math]\displaystyle{ y }[/math] and not on [math]\displaystyle{ x }[/math]. By the same argument, [math]\displaystyle{ g(x) }[/math] must appear within the expression for [math]\displaystyle{ Q(x, y) }[/math].

Ergo, [math]\displaystyle{ Q(x, y) = g(x) + f(x, y) \text{ and } P(x, y) = h(y) + d(x, y) }[/math]

for some expressions [math]\displaystyle{ f(x, y) }[/math] and [math]\displaystyle{ d(x, y) }[/math]. Substituting these into the above equation, we find that [math]\displaystyle{ g(x) + f(x, y) + h(y) = h(y) + d(x, y) + g(x) \Rightarrow f(x, y) = d(x, y) }[/math] and so [math]\displaystyle{ f(x, y) }[/math] and [math]\displaystyle{ d(x, y) }[/math] turn out to be the same function. Therefore, [math]\displaystyle{ Q(x, y) = g(x) + f(x, y) \text { and } P(x, y) = h(y) + f(x, y) }[/math]

Since we already showed that [math]\displaystyle{ \begin{cases} \psi(x, y) = Q(x, y) + h(y)\\ \psi(x, y) = P(x, y) + g(x) \end{cases} }[/math]

it follows that [math]\displaystyle{ \psi(x, y) = g(x) + f(x, y) + h(y) }[/math]

So, we can construct [math]\displaystyle{ \psi(x, y) }[/math] by computing [math]\displaystyle{ \int{M(x,y) dx} }[/math] and [math]\displaystyle{ \int{N(x, y) dy} }[/math], taking the terms common to the two resulting expressions (these make up [math]\displaystyle{ f(x, y) }[/math]) and then adding the terms that appear in only one of them, namely [math]\displaystyle{ g(x) }[/math] and [math]\displaystyle{ h(y) }[/math].
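The "write each term only once" rule can be illustrated as follows. This is a sketch under stated assumptions: it uses SymPy, the sample functions [math]\displaystyle{ M = 2xy + \cos x }[/math] and [math]\displaystyle{ N = x^2 + 2y }[/math] are chosen for illustration, and matching terms by set union only works when both integrals produce the shared terms in an identical form (with no stray constants).

```python
import sympy as sp

x, y = sp.symbols("x y")

# Illustrative exact equation: M_y = N_x = 2x.
M = 2*x*y + sp.cos(x)
N = x**2 + 2*y

Q = sp.expand(sp.integrate(M, x))   # contains f(x, y) and g(x)
P = sp.expand(sp.integrate(N, y))   # contains f(x, y) and h(y)

# Collect the additive terms of Q and P; a set keeps shared terms only once.
terms = set(sp.Add.make_args(Q)) | set(sp.Add.make_args(P))
psi = sp.Add(*terms)
print(psi)
```

Here the shared term is [math]\displaystyle{ f(x, y) = x^2 y }[/math], while [math]\displaystyle{ g(x) = \sin x }[/math] and [math]\displaystyle{ h(y) = y^2 }[/math] each appear in only one of the two integrals.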

Second order exact differential equations

The concept of exact differential equations can be extended to second order equations.[3] Consider starting with the first-order exact equation:

- [math]\displaystyle{ I\left(x,y\right)+J\left(x,y\right){dy \over dx}=0 }[/math]

Since both functions [math]\displaystyle{ I\left(x,y\right) }[/math], [math]\displaystyle{ J\left(x,y\right) }[/math] are functions of two variables, implicitly differentiating the multivariate function yields

- [math]\displaystyle{ {dI \over dx} +\left({ dJ\over dx}\right){dy \over dx}+{d^2y \over dx^2}\left(J\left(x,y\right)\right)=0 }[/math]

Expanding the total derivatives gives that

- [math]\displaystyle{ {dI \over dx}={\partial I\over\partial x}+{\partial I\over\partial y}{dy \over dx} }[/math]

and that

- [math]\displaystyle{ {dJ \over dx}={\partial J\over\partial x}+{\partial J\over\partial y}{dy \over dx} }[/math]

Combining the [math]\displaystyle{ {dy \over dx} }[/math] terms gives

- [math]\displaystyle{ {\partial I\over\partial x}+{dy \over dx}\left({\partial I\over\partial y}+{\partial J\over\partial x}+{\partial J\over\partial y}{dy \over dx}\right)+{d^2y \over dx^2}\left(J\left(x,y\right)\right)=0 }[/math]

If the equation is exact, then [math]\displaystyle{ {\partial J\over\partial x}={\partial I\over\partial y} }[/math]. Additionally, the total derivative of [math]\displaystyle{ J\left(x,y\right) }[/math] is equal to its implicit ordinary derivative [math]\displaystyle{ {dJ \over dx} }[/math]. This leads to the rewritten equation

- [math]\displaystyle{ {\partial I\over\partial x}+{dy \over dx}\left({\partial J\over\partial x}+{dJ \over dx}\right)+{d^2y \over dx^2}\left(J\left(x,y\right)\right)=0 }[/math]
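The rewritten form can be sanity-checked on a concrete case. The sketch below assumes SymPy and uses the illustrative pair [math]\displaystyle{ I = 2xy }[/math], [math]\displaystyle{ J = x^2 + 3y^2 }[/math] (which satisfies [math]\displaystyle{ I_y = J_x }[/math]); the symbol `Y` is a placeholder for [math]\displaystyle{ y }[/math] so that partial derivatives can be taken before [math]\displaystyle{ y(x) }[/math] is substituted.

```python
import sympy as sp

x, Y = sp.symbols("x Y")
y = sp.Function("y")(x)
yp = y.diff(x)

# Illustrative exact pair (I_Y = J_x = 2x); Y is a placeholder for y.
I_expr = 2*x*Y
J_expr = x**2 + 3*Y**2

def on_curve(expr):
    """Replace the placeholder Y by the function y(x)."""
    return expr.subs(Y, y)

# Left side: differentiate the first-order equation I + J y' directly.
lhs = sp.diff(on_curve(I_expr) + on_curve(J_expr) * yp, x)

# Right side: I_x + y' (J_x + dJ/dx) + y'' J, with partials taken before substitution.
I_x = on_curve(sp.diff(I_expr, x))
J_x = on_curve(sp.diff(J_expr, x))
dJ_dx = sp.diff(on_curve(J_expr), x)          # total derivative of J
rhs = I_x + yp * (J_x + dJ_dx) + y.diff(x, 2) * on_curve(J_expr)

difference = sp.expand(lhs - rhs)
print(difference)
```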

Now, let there be some second-order differential equation

- [math]\displaystyle{ f\left(x,y\right)+g\left(x,y,{dy \over dx}\right){dy \over dx}+{d^2y \over dx^2}\left(J\left(x,y\right)\right)=0 }[/math]

If [math]\displaystyle{ {\partial J\over\partial x}={\partial I\over\partial y} }[/math] for exact differential equations, then

- [math]\displaystyle{ \int \left({\partial I\over\partial y}\right)dy=\int \left({\partial J\over\partial x}\right)dy }[/math]

and

- [math]\displaystyle{ \int \left({\partial I\over\partial y}\right)dy=\int \left({\partial J\over\partial x}\right)dy=I\left(x,y\right)-h\left(x\right) }[/math]

where [math]\displaystyle{ h\left(x\right) }[/math] is some arbitrary function only of [math]\displaystyle{ x }[/math] that was differentiated away to zero upon taking the partial derivative of [math]\displaystyle{ I\left(x,y\right) }[/math] with respect to [math]\displaystyle{ y }[/math]. Although the sign on [math]\displaystyle{ h\left(x\right) }[/math] could be positive, it is more intuitive to think of the integral's result as [math]\displaystyle{ I\left(x,y\right) }[/math] that is missing some original extra function [math]\displaystyle{ h\left(x\right) }[/math] that was partially differentiated to zero.

Next, if

- [math]\displaystyle{ {dI\over dx}={\partial I\over\partial x}+{\partial I\over\partial y}{dy \over dx} }[/math]

then the term [math]\displaystyle{ {\partial I\over\partial x} }[/math] should be a function only of [math]\displaystyle{ x }[/math] and [math]\displaystyle{ y }[/math], since partial differentiation with respect to [math]\displaystyle{ x }[/math] will hold [math]\displaystyle{ y }[/math] constant and not produce any derivatives of [math]\displaystyle{ y }[/math]. In the second order equation

- [math]\displaystyle{ f\left(x,y\right)+g\left(x,y,{dy \over dx}\right){dy \over dx}+{d^2y \over dx^2}\left(J\left(x,y\right)\right)=0 }[/math]

only the term [math]\displaystyle{ f\left(x,y\right) }[/math] is a term purely of [math]\displaystyle{ x }[/math] and [math]\displaystyle{ y }[/math]. Let [math]\displaystyle{ {\partial I\over\partial x}=f\left(x,y\right) }[/math]. If [math]\displaystyle{ {\partial I\over\partial x}=f\left(x,y\right) }[/math], then

- [math]\displaystyle{ f\left(x,y\right)={ dI\over dx}-{\partial I\over\partial y}{dy \over dx} }[/math]

Since the total derivative of [math]\displaystyle{ I\left(x,y\right) }[/math] with respect to [math]\displaystyle{ x }[/math] is equivalent to the implicit ordinary derivative [math]\displaystyle{ {dI \over dx} }[/math] , then

- [math]\displaystyle{ f\left(x,y\right)+{\partial I\over\partial y}{dy \over dx}={dI \over dx}={d \over dx}\left(I\left(x,y\right)-h\left(x\right)\right)+{dh\left(x\right) \over dx} }[/math]

So,

- [math]\displaystyle{ {dh\left(x\right) \over dx}=f\left(x,y\right)+{\partial I\over\partial y}{dy \over dx}-{d \over dx}\left(I\left(x,y\right)-h\left(x\right)\right) }[/math]

and

- [math]\displaystyle{ h\left(x\right)=\int\left(f\left(x,y\right)+{\partial I\over\partial y}{dy \over dx}-{d \over dx}\left(I\left(x,y\right)-h\left(x\right)\right)\right)dx }[/math]

Thus, the second order differential equation

- [math]\displaystyle{ f\left(x,y\right)+g\left(x,y,{dy \over dx}\right){dy \over dx}+{d^2y \over dx^2}\left(J\left(x,y\right)\right)=0 }[/math]

is exact only if [math]\displaystyle{ g\left(x,y,{dy \over dx}\right)={dJ \over dx}+{\partial J\over\partial x} }[/math] and only if the expression below

- [math]\displaystyle{ \int\left(f\left(x,y\right)+{\partial I\over\partial y}{dy \over dx}-{d \over dx}\left(I\left(x,y\right)-h\left(x\right)\right)\right)dx=\int \left(f\left(x,y\right)-{\partial \left(I\left(x,y\right)-h\left(x\right)\right)\over\partial x}\right)dx }[/math]

is a function solely of [math]\displaystyle{ x }[/math]. Once [math]\displaystyle{ h\left(x\right) }[/math] is calculated with its arbitrary constant, it is added to [math]\displaystyle{ I\left(x,y\right)-h\left(x\right) }[/math] to make [math]\displaystyle{ I\left(x,y\right) }[/math]. If the equation is exact, then we can reduce to the first order exact form which is solvable by the usual method for first-order exact equations.

- [math]\displaystyle{ I\left(x,y\right)+J\left(x,y\right){dy \over dx}=0 }[/math]

Now, however, in the final implicit solution there will be a [math]\displaystyle{ C_1x }[/math] term from integration of [math]\displaystyle{ h\left(x\right) }[/math] with respect to [math]\displaystyle{ x }[/math] twice as well as a [math]\displaystyle{ C_2 }[/math], two arbitrary constants as expected from a second-order equation.

Example

Given the differential equation

- [math]\displaystyle{ \left(1-x^2\right)y''-4xy'-2y=0 }[/math]

one can always easily check for exactness by examining the [math]\displaystyle{ y'' }[/math] term. In this case, both the partial and total derivative of [math]\displaystyle{ 1-x^2 }[/math] with respect to [math]\displaystyle{ x }[/math] are [math]\displaystyle{ -2x }[/math], so their sum is [math]\displaystyle{ -4x }[/math], which is exactly the term in front of [math]\displaystyle{ y' }[/math]. With one of the conditions for exactness met, one can calculate that

- [math]\displaystyle{ \int \left(-2x\right)dy=I\left(x,y\right)-h\left(x\right)=-2xy }[/math]

Letting [math]\displaystyle{ f\left(x,y\right)=-2y }[/math], then

- [math]\displaystyle{ \int \left(-2y-2xy'-{d \over dx}\left(-2xy \right)\right)dx=\int \left(-2y-2xy'+2xy'+2y\right)dx=\int \left(0\right)dx=h\left(x\right) }[/math]

So, [math]\displaystyle{ h\left(x\right) }[/math] is indeed a function only of [math]\displaystyle{ x }[/math] and the second order differential equation is exact. Therefore, [math]\displaystyle{ h\left(x\right)=C_1 }[/math] and [math]\displaystyle{ I\left(x,y\right)=-2xy+C_1 }[/math]. Reduction to a first-order exact equation yields

- [math]\displaystyle{ -2xy+C_1+\left(1-x^2\right)y'=0 }[/math]

Integrating [math]\displaystyle{ I\left(x,y\right) }[/math] with respect to [math]\displaystyle{ x }[/math] yields

- [math]\displaystyle{ -x^2y+C_1x+i\left(y\right)=0 }[/math]

where [math]\displaystyle{ i\left(y\right) }[/math] is some arbitrary function of [math]\displaystyle{ y }[/math]. Differentiating with respect to [math]\displaystyle{ y }[/math] and equating the result to the coefficient of [math]\displaystyle{ y' }[/math] in the first-order equation gives

- [math]\displaystyle{ -x^2+i'\left(y\right)=1-x^2 }[/math]

So, [math]\displaystyle{ i\left(y\right)=y+C_2 }[/math] and the full implicit solution becomes

- [math]\displaystyle{ C_1x+C_2+y-x^2y=0 }[/math]

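Solving explicitly for [math]\displaystyle{ y }[/math] yields

- [math]\displaystyle{ y= -\frac{C_1x+C_2}{1-x^2} }[/math]

Since [math]\displaystyle{ C_1 }[/math] and [math]\displaystyle{ C_2 }[/math] are arbitrary, the overall sign may be absorbed into the constants.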
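The steps of this example can be replayed symbolically. The following sketch assumes SymPy; `Y` is a placeholder symbol for [math]\displaystyle{ y }[/math] used when taking partial derivatives, and all variable names are illustrative. Because [math]\displaystyle{ J = 1 - x^2 }[/math] does not depend on [math]\displaystyle{ y }[/math], its total and partial derivatives coincide, so the first condition is checked as [math]\displaystyle{ g = 2\,\partial J/\partial x }[/math].

```python
import sympy as sp

x, Y, C1, C2 = sp.symbols("x Y C1 C2")
y = sp.Function("y")(x)
yp = y.diff(x)

J = 1 - x**2      # coefficient of y''
g = -4*x          # coefficient of y'
f = -2*Y          # remaining term, with Y standing for y

# Condition 1: g = dJ/dx + J_x (both derivatives equal -2x here).
cond1 = sp.simplify(g - 2*sp.diff(J, x)) == 0

# I - h is the integral of J_x with respect to y; h'(x) = f - d(I - h)/dx (partial).
I_minus_h = sp.integrate(sp.diff(J, x), Y)
h_prime = sp.simplify(f - sp.diff(I_minus_h, x))

# With h = C1, the reduced first-order equation should differentiate to the ODE.
first_order = (I_minus_h + C1).subs(Y, y) + J*yp
ode_lhs = (1 - x**2)*y.diff(x, 2) - 4*x*yp - 2*y
residual = sp.expand(first_order.diff(x) - ode_lhs)

# Solve the implicit solution for y and substitute back into the ODE.
sol = sp.solve(C1*x + C2 + Y - x**2*Y, Y)[0]
check = sp.simplify(ode_lhs.subs(y, sol).doit())
print(cond1, h_prime, residual, check)
```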

Higher order exact differential equations

The concepts of exact differential equations can be extended to any order. Starting with the exact second order equation

- [math]\displaystyle{ {d^2y \over dx^2}\left(J\left(x,y\right)\right)+{dy \over dx}\left({dJ \over dx}+{\partial J\over\partial x}\right)+f\left(x,y\right)=0 }[/math]

it was previously shown that the equation is defined such that

- [math]\displaystyle{ f\left(x,y\right)={dh\left(x\right) \over dx}+{d \over dx}\left(I\left(x,y\right)-h\left(x\right)\right)-{\partial J\over\partial x}{dy \over dx} }[/math]

Implicit differentiation of the exact second-order equation [math]\displaystyle{ n }[/math] times will yield an [math]\displaystyle{ \left(n+2\right) }[/math]th order differential equation with new conditions for exactness that can be readily deduced from the form of the equation produced. For example, differentiating the above second-order differential equation once to yield a third-order exact equation gives the following form

- [math]\displaystyle{ {d^3y \over dx^3}\left(J\left(x,y\right)\right)+{d^2y \over dx^2}{dJ \over dx}+{d^2y \over dx^2}\left({dJ \over dx}+{\partial J\over\partial x}\right)+{dy \over dx}\left({d^2J \over dx^2}+{d \over dx}\left({\partial J\over\partial x}\right)\right)+{df\left(x,y\right) \over dx}=0 }[/math]

where

- [math]\displaystyle{ {df\left(x,y\right) \over dx}={d^2h\left(x\right) \over dx^2}+{d^2 \over dx^2}\left(I\left(x,y\right)-h\left(x\right)\right)-{d^2y \over dx^2}{\partial J\over\partial x}-{dy \over dx}{d \over dx}\left({\partial J\over\partial x}\right)=F\left(x,y,{dy \over dx}\right) }[/math]

and where [math]\displaystyle{ F\left(x,y,{dy \over dx}\right) }[/math] is a function only of [math]\displaystyle{ x,y }[/math] and [math]\displaystyle{ {dy \over dx} }[/math]. Combining all [math]\displaystyle{ {dy \over dx} }[/math] and [math]\displaystyle{ {d^2y \over dx^2} }[/math] terms not coming from [math]\displaystyle{ F\left(x,y,{dy \over dx}\right) }[/math] gives

- [math]\displaystyle{ {d^3y \over dx^3}\left(J\left(x,y\right)\right)+{d^2y \over dx^2}\left(2{dJ \over dx}+{\partial J\over\partial x}\right)+{dy \over dx}\left({d^2J \over dx^2}+{d \over dx}\left({\partial J\over\partial x}\right)\right)+F\left(x,y,{dy \over dx}\right)=0 }[/math]

Thus, the three conditions for exactness for a third-order differential equation are: the [math]\displaystyle{ {d^2y \over dx^2} }[/math] term must be [math]\displaystyle{ 2{dJ \over dx}+{\partial J\over\partial x} }[/math], the [math]\displaystyle{ {dy \over dx} }[/math] term must be [math]\displaystyle{ {d^2J \over dx^2}+{d \over dx}\left({\partial J\over\partial x}\right) }[/math] and

- [math]\displaystyle{ F\left(x,y,{dy \over dx}\right)-{d^2 \over dx^2}\left(I\left(x,y\right)-h\left(x\right)\right)+{d^2y \over dx^2}{\partial J\over\partial x}+{dy \over dx}{d \over dx}\left({\partial J\over\partial x}\right) }[/math]

must be a function solely of [math]\displaystyle{ x }[/math].

Example

Consider the nonlinear third-order differential equation

- [math]\displaystyle{ yy'''+3y'y''+12x^2=0 }[/math]

If [math]\displaystyle{ J\left(x,y\right)=y }[/math], then [math]\displaystyle{ y''\left(2{dJ \over dx}+{\partial J\over\partial x}\right) }[/math] is [math]\displaystyle{ 2y'y'' }[/math] and [math]\displaystyle{ y'\left({d^2J \over dx^2}+{d \over dx}\left({\partial J\over\partial x}\right)\right)=y'y'' }[/math], which together sum to [math]\displaystyle{ 3y'y'' }[/math]. This is exactly the middle term of the equation. For the last condition of exactness,

- [math]\displaystyle{ F\left(x,y,{dy \over dx}\right)-{d^2 \over dx^2}\left(I\left(x,y\right)-h\left(x\right)\right)+{d^2y \over dx^2}{\partial J\over\partial x}+{dy \over dx}{d \over dx}\left({\partial J\over\partial x}\right)=12x^2-0+0+0=12x^2 }[/math]

which is indeed a function only of [math]\displaystyle{ x }[/math]. So, the differential equation is exact. Integrating twice yields that [math]\displaystyle{ h\left(x\right)=x^4+C_1x+C_2=I\left(x,y\right) }[/math]. Rewriting the equation as a first-order exact differential equation yields

- [math]\displaystyle{ x^4+C_1x+C_2+yy'=0 }[/math]

Integrating [math]\displaystyle{ I\left(x,y\right) }[/math] with respect to [math]\displaystyle{ x }[/math] gives [math]\displaystyle{ {x^5\over 5}+C_1x^2+C_2x+i\left(y\right)=0 }[/math], where the factor [math]\displaystyle{ 1/2 }[/math] from integrating [math]\displaystyle{ C_1x }[/math] has been absorbed into the arbitrary constant [math]\displaystyle{ C_1 }[/math]. Differentiating with respect to [math]\displaystyle{ y }[/math] and equating the result to the coefficient of [math]\displaystyle{ y' }[/math] in the first-order equation gives [math]\displaystyle{ i'\left(y\right)=y }[/math], so that [math]\displaystyle{ i\left(y\right)={y^2\over 2}+C_3 }[/math]. The full implicit solution becomes

- [math]\displaystyle{ {x^5\over 5}+C_1x^2+C_2x+C_3+{y^2\over 2}=0 }[/math]

The explicit solution, with numerical factors and signs absorbed into the arbitrary constants, is

- [math]\displaystyle{ y=\pm\sqrt{C_1x^2+C_2x+C_3-\frac{2x^5}{5}} }[/math]
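Both the reduction and the explicit solution can be checked symbolically. The sketch below assumes SymPy and uses illustrative variable names. Rather than differentiating the square root directly, it relies on the identity [math]\displaystyle{ yy'''+3y'y''=\left(y^2/2\right)''' }[/math], which the code also verifies, so that only the radicand needs to be differentiated.

```python
import sympy as sp

x, C1, C2, C3 = sp.symbols("x C1 C2 C3")
y = sp.Function("y")(x)

ode_lhs = y*y.diff(x, 3) + 3*y.diff(x)*y.diff(x, 2) + 12*x**2

# Differentiating the reduced first-order equation twice should give the ODE.
first_order = x**4 + C1*x + C2 + y*y.diff(x)
residual = sp.expand(first_order.diff(x, 2) - ode_lhs)

# Identity used below: y y''' + 3 y' y'' is the third derivative of y^2 / 2.
identity = sp.expand((y**2/2).diff(x, 3)
                     - (y*y.diff(x, 3) + 3*y.diff(x)*y.diff(x, 2)))

# On the explicit solution, y^2 equals the radicand, so the ODE reduces to
# (radicand / 2)''' + 12 x^2 = 0.
radicand = C1*x**2 + C2*x + C3 - sp.Rational(2, 5)*x**5
check = sp.expand((radicand/2).diff(x, 3) + 12*x**2)
print(residual, identity, check)
```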

References

- [1] Wolfgang Walter (11 March 2013). Ordinary Differential Equations. Springer Science & Business Media. ISBN 978-1-4612-0601-9. https://books.google.com/books?id=2jvaBwAAQBAJ&q=%22potential+function%22+exact.

- [2] Vladimir A. Dobrushkin (16 December 2014). Applied Differential Equations: The Primary Course. CRC Press. ISBN 978-1-4987-2835-5. https://books.google.com/books?id=d-5MBgAAQBAJ&q=%22potential+function%22.

- [3] Tenenbaum, Morris; Pollard, Harry (1963). "Solution of the Linear Differential Equation with Nonconstant Coefficients. Reduction of Order Method". Ordinary Differential Equations: An Elementary Textbook for Students of Mathematics, Engineering and the Sciences. New York: Dover. p. 248. ISBN 0-486-64940-7. https://archive.org/details/ordinarydifferen00tene_850.

Further reading

- Boyce, William E.; DiPrima, Richard C. (1986). Elementary Differential Equations (4th ed.). New York: John Wiley & Sons, Inc. ISBN 0-471-07894-8.
